1.1 Introduction

It comes as no surprise that robots are entering society in ever-increasing numbers. In fact, according to the International Federation of Robotics (IFR), there are more than 3,000,000 industrial robots operating in factories around the world.Footnote 1 Robot density, a metric used by the IFR, measures the number of robots per 10,000 workers in an industry. From 2015 to 2020, robot density nearly doubled worldwide, increasing from 66 units in 2015 to 126 units in 2020. In addition, recent reports have indicated that 88 percent of companies plan to invest in adding robots to their organizations and that roughly 400,000 new robots are predicted to enter the market yearly.Footnote 2 When discussing how law, policy, and regulations apply to robots, it is important to realize that not only are industrial robots becoming more prevalent within society, but so too are robots that are more human-like in appearance and behavior and that function in semi- or fully autonomous modes. Such robots, which include health-care robots, service and security robots, entertainment robots, companion robots, and others, are raising unique challenges for the law, policy, and regulations that apply to human–robot interaction (HRI). Such robots are the focus of this Cambridge Handbook, which takes an interdisciplinary approach to the topic.

The rapid spread of humanoid, expressive, and AI-enabled robots throughout society can be attributed to the increasing number of tasks they perform, their ability to display human-like social skills, and the wide range of motions they display. For example, considering mobility, in the United States, Boston Dynamics developed a bipedal humanoid robot whose range of motion and dexterity allows it to participate in extreme sports, such as parkour, and even perform backflips. And considering the social abilities of robots, the humanoid robot Sophia, designed by Hong Kong-based Hanson Robotics, was granted citizenship in Saudi Arabia, due in part to its human-like appearance and expressive interactions with humans.Footnote 3 Additionally, nations view investments in robotics that are intelligent, humanoid, and social as essential for their economic well-being. For example, in Japan, the goal of the Moonshot R&D Program is to overcome, by 2050, many of the current challenges in the design of human-like robots that display sophisticated social skills.Footnote 4 On this point, Moonshot’s ambitious Goal 3 is to develop AI-enabled robots that autonomously learn, adapt to their environment, evolve in intelligence, and act alongside humans in social contexts.

Along with technological advances in the design of humanoid, expressive, AI-enabled, and anthropomorphic robots has come a pressing need to determine how to regulate the behavior of this emerging class of robots given their particular set of skills. On this point, we note that nations such as Japan, China, South Korea, and the United States, along with the EU, are just beginning the process of determining how to regulate AI-enabled robots that are becoming more like us in social skills, form, and behavior. However, the increasing intelligence and human-likeness of social robots point to a future in which determining the appropriate law, policy, and regulations for the design and use of smart robotic technologies will be challenging; among other areas, the challenges will range from contract, criminal, and commercial law to constitutional and human rights law. But it is not just the law that will be challenged by AI-enabled, expressive, humanoid, and anthropomorphized robots; issues of public policy will also be important to consider, and ethical issues will be affected by our personal interactions with social robots.

To discuss the areas of law, policy, and regulations which apply to human interaction with AI-enabled and social robots, we invited an international group of scholars to contribute chapters to this handbook. The chapters in this volume discuss how law, policy, and regulations apply to AI-equipped robots that are becoming increasingly human in appearance and have the ability to detect human emotions and to express emotions themselves, and that are often perceived by users as having a personality, race, or gender. To focus on this emerging class of robots, we organized this book around four topic areas: (1) An Introduction to Law, Policy, and Regulations for Human–Robot Interaction; (2) Issues and Concerns for Human–Robot Interaction; (3) Ethics, Culture, and Values Impacted by Human–Robot Interaction; and (4) Legal Challenges for Human–Robot Interaction. Taken together, the parts provide a comprehensive discussion of law, policy, and regulations that relate to our interactions with robots that are becoming more like us in form and behavior. However, because the development of law and policy for HRI is an emerging area of scholarship and legislative concern, the current volume is designed primarily to provide a conceptual framework for the field and to spur further discussion and research on how law, policy, and regulations can be used to guide our future interaction with highly intelligent and increasingly social robots.

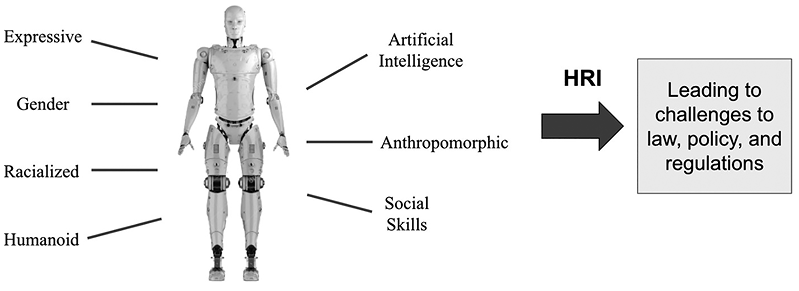

Given the focus of the handbook, it is necessary to define a few terms early in the chapter. Generally, the term “law” refers to a rule of conduct or action that a nation or a group of people agree to follow, whereas a “regulation” is broadly defined as the imposition of rules by government, typically backed by penalties that are intended specifically to modify the (economic) behavior of individuals and firms in the private sector. And “policy” most commonly refers to a rule or plan of action, especially an official one adopted and followed by a group, organization, or government. Additionally, we operationally define “humanoid robots” as those robots that have a human-like appearance in terms of form and behavior. And when we use the term “anthropomorphic robot,” we mean robots that are thought by individuals to behave as if they had human-like characteristics. Finally, by “smart” or “intelligent” we mean robots that are equipped with different AI abilities often allowing sophisticated social interactions to occur with humans. Figure 1.1 shows a drawing of a robot with the set of abilities that form the focus of this handbook.

Figure 1.1 An example of an anthropomorphic robot with a set of skills and abilities offering challenges to law, policy, and regulations for human–robot interaction

Smart, humanoid, and anthropomorphic robots that elicit reactions from individuals as if the robot were in some way human represent a unique class of robots that is posing interesting challenges to current law, policy, and regulations directed at the behavior of emerging smart robotic technology. On this point, Gadzhiev and Voinikanis commented that in the digital age, the development of AI-enabled robotics technology has not only reached a new scale but also raised both socioeconomic and legal problems that we think are exacerbated by the class of robots discussed in this chapter and throughout this handbook.Footnote 5 Of particular interest for humanoid, expressive, and smart robots is the issue of whether such robots should be awarded rights associated with legal persons. As the European Parliament’s 2017 resolution on Civil Law Rules on Robotics revealed,Footnote 6 the issue of legal personhood for robots has not only scientific but also practical or applied significance in the context of law. However, according to current laws and statutes, robots cannot be recognized as legal persons even though they are quickly becoming more autonomous and human-like in appearance and social abilities. For this and other reasons discussed within this handbook, there is a current gap in the law, policy, and regulations that apply to human interaction with robots.

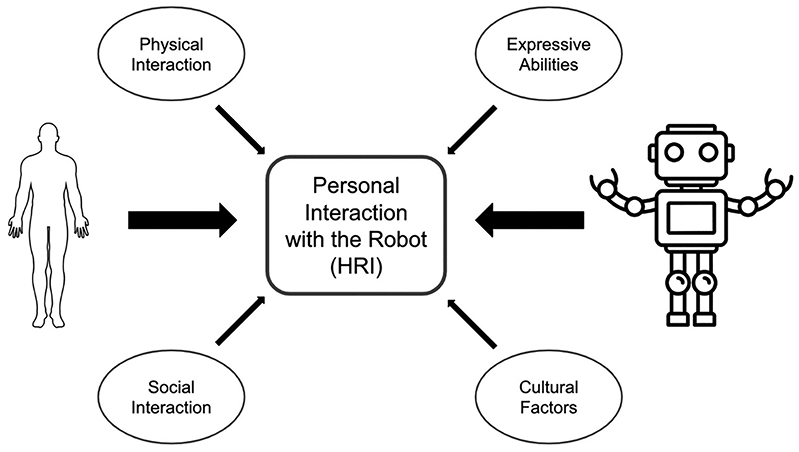

In this handbook, we focus on the interdisciplinary study of law and robotics from a unique perspective in that we emphasize human interactions with robots at an interpersonal level. So, while robotics often focuses on the physical design of intelligent agents that operate in the real world, the study of HRI focuses specifically on the social interactions that occur between humans and robots.Footnote 7 It is at the level of HRI that humans share information and data with robots, form bonds with robots, work together with robots, and socialize with robots. And at the level of HRI, so too do many of the interactions between humans and robots raise public policy concerns and have ethical and legal consequences. Figure 1.2 emphasizes that the focus of the handbook is on interactions with robots at an interpersonal level and the various factors involved. The four topics connected by arrows to the larger box representing HRI are representative of issues that are addressed in this handbook and that are creating a class of robots with skills that challenge current law, policy, and regulations.

Figure 1.2 Human–robot interaction with robots emphasizing interpersonal interactions

1.1.1 Human–Robot Interaction Impacts the Law in Many Areas

As robots become more like us in form and behavior, a regulatory challenge for HRI will stem from the degree to which individuals anthropomorphize a robot, which will be determined in part by the cultural, political, and normative differences existing among populations interacting with robots. Consequently, in an age of increasingly smart, expressive, and humanoid robots, an important issue will be to determine how to implement an international standard of laws and policies designed to regulate HRI, given the diversity of users expected to interact with robots. As an example, anthropomorphic robots can be created based on the appearance of historic figures, celebrities, or other people, but the use of such images for the design of humanoid robots may receive different treatment in different legal jurisdictions (e.g., in the United States, they may violate the Right of Publicity). On this point, consider that in China, a robotics company created a robot with the appearance of the former Japanese Prime Minister Shinzo Abe and made the robot bow and apologize to people in China at a robotics exhibition in Shanghai.Footnote 8 The controversial use of the Abe-bot raises interesting questions of law and policy reflecting different national approaches to the use of robots, not the least of which are which cause of action applies to the disparaging use of the Abe-bot, which court would hear the dispute, and which parties have standing to initiate a legal action. It could even be the case that fundamental issues reflecting an individual’s rights, such as freedom of speech and expression, could be involved.

Another emerging challenge to law, policy, and regulations from the use of humanoid, AI-enabled, and expressive robots is the proliferation and use of sex robots. The application of sex robots ranges from their use as interactive toys to engage a user’s fetishism to intimate companions for people with disabilities who are unable to have a sexual relationship with another natural person. One concern brought forth by the use of robots in general is the displacement of human labor from factory jobs; we note that the same concern has been raised about the use of sex robots to replace human sex workers. For example, in China, there has been an attempt to establish a sex-doll brothel in the high-tech city of Shenzhen;Footnote 9 this has created controversy for several reasons. As the use of sex robots proliferates, one concern is a challenge to societal norms; another is determining the regulatory requirements for sex robots from a health perspective. In either case, without changes, regulations of the robot sex industry will be dependent on existing laws, religious beliefs, political systems, and ideology operating within a particular nation or local community. On this point, in Muslim nations, having sexual relations with a robot would be considered a criminal act based on the application of Islamic law.Footnote 10 However, for a country that supports individual rights, from a social democracy framework, an issue for law would be whether there was informed consent to engage in sexual relations with a robot.Footnote 11 From the previous examples, it is evident that cultural, religious, and political considerations will need to be taken into account as laws, policy, and regulations are created for AI-enabled, expressive, humanoid, and anthropomorphic robots.

As a broad statement, we note that current discussions of a law of robots often focus on solving several well-discussed and important issues that are based on the use of machine learning algorithms to direct a robot’s behavior as it interacts with humans in social contexts. For example, assigning liability for damages resulting from the performance of autonomous or semiautonomous robotic systems has been a topic of discussion and legal scholarship for some time now. While this is an important issue of law that we have contributed to in our own research,Footnote 12 our view is that past scholarship on regulating AI has often overlooked what we describe as a “regulatory gap” between law and AI; this gap has emerged from the use of AI-enabled and anthropomorphic robots that have the ability to detect human emotion, to be expressive themselves, and to interact with people at an interpersonal level. More specifically, current, as well as proposed, regulations for robots interacting with people are not focused on the anthropomorphic and expressive abilities of robots, even though designing such robots is a major initiative among robotics experts and such robots are beginning to challenge different areas of law.

As is discussed within this handbook, there are many other areas of law that will be challenged by robots that are anthropomorphized, are expressive, and interact with people in social contexts. For example, in the area of criminal law, Vuletic and Petrasevic asked whether EU legislators should develop a framework of criminal law rules designed to regulate the behavior of AI-enabled robots and, if so, what behavior the rules would address.Footnote 13 They also commented that ethical and legal guidelines need to be developed to ensure that AI-enabled robots are trustworthy, a user’s privacy is protected, the issue of liability for robots is considered, and user rights are protected. Additionally, they observed that a user’s acceptance of robots within society and their potential psychological dependence on robotic devices were ethical issues that should be addressed in an age of increasingly sophisticated robots.Footnote 14 We expect that these rights and ethical issues will be challenged more and more by the rise of AI-enabled robots interacting with people at an interpersonal level.

However, even with the different approaches discussed earlier for the regulation of robotic technology (more approaches will be discussed later), Baranov and colleagues argue that, given the increasing level of intelligence of robots, various problems and contradictions will occur in efforts to develop legal rules for smart robots.Footnote 15 Specifically, they argue that there is a lack of preparedness in modern legal science to conceptualize and design the legal and technical regulatory acts necessary for robots. Further, they point out that we are especially unprepared to define the responsibility and appropriate protection modes for different interests, rights, and freedoms impacted by robots. Baranov and colleagues did, however, propose that there are two consecutive stages in the regulation of robots. The first includes the development and introduction of necessary changes in existing branches of law to account for advances in robotics; the second includes the conceptual-legal and doctrinal-legal formulation of key priorities which will lead to the creation of a new integrated branch of law, robotics law, which, they argue, will be an independent subject and method of legal regulation. Similarly, in our chapters, we propose that there should be a legal framework developed for robots entering society and argue that the focus of such a legal framework should be at the level of the social interactions between humans and robots.Footnote 16

Another emerging topic for the law to consider is the ability of robots to be deceptive. For example, an AI-enabled robot may engage in “anthropomorphic deception” in which an individual could be deceived into thinking that he or she is interacting with a human rather than a robot; essentially that individual is being deceived into thinking that the robot possesses human abilities beyond those of the robot’s programming. We argue that such robots raise a host of important and timely issues for the law to address but which have not been discussed in depth among legal scholars. Thus, the issue of “robot deception” (along with other challenges to the law) motivates the necessity to regulate the expressive aspects of autonomous and anthropomorphic robots when such robots interact with people. For this reason, a “law of HRI” seems appropriate and timely as it is the interaction between robots and people at the interpersonal level that raises interesting and unique issues for the law to address. Returning to the previous example, we should note here that there have been some initial efforts to regulate robot deception, at least in the context of internet bots. For example, California passed an “internet bot law” in which (under some specific circumstances) the bot must identify itself as an artificial agent. As an extension, we argue that a similar law should also be considered for anthropomorphic and embodied robots interacting with users in the real world.

The California Bot law reads in part … (a) It shall be unlawful for any person to use a bot to communicate or interact with another person in California online, with the intent to mislead the other person about its artificial identity for the purpose of knowingly deceiving the person about the content of the communication in order to incentivize a purchase.Footnote 17

Also discussing bots, Schellekens commented that search engines may provide data collected by bots that are freely available to anybody visiting an internet site.Footnote 18 Extending this observation to robots we ask – how should the law regulate disputes involving robots that are anthropomorphized, intelligent, and humanoid in appearance and that collect information that is shared with third parties without a user’s consent? Broadly speaking, from the perspective of law, we need to ask which regulatory approach should be used to protect an individual’s privacy as anthropomorphic and expressive robots interact with people in social contexts and collect personal data during the interaction. On this point, according to Wong, regulatory systems have often attempted to keep pace with new technologies by recalibrating and adapting current regulatory frameworks to account for new opportunities and risks, to confer rights and duties where appropriate, to offer safety and liability frameworks, and to ensure legal certainty for businesses.Footnote 19 But these approaches are often reactive and sometimes piecemeal and can result in what seems to be an artificial delineation of rights and responsibilities. In addition, complicating the effort to regulate AI-enabled robots is that previous robotic technologies have been considered tools to support human activities, but as machine autonomy and the self-learning capabilities of robots increase, robots are being experienced less and less like machines and tools and more like social entities that are more human-like in appearance and behavior. In fact, we think that humanity is approaching a critical point in our interaction with robots, one from which there is no going back because robots with machine learning algorithms can now “learn,” adapt their performances, and “make decisions” from data and “life experiences” such that they are becoming more autonomous and human-like in behavior. These abilities will create anthropomorphic robots whose behavior will challenge current law, policy, and regulations and will require new approaches for the regulation of robots.

Discussing regulatory schemes being considered for robotics, Villaronga and Heldeweg commented that a dynamic regulatory instrument that coevolves with advances in robot technology is necessary; that is, as advances in smart humanoid robots continue to occur, a regulatory scheme with the ability to adapt to this development is crucial. But based on past efforts to regulate technology, this will be difficult. In response, one suggested approach to regulate robots that are becoming more human-like in skills and behavior is to employ a regulatory impact assessment procedure and evaluation based on simulation methods and the use of “living labs” which could be used to empirically study and evaluate robots. Such a procedure would provide roboticists with a practical tool to determine what regulations may be needed during the life cycle of a robot and would also be useful in helping to fill the existing regulatory gaps caused by the speed of the technological progress occurring within robotics and especially for robots that interact with people in social contexts.Footnote 20 Discussing this approach, Weng and his colleagues reviewed the “Tokku” Special Zone for Robotics Empirical Testing and Development program that is located in Japan.Footnote 21 Also discussing the regulation of robots, Salvini and colleagues reviewed the administrative, criminal, and civil aspects of Italian law in order to determine whether and how current legal regulations in Italy impact robot deployment in urban environments.Footnote 22 They noted that under Italian law, there is currently a lack of legal authorizations for autonomous mobile robots operating on public roads; similarly, there is a lack of regulations for interpersonal interactions occurring between humans and robots, even though human interaction with increasingly smart robots affects the full array of rights awarded people, such as the right to privacy, the protection of people’s bodies, spaces, properties, and communications, and the protection of people’s self-development in their intellectual, decisional, associational, and behavioral dimensions.Footnote 23

Given human interaction with AI-enabled robots, the issue of privacy, as mentioned earlier, has also become an important problem to consider for HRI. AI-enabled robots are interacting with people in their homes, in work environments, and within public spaces, and these interactions often challenge traditional doctrines of law such as the “reasonable expectation of privacy” in US constitutional law, or the principle of informed consent in most data protection jurisdictions.Footnote 24 Within the EU, under the principle of “data minimization,” a data controller is required to limit the collection of personal information to what is directly relevant and necessary to accomplish a specified purpose. While an interesting approach for the Internet, this may be difficult to apply to interpersonal interactions between humans and mobile robots.Footnote 25 For data privacy in HRI, the goal of embedding legal safeguards and constraints into the design of robotic technology is to address the limits of current regulations and to guarantee the transparency of data processing and the control of data and information. Here we argue that privacy by design should make transparent what data the robot processes, thus letting users or masters of the robot have control over the robot, while excluding third parties from access to data.

1.1.2 The Law, AI, and the Impact on Human–Robot Interaction

We discussed earlier how the law is necessary to govern the impact of increasingly anthropomorphic and smart robots experienced in social contexts. It is also the case that the application of the law to human interaction with robots may depend on matters of trust, social acceptability, and cohesion between humans and robots, which, as we discussed previously, largely depend on the norms and culture within which the HRI takes place. Several chapters of this handbook are devoted to these issues given the different legal systems and cultures experiencing robots. However, we do not mean to say that international law or models of legal governance play no role in this context; rather, we should be attentive to the different approaches of national and quasi-federal legislators (e.g., EU law) and their crucial role in the regulation of anthropomorphic and AI-enabled robots.

In addition to domain-specific regulations of AI-enabled robots in the fields of medical devices, civil aviation, finance, and so on, legislators may opt for an all-embracing “horizontal approach” to the challenges of AI-enabled technologies. To some extent, this has been the approach of the EU institutions. For example, in the EU, the Parliament, the Council, and the Commission have time and again argued in their reports and resolutions that the “European values” enshrined in the Charter of Fundamental Rights (CFRs) from 2000 should be protected against misuses and overuses by AI systems. In 2021, the European Commission presented a first draft of the Artificial Intelligence Act, or “AIA,” which is particularly relevant for robotics and HRI as it aims to regulate all high-risk uses of AI systems, except when AI systems are “developed or used exclusively for military purposes” (Art. 2(3) of the first draft of the AIA). Although it is likely that several parts of the AIA will be reformulated through the institutional process of amendments at the European Parliament and European Council levels, the overall architecture of the regulation is clear in its application to emerging robots. Interestingly, within the EU several applications of anthropomorphic robots under discussion within this handbook already fall under the provisions and binding rules of the AIA and thus we discuss them here.

The aims of the AIA can be summed up with five points. First, the intent of the AIA is to ban a set of unacceptable uses of AI that trigger a clear threat to the safety, life, and other rights of individuals. According to Art. 5 of (the first draft of) the AIA, AI practices that are prohibited include the deployment of “subliminal techniques beyond a person’s consciousness” (Art. 5(1) lett. a); the abuse of people’s “vulnerabilities” due to their age, physical or mental disability (lett. b); certain uses and services of social scoring by public authorities (lett. c); and the use of real-time biometric identification systems, which admits, however, certain exceptions (lett. d). With the above in mind, we note here the ability of robots to scan an individual’s face for facial recognitionFootnote 26 and to detect an individual’s emotions.Footnote 27

Second, the AIA rests on the difference between high-risk AI systems and AI systems that do not raise high risk. That which should be considered a “high-risk AI system” is determined by an annex of the normative act, that is, Annex III of the AIA on the uses of technology. High-risk AI systems are subject to strict obligations and mandatory requirements before they can be placed into the market (Art. 9-15). This is one of the parts of the AIA that has ignited controversies. Annex III of (the first draft of) the Act determines when an AI system is high-risk pursuant to Article 6(2), according to the uses of the AI system. An AI system can be considered high risk when employed in several different areas that span from biometric identification and categorization of natural persons to management and operation of critical infrastructure; education and vocational training; employment, workers management, and access to self-employment; access to and enjoyment of essential private services and public services and benefits; law enforcement; migration, asylum, and border control management; and down to the administration of justice and democratic processes.

Third, providers of non–high-risk AI systems are encouraged to create their own codes of conduct, pursuant to no. 81 and Art. 69(2) of the proposal. In other words, although an AI-equipped robot can be considered non–high risk (e.g., robot puppies), manufacturers of such robots, such as NAO, are encouraged to create codes of conduct. In between the top-down regulation of high-risk AI systems and the self-regulation of other than high-risk AI systems, Art. 52 of (the first draft of) the AIA, similar to the California bot law discussed earlier, establishes some “transparency obligations for certain AI systems.” In particular, “providers shall ensure that AI systems intended to interact with natural persons are designed and developed in such a way that natural persons are informed that they are interacting with an AI system, unless this is obvious from the circumstances and the context of use” (Art. 52(1)). Likewise, Art. 52(2) of the draft establishes that “users of an emotion recognition system or a biometric categorization system shall inform of the operation of the system the natural persons exposed thereto.” These transparency obligations seem particularly relevant for anthropomorphic robots under discussion within this handbook that would not be high-risk pursuant to the “areas” of Annex III of the regulation. Thus, for a low-risk use of a robot, which accordingly falls beyond the reach of Annex III, there would still be transparency obligations for producers and designers of such low-risk robots.

Fourth, the EU legislators endorse some of the new regulatory schemes for robotics we mentioned in previous sections, that is, the regulatory sandboxes “in support of innovation” pursuant to Art. 53 of (the first draft of) the AIA. As a form of experimentalist governance,Footnote 28 or derogation and open access,Footnote 29 the creation of legally deregulated zones for the empirical testing and development of AI and emerging technologies should “lead to a larger uptake of trustworthy artificial intelligence in the Union,” in accordance with no. 81 of the Act. The development of such special zones of legal and technological experimentation is complemented with the governance model enshrined in Title VI of the Act, in particular, as regards the role of the new European Artificial Intelligence Board (EAIB) (Art. 56). The EAIB can be understood as a meta-regulatory board developed to prevent the fragmentation of the EU system and to clarify the implementation of the new regulation, for example, as concerns the authorizations of EU Member States for the development of the regulatory sandboxes of Art. 53.

Finally, it is likely that the proposal of the EU Commission will be amended to include further legal issues posed by AI, but not included in the first draft of the AIA. Some of these legal issues closely relate to the design, manufacture, and use of anthropomorphic robots. It is noteworthy that the mandatory requirements for high-risk AI systems pursuant to Art. 9-15 of (the first draft of) the AIA do not include any commitment against adverse environmental impacts, unless AI systems raise a direct threat to “the health and safety, or a risk of adverse impact on fundamental rights.” As a result, most proposals on the “environmental sustainability” of robotic technology, including AI-enabled robots, are left to voluntary initiatives put in place by providers of non–high-risk AI systems as concerns, for instance, the formation of codes of conduct set up with Art. 69(2). This lack of attention to the challenges of AI and its overall sustainability has led to recommendations by scholars for academic research centers to formalize amendments to the current proposal of the Commission.Footnote 30 According to several scholars and institutions, the AIA should comprise the assessment of the environmental impact of AI.Footnote 31 It is likely that the new version of the EU act will include it. We find the previous discussion on the environmental impact of AI to be an area that should be extended to robots and their potential environmental impact.

1.2 Types of Law to Consider for Human–Robot Interaction

As a guiding principle for the regulation of AI-enabled and anthropomorphic robots, laws should be clear, transparent, and predictable tools for their governance. However, the formal and due process procedures involved in legislation often make “hard laws” inflexible in the context of rapidly changing robotic advances. But in addition to the enactment of hard laws, other approaches can be considered for the regulation of robot technology. In this section we briefly discuss AI-hard laws, AI-soft laws, and non–AI-hard laws.

(1) AI-hard laws refer to formal legal regulations that are designed with a central focus on AI, including its design, use, or other related legal issues. An example is the provisions of the AIA in EU law discussed in the previous section.

(2) AI-soft laws refer to opinions, recommendations, and guidelines initiated by public institutions. An example is the opinions of the European Data Protection Board.

(3) Non–AI-hard laws refer to the many existing legal regulations that were not originally created with a focus on AI, but that still apply to the governance of robots. An example is the provisions of the GDPR in EU law.

Against this backdrop, it is worth mentioning the approach taken in Japan for the regulation of robots. Japan’s approach to AI governance is mainly focused on a series of AI-soft laws developed by Japanese ministries and governmental agencies. Further, the Japanese Society for Artificial Intelligence (JSAI) has published ethical guidelines that all JSAI members and their AI agents are expected to abide by.Footnote 32 Considering that quality assurance will be a critical issue for AI products, the QA4AI (Quality Assurance for Artificial-Intelligence-based products and services) Consortium has published Guidelines for Quality Assurance of AI-based Products and Services, which help AI developers evaluate the quality of their AI products from the aspects of data integrity, model robustness, system quality, process agility, and customer expectation.Footnote 33 In some circumstances, AI-soft laws can be a good option for AI developers in Japan, as they can help avoid the possibility of overregulation from rigid AI-hard laws, and they can be used to protect business secrets through voluntary self-regulation. The interpretation of the (hard) law in Japan is often formalistic and pedantic; therefore, to prevent this risk, the Japanese government has opted for a soft-law approach, which helps producers of AI-equipped robots because most decisions are left to them through the process of self-regulation.

However, a concern about AI governance in Japan is the regulatory gap arising from potential conflicts between emerging AI applications and the many non–AI-hard laws that are already in force in Japanese society. To address this problem, the Japanese government uses deregulation as a strategy, allowing empirical experiments with AI and robots to be conducted inside many special zones around the country. This form of experimentalist governance results in an interesting convergence among jurisdictions, as with EU law and Art. 53 of the (first draft of the) AIA, although the EU mostly relies on an AI-hard law, rather than an AI-soft law, approach.

1.3 Ethical and Design Considerations and Human–Robot Interaction

In this section, we briefly discuss efforts to design ethical robots for HRI, as we think the goal of designing ethical robots goes hand-in-hand with law, policy, and regulations for HRI. Note that the basic approach discussed in this section is to design ethical robots through the design team’s selection of hardware, software, and machine learning techniques that direct the robot’s behavior. Considering the process of design, in the late 1960s, Herbert A. Simon lamented the lack of research on “the science of design” that characterized the curricula of both professional schools and universities.Footnote 34 Later, in Code and Other Laws of Cyberspace, Lawrence Lessig similarly stressed the lack of research on the impact of design on both social relationships with technology and the functioning of legal systems. Lessig also observed that human behavior can be shaped by the design of spaces, places, and artefacts.Footnote 35 Since Simon’s and Lessig’s comments, the application of human-centric design principles has become a common approach to creating robots that act ethically toward humans. Considering the challenges involved in creating ethical robots, the approach of “value sensitive design” (VSD), which considers human values during the design process, is particularly useful for HRI in order to ensure robots are attentive to human needs. On this point, Cheon and Su, using the VSD approach, interviewed robotics scientists to discover the values they used for designing humanoid robots.Footnote 36 In interviews with twenty-seven roboticists, they found that the roboticists’ values were shaped primarily by their engineering-based background, which emphasized robotics as a field of integration. From this, one conclusion was that VSD principles should be integrated into the design process for humanoid robots.

Early efforts to control the behavior of robots, which were predominantly used for industrial purposes, mainly focused on safety concerns for industrial robots working in close proximity to humans on factory floors. At this time, robots were regulated by the equivalent of Occupational Health and Safety Acts as they were enacted in different nations. For example, a regulation that emerged for industrial robotics was a straightforward and reactive rule for HRI: when an emergency button is pressed, the robot must stop its operations. However, when human-collaborative robots began to enter factory production lines and other spaces, robot safety regulations needed to be updated to include the increased risks to users resulting from expanded HRI scenarios. One approach taken was to consider which design features should be the subject of legal regulations using the principles expressed in “Safety by Design.” A major aspect of Safety by Design is that it is not aimed at retrofitting safeguards after a safety issue has occurred; rather, its principles are used to minimize threats by anticipating, detecting, and eliminating harms before they occur. As an example, in designing the robot Baxter, the design team considered using lightweight and compliant materials, software control when coworking with a user in close proximity, dynamic braking for the robotic device, and human awareness.Footnote 37 In addition, for AI-enabled humanoid robots designed for service and health-care industries, design teams have also used the principles of Safety by Design by considering the ethical, legal, and social issues (ELSI) posed by service and health-care robots. As an example, van Wynsberghe proposed a framework for the ethical evaluation of care robots which focused on ethical concerns during the design stage of robots.Footnote 38

Professional societies are also becoming active in offering ethical design principles for AI-enabled technologies. For example, the IEEE Standards Association launched the developer-focused 7000 series of AI ethics standards, which cover different topics in ethics and design for AI systems and also apply to robotics. In particular, the IEEE Std 7000™-2021 standard provides an operational procedure for developers to identify ethical considerations in their systems:Footnote 39

The procedure uses a system engineering standard approach integrating human and social values into traditional systems engineering and design.

It includes processes for engineers to translate stakeholder values and ethical considerations into system requirements and design practices.

The standard advocates a systematic, transparent, and traceable approach to addressing ethically oriented regulatory obligations in the design of autonomous intelligent systems.

To summarize this section, the idea of using “humanistic” (or user-centered) design principles for HRI emphasizes the point that ethical, moral, and legal issues are often impacted by how humans interact with robots at the level of interpersonal interactions. Employing user-centered principles for robots during the process of design may not only limit the impact of harm-generating behavior by robots but may also encourage people to change their behavior as they interact with robots by widening their range of options and choices.Footnote 40 Given the different aims of design for robots (from functional to ethical), it seems that lawmakers should consider policies and regulations for HRI that lead to a fair and just society given our interactions with expressive, AI-enabled, humanoid, and anthropomorphic robots.

1.4 Conclusions

In this chapter we introduced AI-enabled, expressive, humanoid, and anthropomorphic robots that are currently entering society and challenging established principles of law, policy, and regulations. It is undeniable that as time goes by, robots will continue to gain intelligence and human-like abilities, essentially becoming more like us in form and behavior. As robots become more like us, so too will our interactions with them raise issues of law and policy that are similarly triggered by human interactions with other people. We already see this happening, from criminal and civil law disputes that involve robots to commercial law and human rights violations that have been impacted by algorithmic decision-making. And extending the ability of AI-enabled robots further into the future, we may eventually reach an inflection point (or singularity) where robots exceed human levels of intelligence, which will surely raise new and significant issues of law for entities that may someday argue for rights themselves.

The main position taken in this introductory chapter has been that as robots gain social skills and interact with people at an interpersonal level, HRI becomes the focal point where issues of law, policy, and regulations are impacted. For example, an assault involves physical contact, conversations with robots can lead to privacy violations, and robot-made decisions about individuals may violate the individual’s human rights. As these examples show, how to regulate the risks and challenges from AI-enabled and anthropomorphic robots will be an important and complex challenge for legislators and designers of robotic technology. We close by calling for more scholarship that focuses on how humans interact with robots at an interpersonal level, as we think HRI is a key concern for the development of law, policy, and regulations that relate to robots.