1 Introduction

Psychological measures, tests, and assessments are ubiquitous in many societies (Reference Oakland, Douglas and KaneOakland et al., 2016; Reference Zlatkin-Troitschanskaia, Toepper, Pant, Lautenbach and KuhnZlatkin-Troitschanskaia et al., 2018). One widespread use has been for tracking academic progress. In the United States, scores on standardized tests contribute to progression to the next grade level and decisions about admission to college as well as to rankings of schools and evaluations of teachers (Reference LemannLemann, 2000; Reference Moss, Pullin, Gee and HaertelMoss et al., 2005; Reference YoungYoung, 2021). Similar uses in England include standardized testing in primary grades, high-stakes examinations at the end of secondary school, and publicly available rating systems (Reference Rimfeld, Malanchini and HanniganRimfeld et al., 2019; Reference SantoriSantori, 2020). In China, a tradition of examinations extends back centuries, and contemporary National College Entrance Examinations determine college entry (Reference Bodenhorn, Burns and PalmerBodenhorn et al., 2020; Reference RotbergRotberg, 2010). Beyond testing academic progress, school psychologists also use assessments of social, behavioral, and emotional behaviors to screen children for referrals to intervention in countries around the world (Reference Oakland, Douglas and KaneOakland et al., 2016). And, these school-based usages intersect with clinical and organizational psychology use of tests, as a component of diagnoses of psychiatrically defined disorders and of workplace hiring and promoting (Reference BenjaminBenjamin, 2005; Reference Rothstein and GoffinRothstein & Goffin, 2006).

Measures, tests, and assessments address the challenge that many key concepts are not directly observable in the psychological sciences, and related social, health, and educational sciences (i.e., are latent). It is therefore common to measure latent constructs using things that are observable, such as a series of questions about knowledge, behaviors, expressions, and attitudes that individuals can report. For instance, the Aggression Questionnaire measures individuals’ tendencies toward aggression through their answering on a five-point scale how characteristic of them (labeled extremely uncharacteristic, uncharacteristic, neither characteristic nor uncharacteristic, characteristic, and extremely characteristic) are a few dozen actions such as “I flare up quickly but get over it quickly” and “If somebody hits me, I hit back” (Reference Buss and PerryBuss & Perry, 1992; Reference Buss and WarrenBuss & Warren, 2000). As another example, the Achenbach System of Empirically Based Assessment (ASEBA, n.d.) includes versions that ask parents, teachers, and children to report about children’s behaviors. Responses to various subsets of items contribute to summary scores in relation to empirically based syndromes and psychiatric diagnostic classifications – for example, contributing to scores on an aggressive behavior syndrome for young children are statements like “Easily frustrated,” “Doesn’t seem to feel guilty after misbehaving” and “Physically attacks people” which are reported as being not true, somewhat/sometimes true, or very/often true of the child.

Because of the ways such measures gatekeep access to opportunities and mark individuals with prestigious or stigmatizing statuses, their use has been contested (Reference LemannLemann, 2000; Reference Moss, Pullin, Gee and HaertelMoss et al., 2005; Reference YoungYoung, 2021). Social movements in the 1960s, for instance, heightened attention to the question of whether measures fairly assessed abilities across groups, such as between those who were assigned as female versus male or as Black versus White (Reference Byrne, Oakland and LeongByrne et al., 2009; Reference Davidov, Meuleman, Cieciuch, Schmidt and BillietDavidov et al., 2014). Considerations of fairness addressed questions such as: Do various groups define a construct in the same way? Do the groups view similar knowledge, behaviors, expressions, and attitudes as reflective of the construct? Do groups vary in how they interpret or report about a particular expression or behavior? Continuing the example of tendencies toward aggression introduced earlier, considerations of fairness might include asking whether some groups interpret aggressive conduct as reflecting a behavioral disorder and others do not – for example, would some groups view hitting someone back after being hit as reflective of such a disorder, and other groups consider such a response as a reasonable defense of self? Considerations of fairness might also concern what has been termed social desirability bias – the tendency for a person to adjust their responses to be in line with what the person thinks is the expected answer (Reference Duckworth and YeagerDuckworth & Yeager, 2015). In other words, for some individuals and some contexts, affirmation of the statement “If somebody hits me, I hit back” might be viewed as a sign of strength, and thus potentially overreported (i.e., endorsed by some people who do not actually hit back when hit), and for others such affirmation might be seen as a weakness and thus potentially underreported (i.e., not endorsed by some people who do actually hit back when hit).

Such complexities in how concepts are defined and interpreted and controversies about how tests are used have led psychometricians to expand strategies for assessing fairness, including the central concept of measurement invariance (also known as a lack of measurement bias; AERA/APA/NCME, 2014; Reference Camilli and BrennanCamilli, 2006; Reference XiXi, 2010). Formally defined in subsequent paragraphs, measurement invariance broadly entails the degree to which a measure’s questions operate similarly across groups. As early twenty-first century societies again contend with systemic inequities, and movements call for equity and antiracism, the need is urgent for psychologists to comprehensively consider measurement invariance (Reference Han, Colarelli and WeedHan et al., 2019).

Despite the need for examining measurement invariance as an aspect of fairness, the capacity of the field to do so is limited. Partly the limited field capacity reflects minimal training of students and scholars in psychometrics, and particularly item response theory (IRT) approaches. Over a decade ago, a national survey of graduate programs in psychology in the United States, for instance, found that two-fifths offered no training in IRT and less than one in ten offered full coverage (Reference Aiken, West and MillsapAiken et al., 2008). A Canadian survey likewise found few offerings in advanced statistics, including structural equation modeling (Reference Golinski and CribbieGolinski & Cribbie, 2009). Limited coverage was again identified in a recent US national survey that found nearly one quarter of graduate programs completely lacked coverage of psychometrics in introductory statistics courses and another fifth restricted coverage to a single class period or less (Reference Sestir, Kennedy, Peszka and BartleySestir et al., 2021). The consequences of limited field capacity are amplified by the complexity of early strategies for empirically identifying measurement invariance. Iterative approaches for measurement invariance testing are particularly labor intensive, especially when groups are numerous (Reference Cheung and LauCheung & Lau, 2012). Implementing these approaches therefore required particularly advanced levels of programming skill. And, substantive scholars required basic understanding of the techniques in order to best understand the rationale for such investment of time and effort and in order to draw inferences for theory and practice from the volumes of results.

These challenges may contribute to the relative lack of publications documenting invariance for measures commonly used in psychology. For instance, in a large-scale analysis of fifteen widely used measures in social and personality psychology (such as the Rosenberg Self-Esteem Scale), whereas nearly all measures demonstrated good evidence of internal consistency, only one demonstrated good evidence of measurement invariance (Reference Hussey and HughesHussey & Hughes, 2020). A review of a representative sample of articles from the Journal of Personality and Social Psychology also revealed that the majority of articles reported only reliability coefficients as structural validity evidence; the review authors noted that although they “observed numerous studies which tested hypotheses about numerous populations (e.g., age-groups, cultures) … only one tested measurement invariance” (Reference Flake, Pek and HehmanFlake et al., 2017).

The purpose of this tutorial is to support developmental scientists in using and interpreting one recently developed technique for empirically identifying measurement invariance and adjusting for the invariance that is revealed, the alignment method (Reference Asparouhov and MuthénAsparouhov & Muthén, 2014, Reference Asparouhov and Muthén2023; Reference Muthén and AsparouhovMuthén & Asparouhov, 2014). The alignment method was developed for cross-national research (Reference Asparouhov and MuthénAsparouhov & Muthén, 2014; Reference Marsh, Guo and ParkerMarsh et al., 2018; Reference Muthén and AsparouhovMuthén & Asparouhov, 2014), and has been applied more extensively in that field than in other areas of psychology (e.g., Reference Bansal, Babinski, Waxmonsky and WaschbuschBansal et al., 2022; Reference Bordovsky, Krueger, Argawal and GruczaBordovsky et al., 2019; Reference Bratt, Abrams, Swift, Vauclair and MarquesBratt et al., 2018; Reference Gordon, Davidson, Jones, Lesaux and BarnesGordon et al., 2022; Reference Lansford, Rothenberg and RileyLansford et al., 2021; Reference Rescorla, Adams and IvanovaRescorla et al., 2020). The alignment method differs from other measurement invariance techniques in making it straightforward to allow for partial invariance in which some questions similarly reflect a construct across groups and other questions differ in their relationship to the construct across groups. Other measurement invariance techniques have been designed to detect whether invariance holds or not, and offer less guidance or require more complicated strategies when invariance is rejected.

We not only provide an accessible introduction to the key concepts undergirding the alignment method but we also: (a) show how to implement it in the software package Mplus using algorithms written by the alignment approach’s authors, (b) provide an R package for reading the volumes of results (openly accessible through GitHub), and (c) detail how to interpret the results. Importantly, our focus is on the kinds of multi-category (e.g., Reference LikertLikert, 1932) questions common in psychology (such as the five- and three-category response options in the examples of measuring tendencies toward aggression provided earlier). In contrast, existing tutorials and applications of the alignment method have primarily focused on continuous and dichotomous items (e.g., Reference Sirganci, Uyumaz and YandiSirganci et al., 2020). We also differ from prior coverage of the alignment approach with categorical items (e.g., Reference Svetina, Rutkowski and RutkowskiSvetina et al., 2020) in demonstrating how to convert the results to probability units. Probability units help make the results meaningful to substantive scholars and broader stakeholders. In other words, percentages are familiar to many given widespread use, whereas a model coefficient (such as a logit) may be less familiar. In the context of measurement invariance, a difference of 50 to 30 percent may be seen as large, whereas a difference of 41 to 39 percent may be seen as small, when comparing the chances that members of one group versus another would be rated to have “hitting back” behavior be extremely characteristic of them, despite being estimated to have equal latent tendency toward aggression. To illustrate how to implement the alignment method and interpret its results, we offer an empirical example, with code and data available in supplementary materials. Before considering this empirical example, we begin with a conceptual introduction to measurement invariance followed by a formal presentation of central mathematical models.

1.1 Introduction to Measurement Fairness

Much has been written about fairness in measurement, including from those contesting historical uses of standardized testing, from those suggesting ways to conceptualize cross-cultural variations in concepts and their measurement, and from those proposing specific strategies to psychometrically test for invariance and to address its absence (Reference Dorans and CookDorans & Cook, 2016; Reference Hui and TriandisHui & Triandis, 1985; Reference Johnson and GeisingerJohnson & Geisinger, 2022; Reference MossMoss, 2016). In this section, we introduce a portion of these writings relevant to understanding the alignment method. Given the limited training in psychometrics across the field of psychology reviewed earlier, we start with a general introduction to concepts of measurement and then discuss the importance of considering intersectionality and categorical items when testing for measurement invariance.

1.1.1 General Measurement Concepts

Similar to regression models allowing psychologists to see if empirical evidence is consistent with theoretical expectations about how one construct relates to another, psychometric models allow psychologists to see if empirical evidence is consistent with theoretical expectations about which knowledge, behaviors, expressions, and attitudes reflect a latent construct. Different from the core fundamentals of regression modeling, however, terminology and epistemology vary considerably across the psychometric literature. In the limited space of a tutorial, we are selective in what we cover.

One way we are selective is related to terminology, where we prioritize the term measure whenever possible as we are discussing concepts and offering interpretations. Some psychometric writing instead uses the terms tests or assessments. Likewise, we aim to use the term questions whenever possible to encompass what are sometimes referred to as items or prompts. One reason for our prioritization of the terms measure and question is to avoid implying that the alignment method can only be used with standardized or academic tests and assessments. Another reason is that we find the terms measure and question can be received by some audiences as more neutral. In contrast, the terms test and assessment can call to mind uses that are high stakes or that imply universally defined and expressed constructs. When introducing terminology and discussing mathematical models, however, we use the words test and item when doing so reflects conventions (e.g., item response theory; differential item functioning, item-level invariance). Here, our goal is to make this tutorial accessible to those already familiar with these conventional terms and to make the cited references accessible to those who want to learn more after reading the tutorial. Even as we do so, we encourage continued reflection and renaming in the field to prioritize inclusivity of terminology.

Another way we are selective in the context of the tutorial is in our focus on measurement invariance in general and the alignment method in particular. This focus allows us to limit our presentation to a set of concepts and techniques that can be covered within space constraints. At the same time, this focus can place out of sight the ways in which testing for measurement invariance is one aspect of a broader project of continuous measure improvement. We thus emphasize that we do in fact see measurement invariance testing as one component of an iterative process of accumulating and considering multiple pieces of evidence before any particular use of a measure. This process may include evidence provided by a measure’s developers, yet would also include evidence in the local use context and sharing of ownership, data, and interpretations with an array of stakeholders, including those responding to the measure and those impacted by its scores. Such consideration of local evidence and inclusion of an array of stakeholders are central components of fairness in general, and are relevant to considerations of measurement invariance in particular. The need to revisit the evidence for each potential use reflects the reality that the groups relevant to consider in relation to measurement invariance will differ across applications, and those being measured and impacted by scores will have insights into the meaning of constructs and their expressions. This inclusivity is especially important in fields where measures were historically developed by and with persons of limited diversity, and a critical gaze can illuminate areas of historical bias in the field itself and opportunities for future equity.

With such a critical gaze, we can draw from the body of psychometric models and writings to be part of a more inclusive approach, while recognizing historical biases. The field of psychometrics has itself evolved over time in understanding, reflecting, and changing its approach to fairness. As an example, the Standards for Educational and Psychological Testing, published collaboratively by the American Psychological Association along with the major educational and measurement societies (AERA/APA/NCME, 2014), reflects the latest in a series of publications dating back to the 1950s. The most recent standards elevated fairness as a “fundamental validity issue” that is an “overriding foundational concern” with a central issue being “equivalence of the construct being assessed” across groups (p. 49). This fundamental nature of fairness is in contrast to the prior standards, published in 1999, which limited fairness to specific populations (e.g., persons with disabilities, English language learners; Reference Johnson and GeisingerJohnson & Geisinger, 2022).

The latest standards also embrace a unified validity framework (Reference MessickMessick, 1989). What had been seen as distinct types of validity (e.g., content, criterion, consequential) are now recognized as multiple pieces of validity evidence that are brought together when making a decision about whether a measure is suitable for a particular use. Fairness in general, and measurement invariance in particular, can be seen as one aspect of this body of validity evidence. The body of validity evidence is also now seen as continually accumulating, rather than static at the time a test was published. The latest standards advise decisionmakers to use a range of strategies, including various psychometric models, as they make a determination regarding the extent to which the full body of evidence supports a proposed use for a measure. In other words, whereas historically a decisionmaker might have cited internal consistency reliability or factor analyses reported in a publisher’s manual, contemporary decisionmakers would be encouraged to either locate evidence of validity for their specific use or, if none was available, to build such evidence. This evidence would include demonstrating that the measure’s questions demonstrated measurement invariance across the relevant groups and in the local context of a specific application.

Tests of measurement invariance in general, and the alignment method in particular, thus offer empirical evidence related to a measure’s validity. Results from testing measurement invariance, including with the alignment method, can be combined with other aspects of validity evidence to inform conclusions about multiple aspects of measurement fairness (Reference Byrne, Oakland and LeongByrne et al., 2009; Reference Davidov, Meuleman, Cieciuch, Schmidt and BillietDavidov et al., 2014). At a conceptual level, if the alignment method indicated very little evidence of measurement invariance, decisionmakers might want to reconsider whether and how the construct is defined across groups. If partial invariance was identified, the instances of non-invariance might be probed to consider whether groups differed in terms of what knowledge, behaviors, expressions, or attitudes were reflective of varying levels of the construct. This probing might include how group members interpret the measure’s questions that demonstrate non-invariance. This probing might also include considering the extent to which their responses are affected by social stereotypes and norms related to the measured construct. And, this probing might include considering whether the context in which the measure is administered heightens aspects of social desirability.

Strategies to use might include those from the field of measurement theory and practice for using psychometric model results to iteratively improve measures, including by engaging substantive experts to precisely define constructs and to write questions to reflect those constructs (Reference Boulkedid, Abdoul, Loustau, Sibony and AlbertiBoulkedid et al., 2011; Reference Evers, Muñiz and HagemeisterEvers et al., 2013; Reference Lane, Raymond and HaladynaLane et al., 2016; Reference Wolfe and SmithWolfe & Smith, 2007a, Reference Wolfe and Smith2007b). Examining patterns of results, in dialogue with diverse stakeholders and informed by scientific and indigenous concepts, literatures, and practices can lead to tentative interpretations (Reference ChilisaChilisa, 2020; Reference SablanSablan, 2019; Reference SpragueSprague, 2016; Reference Walter and AndersenWalter & Andersen, 2016). Such tentative interpretations might be examined through future revisions of the measure. Complementary methods such as cognitive interviewing, item reviews, and focus groups can also inform interpretations. Throughout this process, collaborators may see that many aspects of measurement fairness are interrelated, as scrutinizing a measure’s questions may lead to revised understandings of concepts, and altered definitions of concepts may result in updating a measure’s questions. Engaging a range of stakeholders and variety of methods supports iterative and continuous improvement, including representatives from those being measured as well as content and methods experts, all inclusive of the groups being assessed.

As an example, we collaborated with a school district to iteratively improve a measure of students’ social-emotional competencies using psychometric approaches including the alignment method. The school district engaged students, teachers, principals, and other stakeholders in interpreting the results. In a Student Voice Data Summit, for instance, students thought different patterns of socialization influenced why high school boys were more likely to endorse a question about “staying calm when stressed” as easy to do compared to high school girls, believing that boys were less likely to admit feeling stress compared to girls, who were more often socially encouraged to discuss their emotions freely. Following findings indicating measurement non-invariance between students who identified as Latino and Latina, the district’s research and practice teams partnered on a project to adapt lessons in their social-emotional learning curriculum based on the findings (Reference Gordon, Davidson, Jones, Lesaux and BarnesGordon & Davidson, 2022).

1.1.2 Importance of Considering Group Intersections

A limitation in historical considerations of measurement fairness, including in psychology, has been a focus on a small number of groups. Indeed, early methods and tutorials often assumed two groups, one focal and one reference (Reference FinchFinch, 2016). With the critical gaze discussed previously, this approach can be seen as problematic, by assuming a binary and privileging one group as focal and othering the second reference group. Cross-national research in contrast more often considered measurement invariance across many groups. The alignment method arose in the latter context, designed to facilitate empirical identification and adjustment of measurement invariance with many groups. Although this cross-national application still tended to consider groups of a single type (multiple nations), the alignment method can be further extended to consider groups defined by layering together multiple aspects of identities, what we refer to as multilayered groups.

One way to define such multilayered groups would be to cross-classify multiple variables. If an existing data source had classifications of sex (e.g., male, female) and race-ethnicity (e.g., Black, White, Asian, Latino/a), then eight multilayered groups might be defined (i.e., Black male, Black female, White male, White female, Asian male, Asian female, Latino, Latina), for instance. Groups could also be defined in flexible ways, such as if some participants preferred to label their own identities or to not use labels, including those identifying as queer, nonbinary, or fluid. And, groups could be defined using theoretical paradigms that consider how systems of power intersect in ways that may amplify, mute, or transform one another dynamically, as in the concept of intersectionality (Reference CrenshawCrenshaw, 1989). By facilitating this flexibility, the alignment methods might be used by scholars to interrogate measurement fairness from a range of theoretical perspectives and incorporate social-justice oriented modern data science, (Reference Covarrubias, Vélez, Lynn and DixsonCovarrubias & Vélez, 2013; Reference Garcia, López and VélezGarcia et al., 2018; Reference SablanSablan, 2019). To achieve larger sample sizes in various groups of interest, integrative analyses of multiple datasets might be used (Reference Fujimoto, Gordon, Peng and HoferFujimoto et al., 2018).

1.1.3 Importance of Accounting for Multi-Category Items

Many psychological measures include questions that have multiple categories, such as Likert-type response structures and the five- and three-category response options offered in earlier examples. Yet, similar to the origins of regression modeling, numerous psychometric methods were first developed assuming continuous variables, and psychologists often continue to rely on these methods. We demonstrate how to use the alignment method with multi-category items. Doing so better conforms the model assumptions with the data. Doing so also allows for presentation of results in ways that are meaningful to substantive scholars and to a range of stakeholders: the probabilities of choosing various categories. Doing so additionally reduces the chance of overlooking important aspects of measurement invariance that are revealed in the category probabilities.

Although the statement that psychometric models used should be designed, implemented, and interpreted recognizing the questions’ multi-category structure seems obvious, it has been common for scholars and analysts to adopt models designed for continuous items when questions are multi-category (Reference Rhemtulla, Brosseau-Liard and SavaleiRhemtulla et al., 2012). Even when models designed for multi-category questions are used, interpretations of the substantive meaning of results can be incomplete (Reference GordonGordon, 2015; Reference Meitinger, Davidov, Schmidt and BraunMeitinger et al., 2020; Reference Seddig and LomazziSeddig & Lomazzi, 2019). Analogous to applying regression models designed for continuous versus multi-category outcomes, results can sometimes be robust across specifications (Reference LongLong, 1997; Reference Long and FreeseLong & Freese, 2014). Yet, robustness across specifications should be evaluated in any particular application and not assumed. And, when models appropriate to multi-category outcomes are used, interpretation requires additional steps to convert to substantively meaningful metrics (e.g., probabilities vs. logits; Reference LongLong, 1997; Reference Long and FreeseLong & Freese, 2014).

In other words, when a measure asks individuals to choose among a set of categorical responses to a question, results are meaningful when reported in terms of response probabilities. We might find, for instance, that 60 percent of one group versus 40 percent of another group are predicted to “strongly agree” with a statement, despite both groups being estimated to have the same level of the underlying construct being measured. We could contrast this result with another where, say, the predicted percentages were 51 percent for the first group and 49 percent for the second group. Here, we discuss how the alignment method makes such calculations. Again, our goal is to make it easier to take this step from estimation to interpretation, given the volumes of results produced by the alignment method and given the need to convert results to meaningful metrics. This goal is consistent with implementation science and related strategies for encouraging the adoption of advanced methods (Reference King, Pullman, Lyon, Dorsey and LewisKing et al., 2019; Reference SharpeSharpe, 2013). Reporting in meaningful units makes results more accessible to a range of stakeholders, including those being measured and the substantive scholars, practitioners, policymakers, family, peers, and community members who draw inferences from the scores (Reference Gordon, Davidson, Jones, Lesaux and BarnesGordon & Davidson, 2022; Reference MossMoss, 2016). In other words, many will be familiar with percentages and probabilities from day-to-day usage, whereas fewer may be familiar with the logits. In line with modern statistical reporting standards, probabilities also allow stakeholders to consider the real-world importance of a difference, beyond its statistical significance.

1.2 Introduction to Psychometric Methods for Testing Measurement Invariance

There are two general types of psychometric models that can accommodate multi-category questions: item factor analysis (IFA) and item response theory (IRT; Reference Embretson and ReiseEmbretson & Reise, 2000; Reference Liu, Millsap and WestLiu et al., 2017; Reference MillsapMillsap, 2011). Each has been used to test for measurement invariance. The alignment method uses IFA during estimation. The alignment method also allows results to be translated to IRT format. By presenting both approaches, we support readers connecting to their own prior study of one or both of these methods as well as to the related literatures on each method. We also demonstrate the ways in which each tradition offers insights into measurement invariance.

1.2.1 Review of General Concepts of Item Factor Analysis and Item Response Theory

Psychometric models generally aim to produce empirical evidence regarding the extent to which responses to a measure’s set of questions are consistent with the presence of the proposed latent construct. Many psychologists will be familiar with factor analysis, although likely with its most typical presentation assuming continuous items. Here, factor loadings are often of focus, capturing the strength of the association between the item and the latent construct. Underlying the factor analytic model are a series of regressions of the continuous item responses on the latent construct, with the loadings being the regression coefficient and a factor intercept also being present. As we formalize in the next section, IFA uses a similar formulation, although when items are recognized as having multiple categories (as opposed to being continuous), there are multiple factor thresholds rather than a single intercept. The specification we feature is analogous to ordinal logistic regression, which may be familiar to some readers. Just as ordinal logistic regression can produce predicted probabilities for each level of a categorical outcome variable, so too can IFA predict the probabilities of a respondent choosing each category of a multi-category item.

As we show next, the specification we focus upon is also mathematically equivalent between IRT and IFA formulations – that is, the graded response model applied to items in which participants can choose one and only one response from a presented set of categories designed to reflect an ordinal progression (Reference SamejimaSamejima, 1969, Reference Samejima, van der Linden and Hambleton1996, Reference Samejima, Nering and Ostini2010). What differs between the IRT and IFA formulations is how the focal parameters are defined. IRT defines item discriminations (rather than the factor loadings of IFA) and item difficulties (rather than the factor intercepts/thresholds of IFA). We provide the standard formulas that allow for calculation of item discriminations from factor loadings and the calculation of item difficulties from factor intercepts/thresholds. Despite the mathematical equivalence of IRT and IFA models, they developed separately, and there is considerable terminology unique to each. Within IRT, it is also the case that numerous subliteratures exist, and various writers adopt different terminology. We introduce a subset of the terms most relevant to understanding measurement invariance (see Reference Nering and OstiniNering & Ostini, 2010, for comprehensive coverage and cross-walking of terminologies across psychometric models for multi-category items).

Both IRT and IFA models can produce summary scores that differ from a traditional approach of simply summing or averaging item responses. Doing so is important both for accurately estimating group differences on the contrasts and for conveying results to stakeholders. In terms of accuracy of estimation, under IRT and IFA, summary scores are the estimated locations on the latent construct of the people being measured, often in a log-odds (logit) metric. One advantage of the logit-metric estimates is that they are on an interval scale, thus well meeting the assumptions for calculating statistics such as means, standard deviations, and regression coefficients. In contrast, the spacing between an items’ categories is unknown. The labels of a question’s categories typically imply ordinality (e.g., very uncharacteristic, uncharacteristic, neither characteristic nor uncharacteristic, etc.), yet respondents may interpret categories in ways that differ from ordinality and, even when used in an ordinal fashion, the distances between adjacent categories can vary. Psychometric models can test for and reveal such usages, and estimate placement on a latent interval scale. Doing so increases precision of estimation and statistical power.

The person location estimates in a logit metric can also be readily compared to estimates of the latent locations of the items being used to measure the people, which are calculated to be on the same scale. Comparisons between person locations and item locations can facilitate interpretation, including by displaying results graphically to a wide range of stakeholders (Reference Crowder, Gordon, Brown, Davidson and DomitrovichCrowder et al., 2019; Reference Morrell, Collier, Black and WilsonMorrell et al., 2017). For instance, graphs can reveal when questions tend to fall above or below the latent locations of a sample’s respondents – in common parlance, when questions tend to be “hard” or “easy” for sample members. And, graphs can reveal whether questions tend to be spread out along the latent construct, or concentrated in certain regions. In our work with school districts in assessing students’ social-emotional competencies, we have used such graphs to help stakeholders think about where questions might need to be added to better distinguish among students of different competency levels. Practitioners resonated with an analogy to a ruler. For instance, we found initially that many items fell below a set of students’ competency levels, indicating that most students endorsed all of the competencies asked about in the set of questions, but few items fell in a range that would well differentiate among students in terms of competency level. We looked to the district’s standards for social-emotional competency at higher grade levels, and used focus groups with students and teachers, in an effort to develop items spread across the higher levels of competency (Reference Gordon, Davidson, Jones, Lesaux and BarnesGordon & Davidson, 2022).

We have also found that various stakeholders resonate with viewing the results in terms of the probabilities of choosing the response categories of a multi-category item. Key to measurement invariance is that such probabilities are predicted for children with the same estimated level of the latent construct. For instance, in the measure of young children’s aggressive behaviors noted earlier, we might find that the model predicted for one group of students that teachers had a 0.25 probability of choosing the option of “not true” for the question “Easily frustrated,” a probability of 0.40 for the option of “somewhat/sometimes true,” and a probability of 0.35 for the option of “very/often true.” These results might be contrasted to those for another group of children being 0.50, 0.30, and 0.20, respectively, despite these students having an estimated level of the latent tendency for aggression equivalent to the first group of students.

Such probabilities are often: (a) first predicted from the model across a range of person locations on the latent construct, and (b) then presented graphically in what are referred to as category probability curves. The reason for making calculations at a range of person locations on the latent construct is that we want to interpret the extent to which response probabilities vary across the groups despite focusing on individuals from each group who have the same latent level of the construct. In the example of the item “Easily frustrated,” we would use formulas from the model to make the calculations of category probabilities and cumulative probabilities for children estimated to be located at low-, mid-, and high- levels of tendencies toward aggressive behavior. Within any given latent location of persons, the probabilities sum to one across the categories, consistent with our focus on items that allow participants to choose one and only one response.

Sometimes, cumulative probability curves are also graphed. Cumulative probabilities sum probabilities across a range of a variable’s values. In the models for ordinal (e.g., Likert-type) response structures considered in this manuscript, the range is a set starting with a certain category and then including any category above it (Reference Embretson and ReiseEmbretson & Reise, 2000; Reference Samejima, Nering and OstiniSamejima, 2010). These cumulative probabilities are featured in some models rather than the individual category probabilities because constraints on the cumulative probabilities can simplify models and ensure that the model’s estimates conform with an ordinal assumption.

For a three-category item, there would be three such sets for cumulative probability calculations. For instance, in our previous example of a measure of young children’s aggressive behaviors, the individual categories would be 1 = not true, 2 = somewhat/sometimes true, and 3 = very/often true. In the first cumulative set, we would start with category 1 and then also include the two categories above it, leading to the set

![]() . This first cumulative set thus includes all three of the individual categories. In the second set, we would start with category 2 and then also include the one category above it, leading to the set

. This first cumulative set thus includes all three of the individual categories. In the second set, we would start with category 2 and then also include the one category above it, leading to the set

![]() . This second cumulative set thus includes the two higher categories. In the third set, we would start with category 3 and there would be no categories above it, leading to the set

. This second cumulative set thus includes the two higher categories. In the third set, we would start with category 3 and there would be no categories above it, leading to the set

![]() . This third cumulative set thus includes only the highest category.

. This third cumulative set thus includes only the highest category.

To calculate the cumulative probabilities, the probabilities of the individual categories in each set are summed together. Earlier, we offered, for the example of a measure of young children’s aggressive behaviors, a 0.25 probability of choosing the first option of “not true,” a probability of 0.40 for the second option of “somewhat/sometimes true,” and a probability of 0.35 for the third option of “very/often true.” We can symbolically represent these individual category probabilities as

![]() ,

,

![]() , and

, and

![]() . The cumulative probability for the first set of {1,2,3} would then be

. The cumulative probability for the first set of {1,2,3} would then be

![]() . Note that for the models featured in this manuscript, the sum of the probabilities for all categories will always be one because participants must choose one and only one response. As a result, this set including all categories is typically not shown in graphs, as its probability would always be one. The cumulative probability for the second set of {2,3} would be

. Note that for the models featured in this manuscript, the sum of the probabilities for all categories will always be one because participants must choose one and only one response. As a result, this set including all categories is typically not shown in graphs, as its probability would always be one. The cumulative probability for the second set of {2,3} would be

![]() . In other words, the probability is 0.75 that a teacher selects either the second or the third category for the example item. The cumulative probability for the third set of {3} would be

. In other words, the probability is 0.75 that a teacher selects either the second or the third category for the example item. The cumulative probability for the third set of {3} would be

![]() . This cumulative probability for the highest category is often graphed, although it’s helpful to keep in mind that the highest category’s cumulative probability curve will be the same as its individual category probability curve. And, of course, the greater the number of categories, the more cumulative probability curves there are between the curve for the lowest and highest values. In other words, whereas for the example of a three-category response structure, there was only one “middle” category, there would be five “middle” categories for a seven-category response structure.

. This cumulative probability for the highest category is often graphed, although it’s helpful to keep in mind that the highest category’s cumulative probability curve will be the same as its individual category probability curve. And, of course, the greater the number of categories, the more cumulative probability curves there are between the curve for the lowest and highest values. In other words, whereas for the example of a three-category response structure, there was only one “middle” category, there would be five “middle” categories for a seven-category response structure.

1.2.2 Conventional Strategies to Empirically Identify Measurement Invariance

Before turning to the alignment method in the next section, we introduce conventional strategies for empirically identifying measurement invariance and estimating its substantive size, both with the IFA and the IRT frameworks. We do so because the alignment method shares some terminology with these approaches and uses some of the conventional models as a starting point. Each of the IFA and IRT approaches to measurement invariance allows us to examine the core fairness question of whether response probabilities are statistically equivalent across groups within levels of the latent construct. However, each uses somewhat different terminologies and approaches.

Item Factor Analysis.

In factor analysis, the term measurement invariance is common, here meaning that the factor loadings and intercepts/thresholds are statistically equivalent across groups (Reference MillsapMillsap, 2011). A standard approach to examining model-level measurement invariance under the IFA framework has emerged that involves estimating a series of nested models. The configural model allows each group to have its own estimates, including of the factor loadings and thresholds for every item. The metric model constrains the loadings to be equal across groups for all items, but allows thresholds to be freely estimated for each group on each item. The scalar model constrains both the loadings and the thresholds to be equal across groups for every item. If the metric or scalar models show meaningfully worse fit (in ways illustrated herein), then model-level invariance is rejected, referred to as measurement non-invariance (Reference MillsapMillsap, 2011). Estimating group-specific models such as these is often referred to as multi-group confirmatory factor analysis.

In situations of non-invariance, the next step would be to probe item-level measurement invariance to see which parameters (loadings, intercepts/thresholds) differ for which groups on which items. If a subset of items’ parameters is invariant across groups, then a partially invariant model might be specified (Reference Byrne, Shavelson and MuthénByrne et al., 1989). The advantage of this partially invariant model is that scale scores can be linked across groups through the items that operate equivalently across groups. At the same time, other items’ parameter estimates are allowed to differ across groups. No single approach to establishing partial invariance under the IFA framework has achieved consensus as preferred, however. One iterative strategy involves first estimating a metric or scalar model and then using modification indices (expected changes in model fit) in order to choose a first parameter to free (i.e., to allow its value to differ across groups). The parameter selected to be freed has the largest modification index. The process is repeated iteratively until a good fitting model is established. Criticisms of this approach, and other multi-step processes with numerous decision points include: (a) the approaches become unwieldy with many groups and many items, (b) the selected model may not be unique in the current sample (if any judgment is needed in selecting among similar modification indices), and (c) the selected model may be best fitting for the particular sample but not likely to replicate across other samples (Reference Cheung and LauCheung & Lau, 2012).

Item Response Theory. Numerous IRT approaches have also been developed that focus on item-level invariance testing, referred to as empirical tests of differential item functioning (DIF; Reference Osterlind and EversonOsterlind & Everson, 2009). Although akin to the spirit of IFA tests of measurement-invariance, the traditions producing IRT-based DIF tests used a different logic. First, persons from different groups (e.g., usually two, such as those assigned as female and those assigned as male) but with equivalent levels of the latent construct were identified. Second, within each set of individuals sharing the same level of the latent construct, item response probabilities were compared between the groups (e.g., between those assigned as female and male, among the set identified as having a lower level of the latent construct; between those assigned as female and male, among the set identified as having a higher level of the latent construct). A key challenge of these approaches is that the strategy for identifying equivalent levels of the latent construct either requires making assumptions or requires using iterative strategies. For instance, sometimes all but one of the items are assumed to be DIF-free, so that summary scores based on those items can be used to identify equivalent groups. Then, DIF is tested for the remaining item. If a subset of DIF-free items can be identified, then they are used as anchors in a model that allows the remaining items to have their own parameters across groups. Estimating a model anchored in this way is often referred to as concurrent calibration, with the anchor items serving as the link putting scores on the same scale across the groups (Reference Kolen and BrennanKolen & Brennan, 2014). As in the example of individuals assigned as female and male, it is also the case that early tests for DIF assumed two groups, treating one as reference and one as focal, whereas psychologists may be interested in numerous groups (potentially defined by the intersection of multiple variables) and may be interested in all of the pairwise comparisons among these groups (including to avoid privileging one group over another).

Substantive Size. The final topic we introduce from conventional approaches to testing measurement invariance is strategies to calculate the substantive size of identified differences (Reference MeadeMeade, 2010). In other words, substantive size would be larger if the difference in predictive probabilities was 0.20 points (0.60 vs. 0.40) in comparison to 0.02 points (0.51 vs. 0.49). Although there is no single agreed upon strategy for doing so, some assessment of substantive size should be a priority given the complexity of statistical power in the measurement non-invariance context. Whereas model-level tests of measurement invariance have been found to be underpowered when sample sizes are not balanced across groups (Reference Yoon and LaiYoon & Lai, 2018), item-level approaches are often vulnerable to accumulation of Type I error across numerous tests within a single estimation and across iterative estimations. Scholars have also debated whether item-level invariance matters if groups do not differ statistically in their average measure-level scores. This situation can occur if item-level invariance is offsetting across groups. In other words, on some items, members of Group A may have higher probabilities of choosing higher response options than Group B, despite equivalent latent construct levels; yet, on other items, the reverse may be true. Later, we illustrate one approach for considering substantive size at the measure and item levels.

1.3 Introduction to the Alignment Method

The alignment method was developed to address limitations of conventional approaches to testing measurement invariance (Reference Asparouhov and MuthénAsparouhov & Muthén, 2014; Reference Muthén and AsparouhovMuthén & Asparouhov, 2014). Whereas prior methods focused on two groups, the alignment method allowed many groups. Whereas prior methods required users to implement iterative methods manually, the alignment method’s built-in algorithms automated an iterative process. The algorithm also uses a loss function designed to identify an optimal solution, more likely to be replicable. And, the developers programmed the alignment method in Mplus in such a way that many desired results to support interpretation are available in the output. Our R package makes it easy to read the voluminous results produced for measures with categorical questions to present the results in graphical or tabular form, and to make additional calculations.

1.3.1 Nontechnical Description of the Alignment Method

As detailed later, the alignment method relies on an algorithm to identify a set of parameters that minimizes item-level differences across groups. Sets of groups with invariant and non-invariant parameters are identified for each parameter of each item. Then, any such item-level differences across groups are taken into account when group-level means on the latent construct are compared. Although the alignment method estimates the model using IFA, the IFA loadings and thresholds can be translated to the IRT scale of discriminations and difficulties. Predicted probabilities of category responses can also be calculated. Together, the IFA and IRT frameworks allow scholars to draw upon a wide range of established literatures and interpretive strategies.

1.3.2 Nontechnical Description of Strengths and Limitations Relative to Other Approaches

A key strength of the alignment method is that it facilitates identification of a partially invariant solution, with the algorithm programmed in an automated way, avoiding the need for the user to make iterative choices. The benefits of such automation increase as the number of groups (and number of items) increase. The alignment method also can bridge the IFA and IRT traditions. Whereas the alignment method is estimated under the IFA metric, the developers provide equations to translate results to IRT metrics. We have programmed the equations for translation from the IFA to the IRT metric into an R package to facilitate their use. At the same time, the alignment method has limitations. One limitation is that current implementations build specific default criteria into its automated algorithm, such as criteria of p < 0.01 and p < 0.001 for identifying group differences, discussed shortly. Another limitation is that the alignment method has been implemented to date in Mplus for some but not all types of multi-category models. And, the alignment method is expected to work best when item-level invariance is moderate – that is, found in some but not most items. An approximate limit of 25 percent non-invariance has been suggested to ensure that alignment method results are trustworthy, especially if sample size is small (e.g., n = 100 vs. 1000; Reference Muthén and AsparouhovMuthén & Asparouhov, 2014, p. 3). Although existing simulations suggest the alignment method can identify population-level measurement invariance and well match results from conventional approaches (e.g., Reference FinchFinch, 2016), more work is needed into its algorithmic criteria and assumptions, as we discuss later. The Mplus output from the alignment method is also voluminous, and one objective of this tutorial is to offer strategies to facilitate presentation and interpretation of these results.

One important alternative approach that has been increasingly used by developmental scholars to examine measurement invariance is the multiple-indicator multiple-cause (MIMIC) model and its offshoots, which predict latent variables and their indicators by observed variables (Reference BauerBauer, 2017; Reference Hauser and GoldbergerHauser & Goldberger, 1971). The MIMIC model implements differential item functioning in the context of a structural equation model. For simplicity of the presentation, we consider a single latent variable (i.e., factor) regressed on an observed grouping variable (e.g., sex, male and female) with the goal to estimate group mean differences on the factor. Each item that contributes to the factor is also tested for DIF by regressing the item on the grouping variable (e.g., sex). Differential item function is indicated when group membership significantly predicts item responses in this structural equation modeling context that also accounts for group mean difference at the factor level. There is no consensus in the literature of how MIMIC models should be used to test for DIF. But with some small differences, the common procedure is as follows, similar to the iterative procedure already described for identifying partial invariance (Reference WoodsWoods, 2009). First, fit a baseline model assuming no DIF in any item. Then use modification indices to determine if freely estimating a parameter that was fixed would contribute improvement of the model fit. In relation to DIF, items with a “large” modification index for the path between the observed characteristic and the item are flagged. One obvious challenge is how to decide how large a modification index must be. An alternative approach to using modification indices to identify parameters to free is to iteratively test each item for DIF by assuming that all other items are invariant. Under this approach, the baseline model is statistically compared with each one of the subsequent models.

Both the alignment method and the MIMIC model have value, and here we emphasize some reasons why developmental scientists might choose the alignment method. At one level, the two approaches can be seen as nearly equivalent. As is the case in structural regression models, the explicit definition of multilayered groups defined by their values across several separate variables can, with the appropriate specification, be statistically equivalent to defining interactions among the separate variables. Yet, the alignment method is designed in ways that readily accommodate multilayered groups, whereas interactions are more common in the MIMIC models. And, the two approaches vary in what information is foregrounded – the parameters estimated for each unique group (as in the alignment method), or, the significance of differences in parameters among groups (as in the MIMIC model with interactions). Again, the approaches can give equivalent information – differences among group parameters can be tested in the former approach and the multilayered group estimates can be recovered from the interactions. Yet, when three of more separate variables are considered, the numbers of interactions, interpretations of their parameters, and calculations of multilayered groups’ estimates become increasingly complex. In other words, a MIMIC model might include a three-way interaction, three two-way interactions, and three terms for the three component variables. If some of the interactions are significant, then postestimation calculations such as simple slopes and predicted values would be needed for interpretation. The results for multilayered groups would be revealed through careful calculation and presentation of such results. In contrast, the separately defined multilayered groups center the identities of the persons forming the groups and retain explicit meaning – for example, Black females living in the rural South of the United States, Black females living in the urban North of the United States. The alignment method not only facilitates elevating these multilayered groups, but also supports getting the kind of information an interaction model would offer, by automating the detection of which groups’ estimates are statistically equivalent and statistically different. As we come back to in the discussion, the alignment method still has room for improvement. One of its current limitations is that it allows for only one set of groups with equivalent parameters. Yet, the promise of the alignment method encourages future developments to address this constraint.

Of course, in either the alignment method or a MIMIC model, a limiting factor in defining multilayered groups is sample size. One advantage of the alignment method is that small group sizes are made salient, because the sample size for each group is listed. Some groups defined by multiple characteristics may be clearly too few to support estimation. Limited sample size in certain intersections of separate variables also reduces support for testing interactions, although potentially less saliently (e.g., by noticing standard errors are large, if not explicitly checking the sample sizes in relevant cross-classifications of categorical variables or regions of continuous variables). This relative salience is akin to the way in which propensity score matching makes areas of support and lack thereof more explicit than covariate controls in structural regression models. A limiting factor in the multilayered groups approach is that it is best suited for capturing the intersection of categorical variables. Whereas continuous variables could be categorized, doing so can suffer from the well-recognized loss of information in categorizing continuous variables (Reference Royston, Altman and SauerbreiRoyston et al., 2005). At the same time, when interactions of continuous and categorical variables are examined, nonlinearities in the continuous variables’ moderating effect require strategies such as using varying functional forms (i.e., polynomials, splines, or logarithmic transformations). Adding such terms complicates interpretation. Best practice would be to calculate predicted values for each group, because the parameter estimates shown in the output would not directly indicate when one group’s estimate falls above or below another group’s. For instance, if polynomials were allowed to vary across groups, one group’s pattern might be quadratic and another group’s cubic. Increasingly complex functional forms may mask areas of small sample size and thus limited support. In other words, the better fit of a quadratic shape relative to a linear shape might be sensitive to a single outlying case that pulls the slope upward. Although it is possible to use careful data screening methods to detect such outliers and to use postestimation calculations to interpret results, some users may find the alignment method easier to implement and interpret given the sample sizes for each multilayered group are directly presented and given output provides both the within-group estimates and the across-group comparisons.

In short, we see the alignment method and MIMIC models as complementary. As psychology and other disciplines encourage attention to replication and robustness of results and related sharing of data and code, the complementary methods can be used within and across research teams. Our goal in the tutorial is to present the alignment method so that more teams can use it in these complementary efforts.

2 Formal Presentation of Psychometric Models

We begin the formal mathematical representation of the graded response model using its typical presentation from the IRT framework where it was developed. We then present it with an IFA parameterization, showing how parameters can be translated between the two frameworks. We then cover the alignment method, specific to the graded response model. We end with one approach to gauging the substantive size of group differences in item response probabilities, Raju’s signed and unsigned areas.

2.1 The Graded Response Model: Item Response Theory Parameterization

The common IRT representation of the graded response model’s cumulative probabilities is

(1)

(1)

Notice that the two equivalent equations differ in the sign on

![]() . We provide both since each appears in the literature we cite. In the formulas, four indices account for: (a) persons, k; (b) items,

. We provide both since each appears in the literature we cite. In the formulas, four indices account for: (a) persons, k; (b) items,

![]() ; (c) response categories, c; and (d) the boundaries between adjacent response categories (i.e., difficulties), j. The number of response categories (

; (c) response categories, c; and (d) the boundaries between adjacent response categories (i.e., difficulties), j. The number of response categories (

![]() ) equals the number of difficulties (

) equals the number of difficulties (

![]() ) plus one (i.e.,

) plus one (i.e.,

![]() ). There is one discrimination parameter per item,

). There is one discrimination parameter per item,

![]() . There are

. There are

![]() difficulties for each item. The cumulative probability,

difficulties for each item. The cumulative probability,

![]() , is specific to each category of each item and is conditional on

, is specific to each category of each item and is conditional on

![]() , the location for person

, the location for person

![]() on the latent construct. Notice that the difference between the person location on the latent construct and the difficulty,

on the latent construct. Notice that the difference between the person location on the latent construct and the difficulty,

![]() , is a central determinant of the probability. When the person is located above the threshold (

, is a central determinant of the probability. When the person is located above the threshold (

![]() , the probability will be higher; when the person is located below the threshold (

, the probability will be higher; when the person is located below the threshold (

![]() ), the probability will be lower. In other words, if a person has more of the latent construct than is represented in the difficulty parameter, they will be more likely to choose the higher categories. That the discrimination,

), the probability will be lower. In other words, if a person has more of the latent construct than is represented in the difficulty parameter, they will be more likely to choose the higher categories. That the discrimination,

![]() , acts as a slope is reflected as it multiplies this difference. Thus, a large discrimination magnifies the difference between the location of the person and the location of the difficulty parameter.

, acts as a slope is reflected as it multiplies this difference. Thus, a large discrimination magnifies the difference between the location of the person and the location of the difficulty parameter.

The category probabilities,

![]() , are calculated by taking the difference between adjacent cumulative probabilities. For instance, when there are three categories, the probability of the middle category would be calculated as

, are calculated by taking the difference between adjacent cumulative probabilities. For instance, when there are three categories, the probability of the middle category would be calculated as

![]() . The probability of the lowest category is calculated as

. The probability of the lowest category is calculated as

![]() (the presence of one in this equation reflects the probability of choosing at least one of the three categories being one, as noted previously). The probability of the highest category is calculated as

(the presence of one in this equation reflects the probability of choosing at least one of the three categories being one, as noted previously). The probability of the highest category is calculated as

![]() . The presence of zero in this equation reflects there being no categories higher than three (and thus zero probability of choosing such nonexistent categories). The cumulative probabilities can also be calculated as sums of category probabilities (e.g.,

. The presence of zero in this equation reflects there being no categories higher than three (and thus zero probability of choosing such nonexistent categories). The cumulative probabilities can also be calculated as sums of category probabilities (e.g.,

![]() ).

).

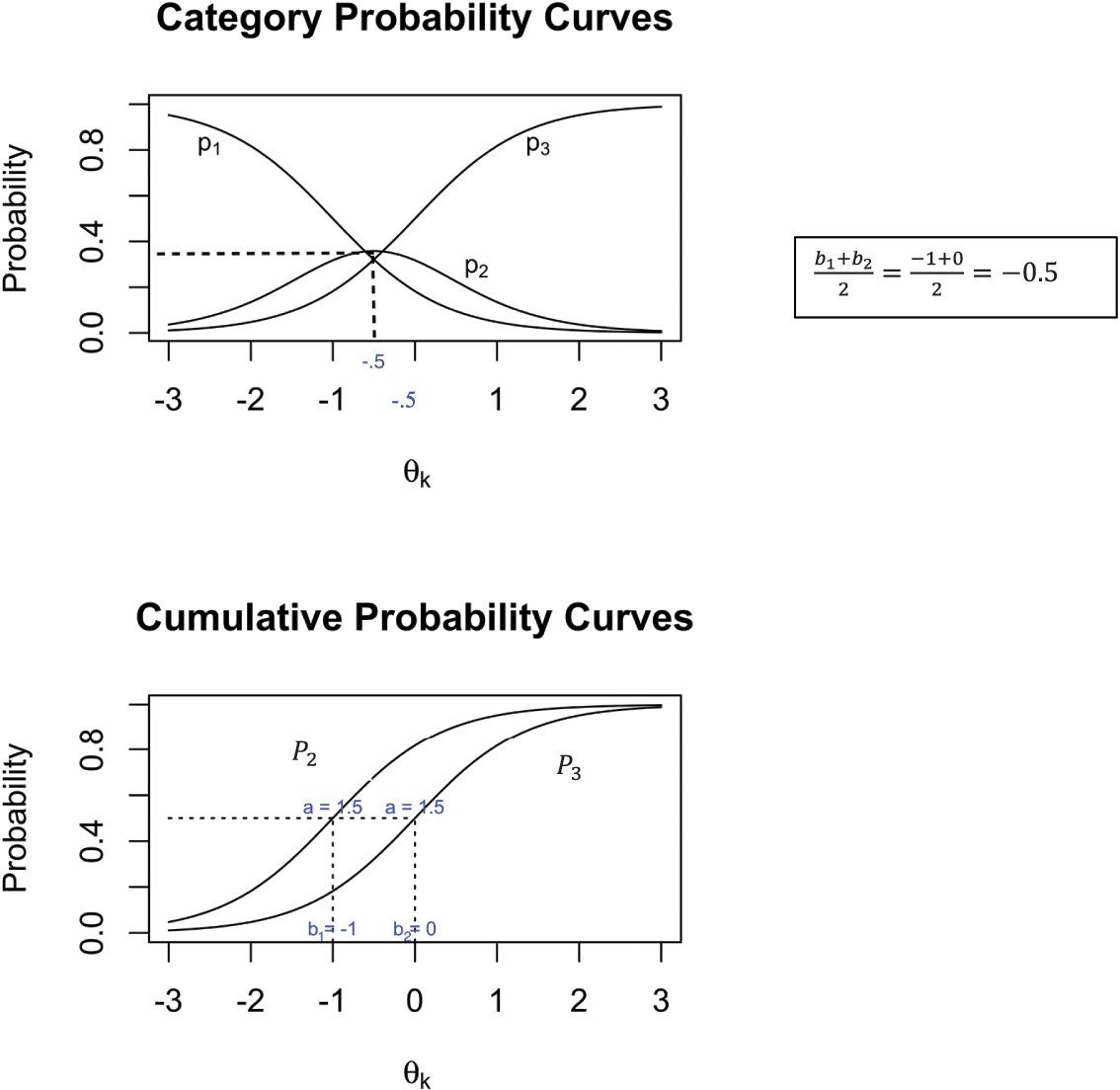

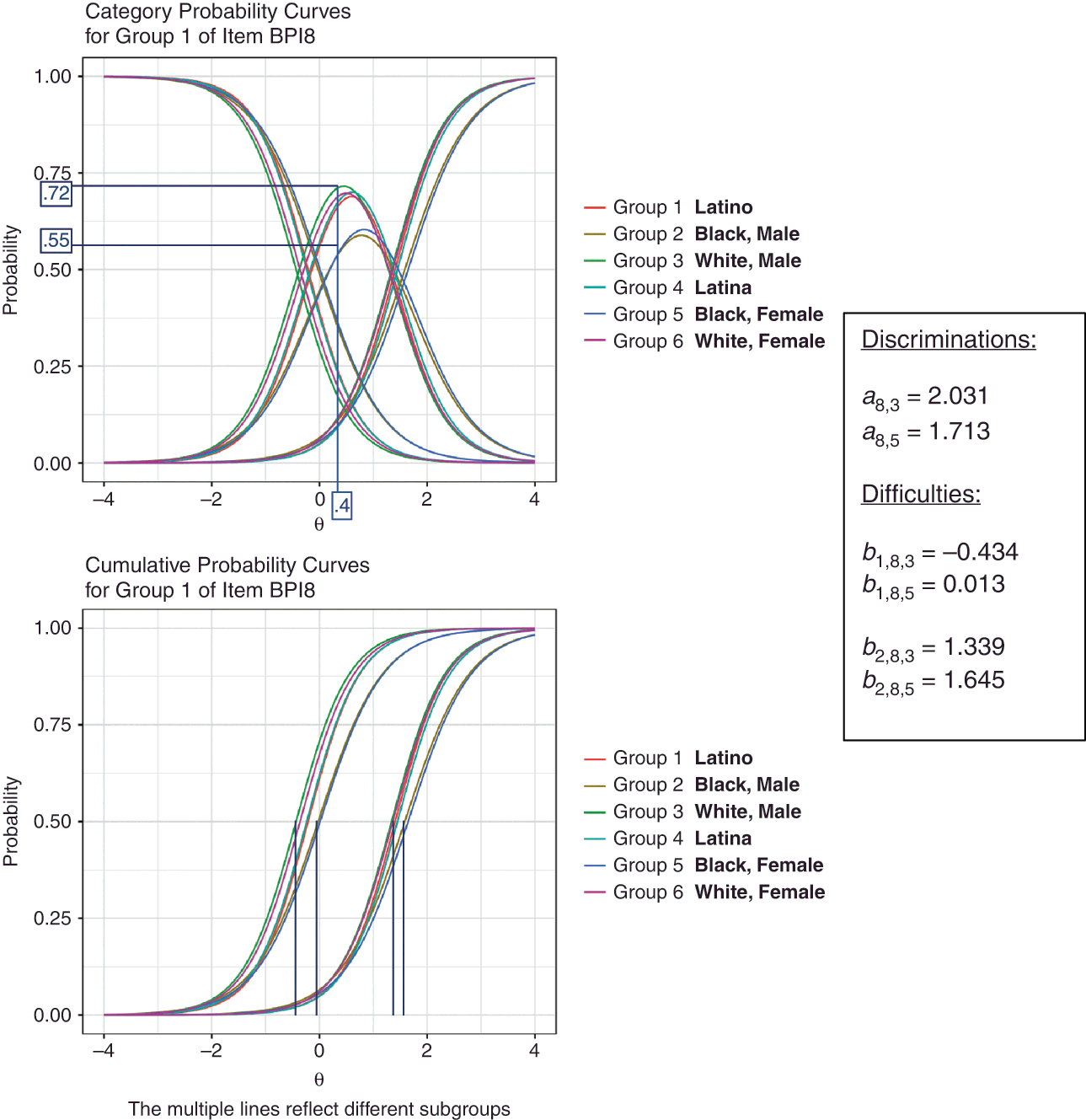

We show in Figure 1 examples of category probability curves (top graph) and cumulative probability curves (bottom graph), calculated for a range of the latent construct (

![]() ) from −3 to 3 and using

) from −3 to 3 and using

![]() ,

,

![]() , and

, and

![]() . Recall that the category probability curves show the probabilities of choosing each of an item’s categories, conditional of a given latent level of the construct. The latent construct is represented on the x-axis, and the category probabilities sum to one at each level of the latent construct. The cumulative probabilities are sums of category probabilities. The category and cumulative probabilities are identical for the top (third) category. The cumulative probability for the middle (second) category is the sum of the category probabilities for the second and third categories. The cumulative probability for the bottom (first) category is always one (thus not shown in the graph).

. Recall that the category probability curves show the probabilities of choosing each of an item’s categories, conditional of a given latent level of the construct. The latent construct is represented on the x-axis, and the category probabilities sum to one at each level of the latent construct. The cumulative probabilities are sums of category probabilities. The category and cumulative probabilities are identical for the top (third) category. The cumulative probability for the middle (second) category is the sum of the category probabilities for the second and third categories. The cumulative probability for the bottom (first) category is always one (thus not shown in the graph).

Figure 1 Illustration of Category Probability Curves and Cumulative Probability Curves

Note. The category probability curves (top graph) show the probabilities of choosing each of an item’s categories, conditional on a given latent level of the construct. The cumulative probability curves (bottom graph) are sums of category probabilities. In the graphs, the probabilities are calculated using Equation 1 for a range of the latent construct (

![]() ) from −3 to 3 and using ɑ = 1.5, b1 = −1, and b2 = 0. The latent construct is represented on the x-axis, and the category probabilities sum to one at each level of the latent construct. The category and cumulative probabilities are identical for the top (3rd) category. The cumulative probability for the middle (2nd) category is the sum of the category probabilities for the 2nd and 3rd categories. The cumulative probability for the bottom (1st) category is always one (thus not shown in the graph).

) from −3 to 3 and using ɑ = 1.5, b1 = −1, and b2 = 0. The latent construct is represented on the x-axis, and the category probabilities sum to one at each level of the latent construct. The category and cumulative probabilities are identical for the top (3rd) category. The cumulative probability for the middle (2nd) category is the sum of the category probabilities for the 2nd and 3rd categories. The cumulative probability for the bottom (1st) category is always one (thus not shown in the graph).

Beginning with the top graph, notice that the shapes of the three curves are as we would expect for ordinal items. These shapes reflect the graded response model’s assumptions of ordinality. That is, the probability of choosing the lowest category (

![]() ) is high for those with the least amount of the latent construct,

) is high for those with the least amount of the latent construct,

![]() , and then falls off to zero at the highest levels of the latent construct. The probability of the highest category (

, and then falls off to zero at the highest levels of the latent construct. The probability of the highest category (

![]() ) follows the reverse pattern. The probability of the middle category (

) follows the reverse pattern. The probability of the middle category (

![]() ) rises and then falls. As noted earlier, at each level of

) rises and then falls. As noted earlier, at each level of

![]() (i.e., for persons located at various levels of the latent construct) these three category probabilities sum to one. The item parameters determine the shape and location of the lines. The higher the level of discrimination, the more peaked (narrower and higher) are the middle-category curves and the steeper are the downward and upward slopes of the curves for the lowest and highest categories. For the graded response model, the average of adjacent thresholds determines where the middle categories peak. In Figure 1, the middle category peaks at

(i.e., for persons located at various levels of the latent construct) these three category probabilities sum to one. The item parameters determine the shape and location of the lines. The higher the level of discrimination, the more peaked (narrower and higher) are the middle-category curves and the steeper are the downward and upward slopes of the curves for the lowest and highest categories. For the graded response model, the average of adjacent thresholds determines where the middle categories peak. In Figure 1, the middle category peaks at

![]() since

since

![]() .

.

In the bottom graph of cumulative probabilities, the curve on the right repeats the curve for the highest category (

![]() ) from the top graph. The curve on the left is the sum of the middle and highest curves (

) from the top graph. The curve on the left is the sum of the middle and highest curves (

![]() and

and

![]() ) from the top graph. For both curves, the discrimination parameter, again acts as a slope, determining the steepness of the curves (i.e., higher discriminations reflect steeper slopes). Because each item has a single discrimination parameter, the two curves in the bottom graph have the same shape. The difficulties determine the positioning of these curves, from left to right. If the thresholds are farther apart, then the curves will be more spread out. Specifically, the difficulties,

) from the top graph. For both curves, the discrimination parameter, again acts as a slope, determining the steepness of the curves (i.e., higher discriminations reflect steeper slopes). Because each item has a single discrimination parameter, the two curves in the bottom graph have the same shape. The difficulties determine the positioning of these curves, from left to right. If the thresholds are farther apart, then the curves will be more spread out. Specifically, the difficulties,

![]() , fall at the level of

, fall at the level of

![]() at which a person is equally likely to choose the sets of higher versus lower categories. We show this property using dashed lines that cross the y-axis at probability of 0.5 and cross the x-axis at the two difficulty levels (−1 and 0).

at which a person is equally likely to choose the sets of higher versus lower categories. We show this property using dashed lines that cross the y-axis at probability of 0.5 and cross the x-axis at the two difficulty levels (−1 and 0).

2.2 The Graded Response Model: Item Factor Analysis Parameterization

Many readers will be familiar with the standard representation of factor analysis in which a continuous item is regressed upon the latent factor, such as

(2)

(2)

Here,

![]() is the intercept,

is the intercept,

![]() is the factor loading,

is the factor loading,

![]() is the latent factor score for a specific individual, and

is the latent factor score for a specific individual, and

![]() is the residual for the ith item.

is the residual for the ith item.

For categorical items, multiple thresholds (

![]() ) replace the single intercept, being motivated in a way akin to ordinal logistic regression (Reference LongLong, 1997), whereby someone who would have responded above the threshold on a continuous item chooses the corresponding category of a multi-category item; that is,

) replace the single intercept, being motivated in a way akin to ordinal logistic regression (Reference LongLong, 1997), whereby someone who would have responded above the threshold on a continuous item chooses the corresponding category of a multi-category item; that is,

Mplus estimates models with this IFA parameterization, and it is straightforward to calculate the IRT parameters using the following formulas (Reference Asparouhov and MuthénAsparouhov & Muthén, 2020),

(3)

(3)

(4)

(4)

The IFA and IRT parameterizations of the graded response model relate as follows,

where

![]() and the other parameters are as defined previously. Note that in Eqs. (3) and (4) we have assumed that the factor mean and variance are fixed at zero and one, respectively. In the alignment context, some groups have estimated means and variances. For these groups, our R package relies upon Eqs. (21) and (22) from Reference Asparouhov and MuthénAsparouhov and Muthén (2020) when converting between IFA and IRT parameterizations. Note also that when the individuals’ locations on the latent construct are saved from Mplus’ default alignment method specification, the locations will reflect the IFA parameterization rather than the IRT parameterization.

and the other parameters are as defined previously. Note that in Eqs. (3) and (4) we have assumed that the factor mean and variance are fixed at zero and one, respectively. In the alignment context, some groups have estimated means and variances. For these groups, our R package relies upon Eqs. (21) and (22) from Reference Asparouhov and MuthénAsparouhov and Muthén (2020) when converting between IFA and IRT parameterizations. Note also that when the individuals’ locations on the latent construct are saved from Mplus’ default alignment method specification, the locations will reflect the IFA parameterization rather than the IRT parameterization.

2.3 Statistical Identification of Parameter Estimates

Before turning to the alignment method, we discuss the constraints that must be placed on any IFA and IRT model (including outside of the alignment method context) in order to statistically identify the scale of the latent construct. In doing so, we again emphasize that whereas some psychologists may be used to focusing on factor loadings, with categorical items it is also important to consider the factor intercepts/thresholds. In a multi-group analysis, it is the invariance of these factor intercepts/thresholds that is required to fairly compare the groups’ mean levels on the latent variable. Invariance of factor loadings is what is required to fairly consider how the latent variable associates with other variables across groups. For statistical identification purposes, we must therefore consider both means and variances in the distribution of the latent variable.

As in factor analysis with continuous items, recall as well that the reason we need identifying constraints is that the metric of the latent variable is unknown. When modeling the factor means as well as the factor variances, we must establish two aspects of the metric: (a) its origin, meaning the starting point of the metric, and (b) its units, meaning how much “one more” represents. To set the origin, a parameter is constrained to be zero. To set the units, a parameter is constrained to be one. The constraint of zero is placed either on a latent mean or a threshold/difficulty. The constraint of one is placed either on the latent variance or a loading/discrimination. Although the choice of which parameter to constrain typically produces equivalent results for a single-group IFA or IRT model, the choices of which parameters to constrain have greater implications in the multi-group measurement invariance context. We discuss in the next section how the alignment method achieves statistical identification.

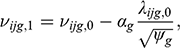

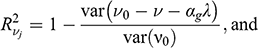

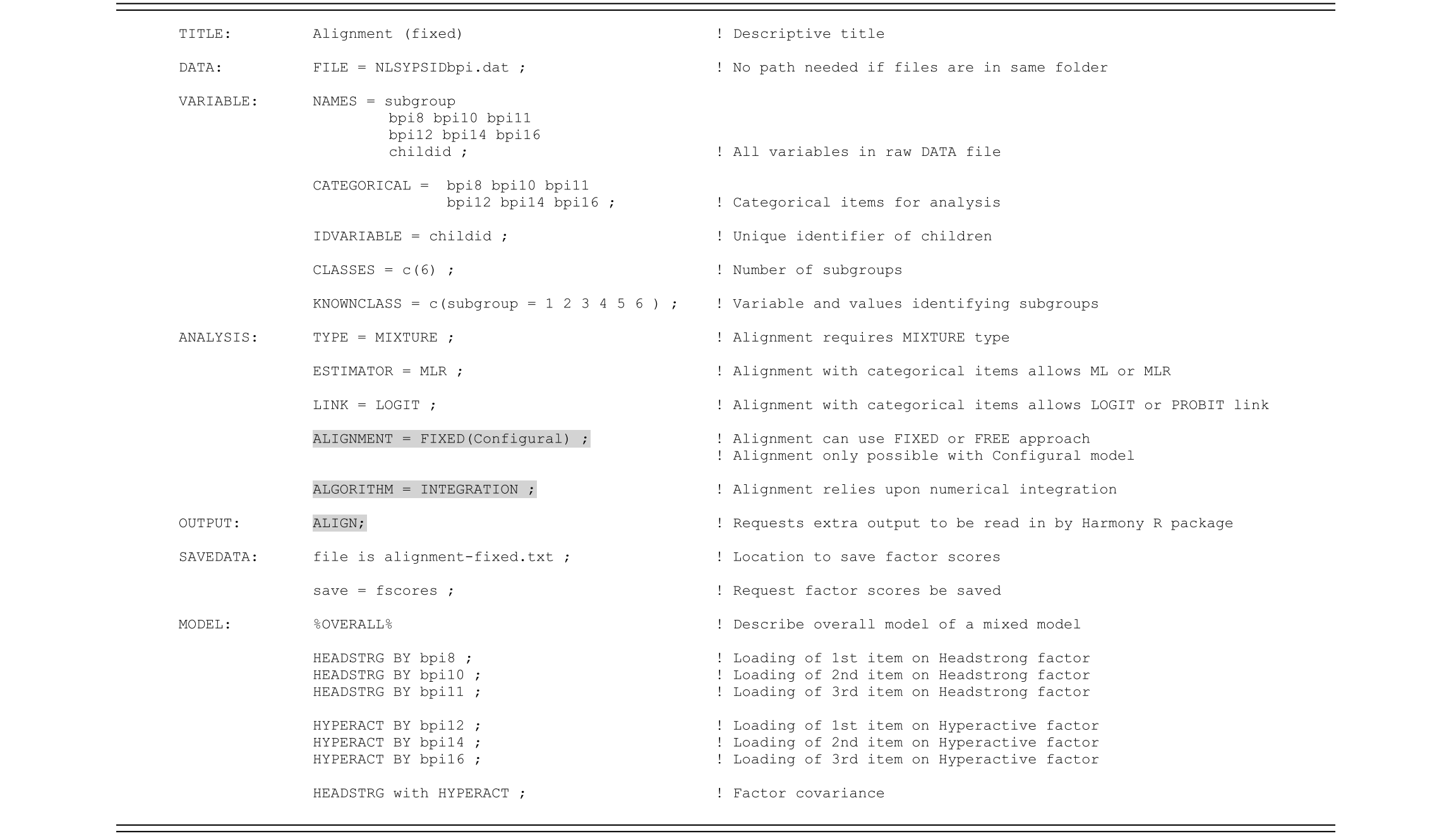

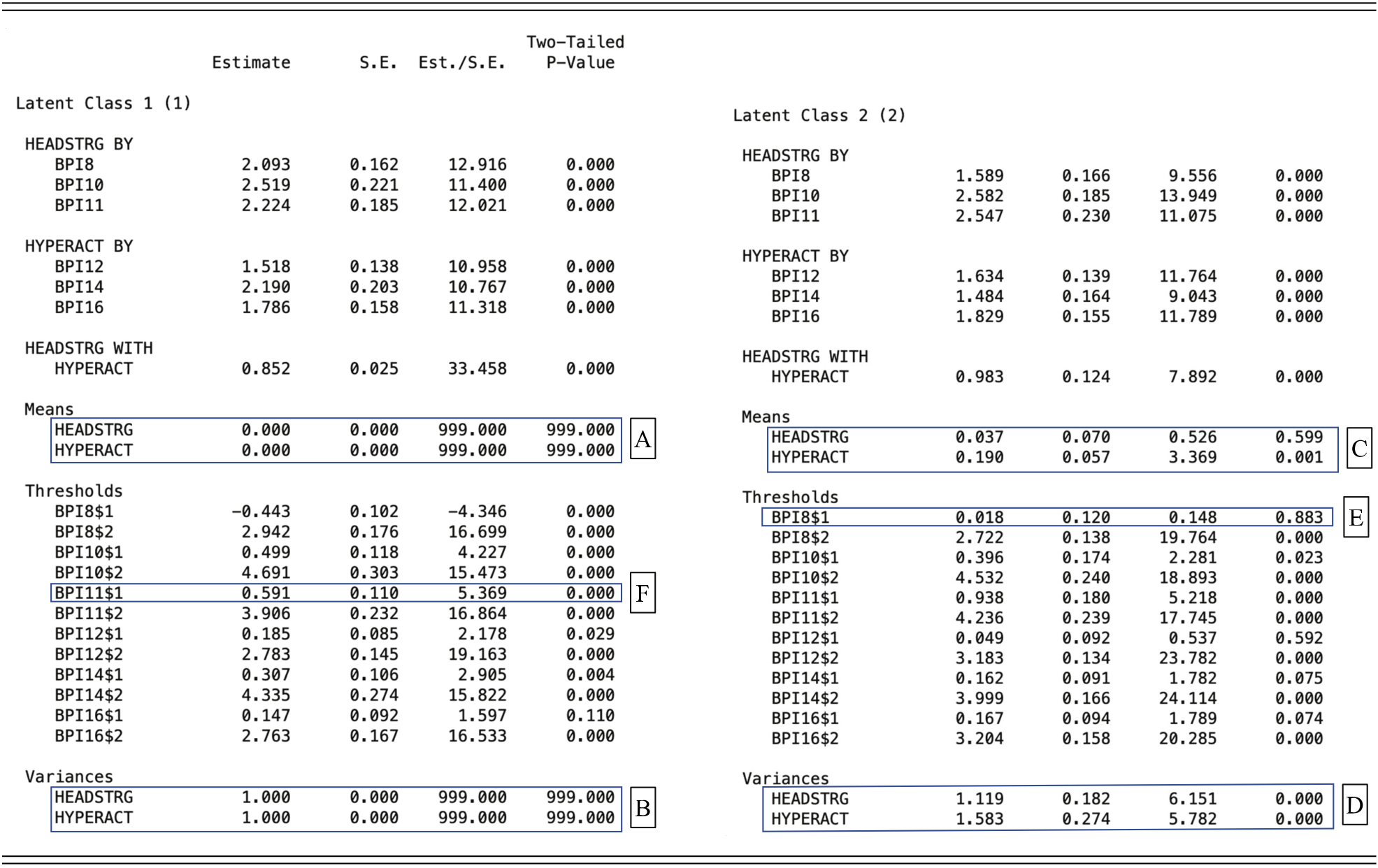

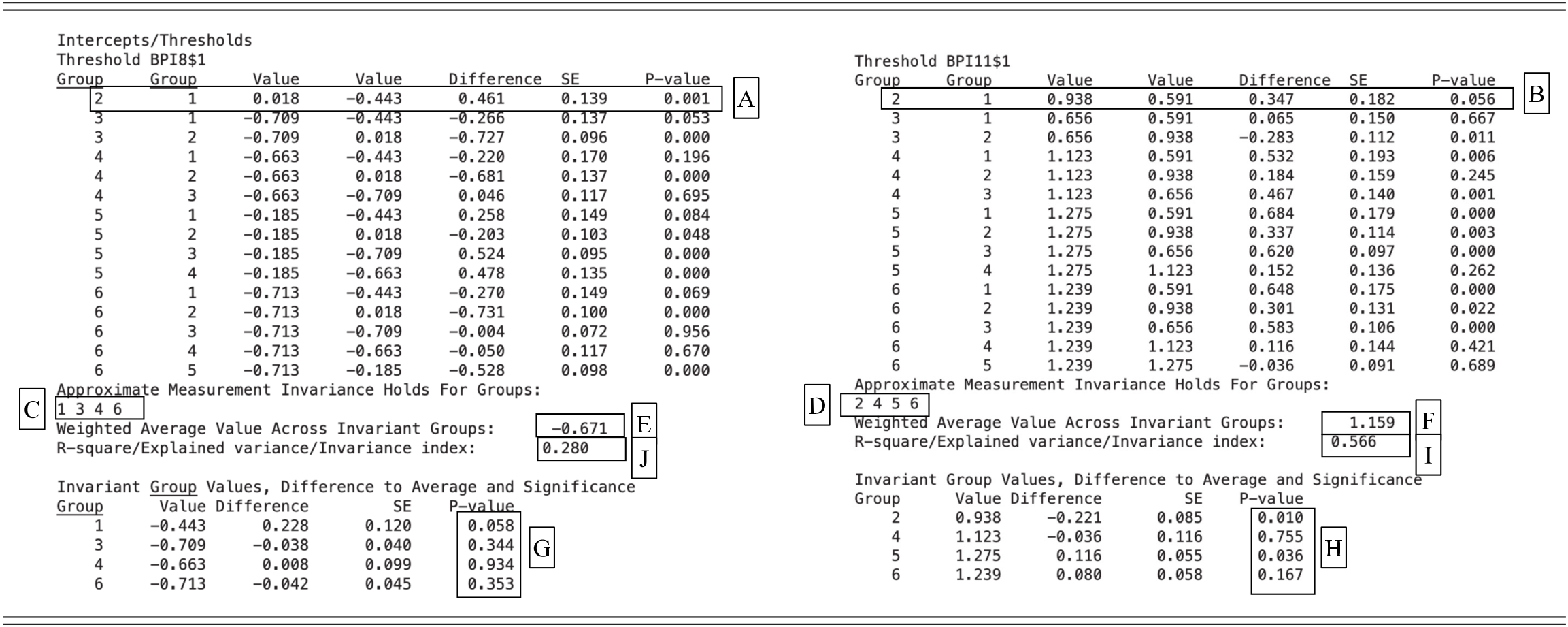

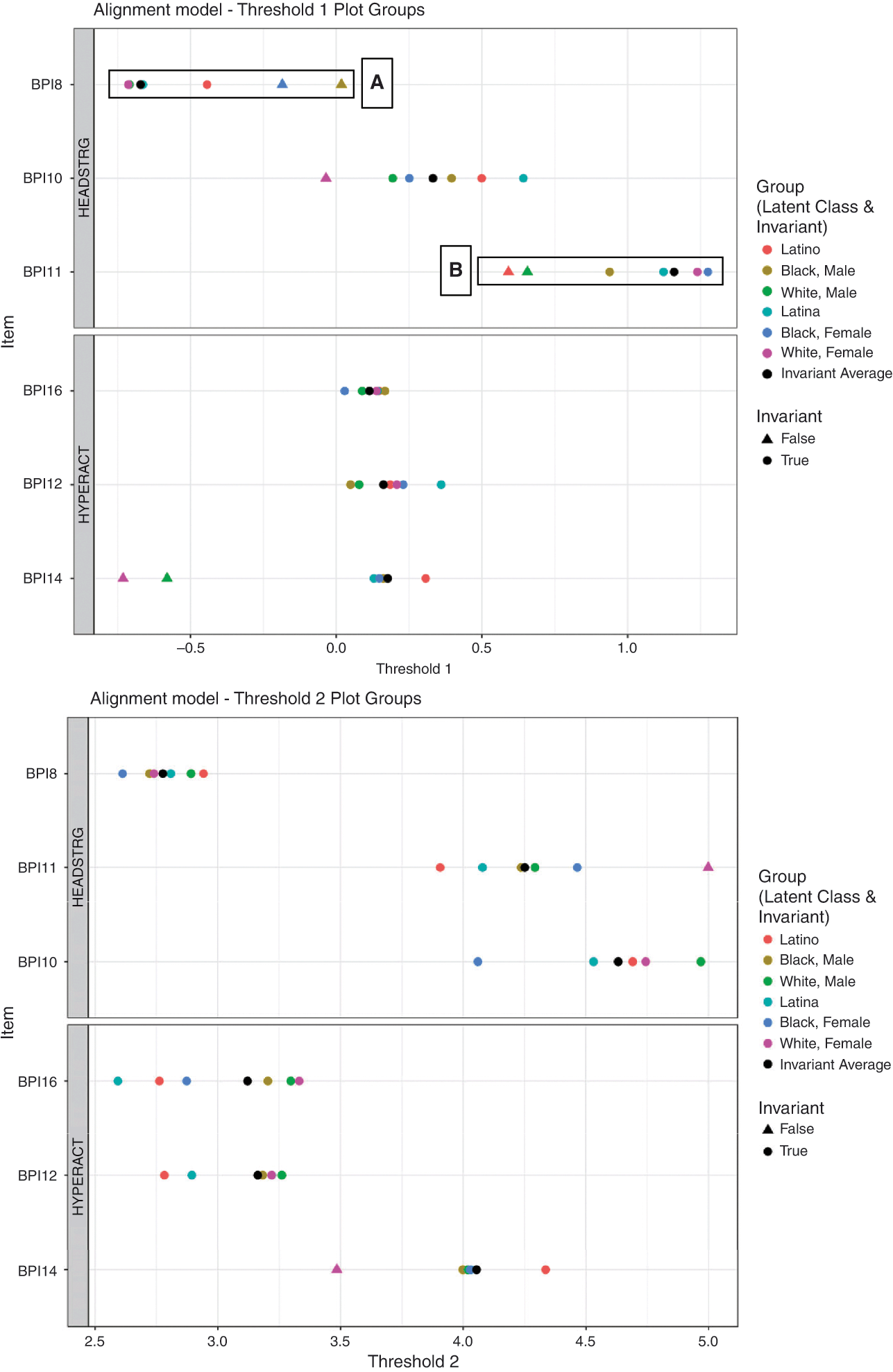

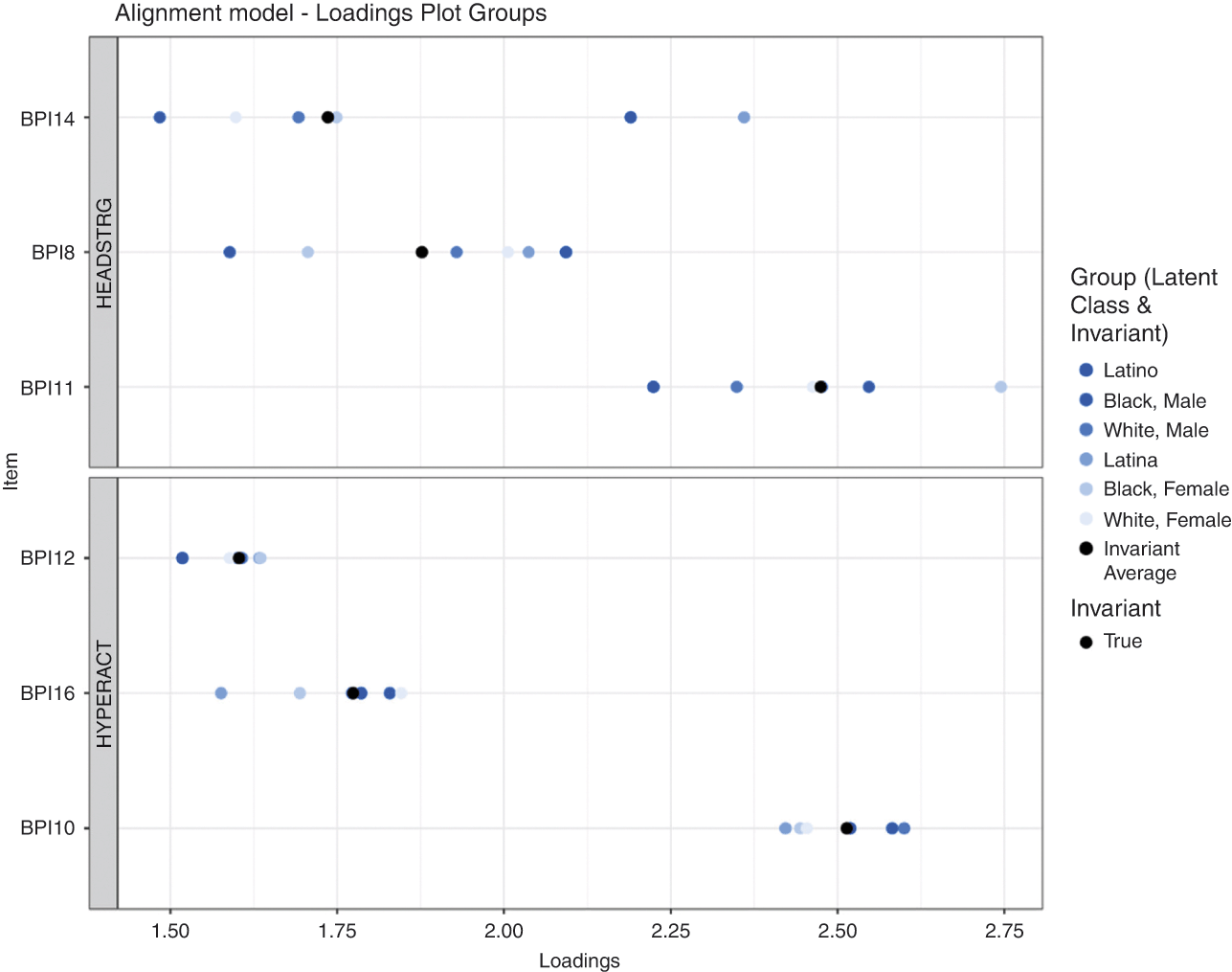

2.4 The Alignment Method