1 Introduction

This Element is about agent-based macroeconomics in general, and in particular about a family of evolutionary Agent-Based Models (ABMs), which we call ‘Schumpeter meeting Keynes’ (or K+S). There are four fundamental features of the K+S family of agent-based models. The first is the complementarity between a Schumpeterian engine of innovation and a Keynesian engine of demand generation. Second, the models entail the intrinsic duality of wages, which are an item of cost for individual firms but also a component of aggregate demand. Third, there is a permanent duality between the labour-shedding effects of technical change via productivity improvements and its employment-generating effects via the introduction of new products. Finally, fourth, ubiquitous institutions shape the behavioural rules of individual agents and their patterns of interaction. As with all well-constructed ABMs, the K+S models are populated by a multiplicity of agents which interact on the grounds of quite simple, empirically based, behavioural rules, whose collective outcomes are ‘emergent properties’ which cannot be imputed to the intention of any single agent.

This modelling perspective would sound quite straightforward were it not for the dismal state of current macroeconomics. Given the latter, it might be appropriate to start by arguing why we need, in the first place, a ‘macro’ level of analysis well nested into valid microfoundations: something perfectly obvious in all other natural and social disciplines, but not in economics. Next, we shall place our presentation of ABMs against the background of the vicissitudes of modern macroeconomics, as prolegomena to the core of this Element, the K+S family of macro ABMs.

With the notable exception of a good deal of contemporary economics, almost all scientific disciplines, both natural and social ones, distinguish between ‘lower’, more micro, levels of description of whatever phenomenon, and ‘higher’ ones, regarding collective outcomes, which are typically not isomorphic to the former.Footnote 1 So, in physics, thermodynamics is not built upon the kinetic properties of some ‘representative’ or ‘average’ molecule! And even more so in biology, ethology, or medicine. This is a fundamental point repeatedly emphasised by Kirman (2016) and, outside our discipline, by Anderson (1972) and Prigogine (1980), among a few outstanding others. The basic epistemological notion is that the aggregate of interacting entities yields emergent properties, which cannot be mapped down to the (conscious or unconscious) behaviours of some identifiable underlying components.Footnote 2 This is so obvious to natural scientists that it would be an insult to remind them that the dynamics of a beehive cannot be summarised by the dynamic adjustment of a ‘representative bee’ (the example is discussed, again, in Kirman, 2016). The relation between ‘micro’ and ‘macro’ has been at the very centre of all social sciences since their inception. Think of one of the everlasting questions, namely the relationship between agency and structure, which is at the core of most interpretations of social phenomena. Or, nearer to our concerns here, consider the (often misunderstood) notion of Adam Smith’s invisible hand: this is basically a proposition about the lack of isomorphism between the greediness of individual butchers and bakers, on the one hand, and the relatively orderly delivery of meat and bread across markets, on the other.
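A much weaker point than emergence, but one that already undermines the ‘representative agent’, can be made in a few lines of code: even without any interaction among agents, averaging what heterogeneous agents do under a nonlinear behavioural rule differs from what the ‘average’ agent would do. The sketch below is purely illustrative. The concave rule `consumption` (10 times the square root of income) and the log-normal income distribution are hypothetical assumptions, not features of the K+S models.

```python
import math
import random


def consumption(income: float) -> float:
    """Hypothetical concave behavioural rule (illustrative assumption only)."""
    return 10.0 * math.sqrt(income)


def aggregate_vs_representative(incomes: list[float]) -> tuple[float, float]:
    """Return (average of individual outcomes, outcome of the average agent)."""
    # What the heterogeneous population actually does, on average.
    mean_of_outcomes = sum(consumption(y) for y in incomes) / len(incomes)
    # What a fictitious 'representative' agent endowed with mean income would do.
    mean_income = sum(incomes) / len(incomes)
    outcome_of_mean = consumption(mean_income)
    return mean_of_outcomes, outcome_of_mean


if __name__ == "__main__":
    random.seed(42)
    # Heterogeneous incomes drawn from an assumed log-normal distribution.
    incomes = [random.lognormvariate(3.0, 1.0) for _ in range(10_000)]
    population, representative = aggregate_vs_representative(incomes)
    print(f"average of individual consumption:  {population:.2f}")
    print(f"consumption of the 'average' agent: {representative:.2f}")
```

Because the rule is concave, Jensen's inequality guarantees that the first number is below the second whenever incomes differ; the two coincide only when all agents are identical. Genuine emergence, driven by interactions, goes well beyond this purely statistical gap.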

Unfortunately, what is obvious to the scientific community is unknown to, or ignored by, current macroeconomics. Indeed, after a fruitful infancy in which the early Keynesian macroeconomic theories focused on the laws of motion of capitalist dynamics, also embedding some notions of disequilibrium and coordination failures, the discipline took a perverse path in the 1970s, relying on models grounded on fictitious rational representative agents in a pathetic attempt to circumvent aggregation and coordination problems. Such fraudulent microfoundations shrink the macro level to the optimising behaviour of one agent, thus losing all the complex dynamics emerging when one moves to higher levels. The Dark Age of macroeconomics reached the abyss with the Great Recession of 2008, the biggest downturn to hit developed economies since 1929. Not only was representative-agent macroeconomic theory unable to explain what happened in 2008, but it was instrumental in the crisis. Indeed, if macroeconomic models are grounded on a lonely agent, how could one study the rise of income inequality and financialisation which paved the way to the subprime mortgage crisis? A new macroeconomic paradigm is thus urgently needed.

In the new alternative paradigm, which inspires this Element, macroeconomics should consider the economy as a complex, evolving system, an ecology populated by heterogeneous agents (e.g. firms, workers, banks) whose far-from-equilibrium local interactions yield some collective order, even if the structure of the system continuously changes (more on that in Farmer & Foley, 2009; Kirman, 2010b, 2016; Rosser, 2011; Dosi, 2014, 2023; Dosi & Virgillito, 2017; Dosi & Roventini, 2019). In such a framework, first, more is different (Anderson, 1972): to repeat, there is no isomorphism between the micro- and macroeconomic levels, and higher levels of aggregation can lead to the emergence of new phenomena (e.g. business cycles and self-sustained growth), new statistical regularities (e.g. Kaldor–Verdoorn and Okun’s laws), and completely new structures (e.g. new firms, new industries, new markets, and new institutions).

Second, the economic system exhibits self-organised criticality: imbalances can build up over time, leading to the emergence of tipping points which can be triggered by apparently innocuous shocks. (This is straightforward in climate change, see Steffen et al., 2018; with regard to economics, see Bak et al., 1992 and Battiston et al., 2016.)
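The canonical toy illustration of self-organised criticality is the Bak–Tang–Wiesenfeld sandpile (it is not the production-and-inventory model of Bak et al., 1992, cited above). A minimal sketch follows; grid size, number of grains, and seed are our own illustrative assumptions. Grains are dropped one at a time on a square grid, any site holding four or more grains topples by passing one grain to each neighbour, and grains pushed past the edge are lost. Identical, tiny shocks (one grain each) produce avalanches of wildly different sizes.

```python
import random

TOPPLE_AT = 4  # a site topples when it holds 4 grains (one per neighbour)


def sandpile_avalanches(size: int = 20, grains: int = 5_000, seed: int = 0) -> list[int]:
    """Bak-Tang-Wiesenfeld sandpile on a size x size grid with open boundaries.

    Returns, for each dropped grain, the avalanche size (number of topplings).
    """
    rng = random.Random(seed)
    grid = [[0] * size for _ in range(size)]
    avalanches = []
    for _ in range(grains):
        # The 'innocuous' shock: a single grain dropped on a random site.
        i, j = rng.randrange(size), rng.randrange(size)
        grid[i][j] += 1
        topplings = 0
        unstable = [(i, j)]
        while unstable:
            x, y = unstable.pop()
            if grid[x][y] < TOPPLE_AT:
                continue  # already relaxed by an earlier toppling
            grid[x][y] -= TOPPLE_AT
            topplings += 1
            if grid[x][y] >= TOPPLE_AT:
                unstable.append((x, y))
            # One grain to each neighbour; grains leaving the grid are lost.
            for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if 0 <= nx < size and 0 <= ny < size:
                    grid[nx][ny] += 1
                    if grid[nx][ny] >= TOPPLE_AT:
                        unstable.append((nx, ny))
        avalanches.append(topplings)
    return avalanches


if __name__ == "__main__":
    sizes = sandpile_avalanches()
    quiet = sum(1 for s in sizes if s == 0) / len(sizes)
    print(f"share of grains causing no toppling: {quiet:.2f}")
    print(f"largest avalanche (topplings):       {max(sizes)}")
```

Once the pile has organised itself into its critical state, most drops cause no toppling at all while a few set off system-wide avalanches, and no single grain is ‘responsible’ for the large ones.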

Third, in a complex world, deep uncertainty (Keynes, 1921, 1936; Knight, 1921; Dosi, 2023) is so pervasive that agents cannot build the ‘right’ model of the economy, let alone share it among themselves or with the modeller (Kirman, 2014).

Fourth, behaviours typically rely on heuristics (Simon, 1955, 1959; Cyert & March, 1992; Dosi, 2023), which turn out to be a robust set of tools for inference and action (Gigerenzer & Brighton, 2009; Haldane, 2012; Dosi et al., 2020a).

Of course, fifth, local interactions among purposeful agents cannot be generally assumed to lead to efficient outcomes or optimal equilibria.

Finally, from a normative point of view, when complexity is involved, policy makers ought to aim at resilient systems, which often require redundancy and degeneracy (Edelman & Gally, 2001). To put it provocatively: would anyone fly on a plane designed by a team of New Classical macroeconomists, who sound much like the early aerodynamics scholars who conclusively argued that, in equilibrium, airplanes cannot fly?

Once complexity is seriously taken into account in macroeconomics, one of course has to rule out Dynamic Stochastic General Equilibrium (DSGE) models. A natural alternative candidate, we shall argue, is Agent-Based Computational Economics (ACE; Tesfatsion, 2006; LeBaron & Tesfatsion, 2008; Fagiolo & Roventini, 2017; Caverzasi & Russo, 2018; Dawid & Delli Gatti, 2018), which straightforwardly embeds heterogeneity, bounded rationality, endogenous out-of-equilibrium dynamics, and direct interactions among economic agents. In so doing, ACE provides an alternative way to build macroeconomic models with genuine microfoundations, which take seriously the problem of aggregation and are able to jointly account for the emergence of self-sustained growth and business cycles punctuated by major crises. Furthermore, on the normative side, due to the flexibility of their assumptions regarding agent behaviours and interactions, ACE models represent an exceptional laboratory to design policies and to test their effects on macroeconomic dynamics.

As recalled by Haldane and Turrell (2019), the first prototypes of agent-based models were developed by Enrico Fermi in the 1930s in order to study the movement of neutrons. (Of course, Fermi, a Nobel laureate physicist, never thought of building a model sporting a representative neutron!) With the adoption of Monte Carlo methods, ABMs flourished in many disciplines, ranging from physics, biology, ecology, and epidemiology all the way to the military (more on that in Turrell, 2016). Recent years have also seen a surge of agent-based models in macroeconomics (see Fagiolo & Roventini, 2012, 2017 and Dawid & Delli Gatti, 2018 for surveys): an increasing number of papers involving macroeconomic ABMs have also addressed the policy domain concerning, for example, fiscal policy, monetary policy, macroprudential policy, labour market policy, and climate-change policy. And ABMs have increasingly become part of the policy toolkit in, for example, central banks and other institutions, in ways complementary to older macroeconomic models (Haldane & Turrell, 2019).Footnote 3

The rest of the Element is organised as follows. In Section 2, we provide a short story of macroeconomics, focusing on the problem of aggregation and, more generally, on its relationship with microeconomics. Section 3 discusses the insurmountable limits of neoclassical macroeconomics, focusing on its latest incarnation, the DSGE models. In Section 4 we introduce agent-based macroeconomics, and in Section 5 we present the family of Schumpeter meeting Keynes agent-based models. The empirical validation of the K+S models is performed in Section 6, while the impacts of different combinations of innovation, industrial, fiscal, and monetary policies for different labour-market regimes and inequality scenarios are assessed in Section 7. Finally, in Section 8 we conclude with a brief discussion of the future of macroeconomics.

2 A Dismal Short Story of Macroeconomics: From Robinson Crusoe to Complex Evolutionary Economies

The relationship between the micro and the macro is at the core of the (lack of) evolution of macroeconomics, and it plays a fundamental role in explaining why the 2008 financial crisis was also a crisis for macroeconomic theory (Kirman, 2010b). Indeed, standard DSGE models not only failed to forecast the crisis, but they did not even admit the possibility of such an event, leaving policy makers without policy solutions (Krugman, 2011). In this section, we briefly outline the path that macroeconomics has followed over the last ninety years (Sections 2.1–2.4).Footnote 4 In so doing, we will shed light on the dismal state of the discipline, which appears incapable of explaining the very phenomena – that is, crises and depressions – for which it was born (see Section 2.5).

2.1 The Happy Childhood of Macroeconomics

Roughly speaking, macroeconomics first saw the light of day with Keynes. For sure, enlightening analyses came before, including Wicksell’s, but the distinctiveness of macro levels of interpretation came with him. Indeed, up to the 1970s, there were basically two ‘macros’.

One was equilibrium growth theories. While it is the case that, for example, models à la Solow invoked maximising behaviours in order to establish equilibrium input intensities, no claim was made that such allocations were the work of any ‘representative agent’, in turn taken to be the ‘synthetic’ (??) version of some underlying General Equilibrium (GE). By the same token, the distinction between positive (that is, purportedly descriptive) and normative models was, before Lucas and his companions, absolutely clear to practitioners. Hence, the prescriptive side was kept distinctly separate. Ramsey (1928)-type models, asking what a benevolent central planner would do, were reasonably kept apart from any question on the ‘laws of motion’ of capitalism, à la Harrod (1939), Domar (1946), Kaldor (1957), and indeed Solow (1956). Finally, for better or for worse, technological change was kept separate from the mechanisms of resource allocation: the famous ‘Solow residual’ was, as is well known, the statistical counterpart of the drift in growth models with exogenous technological change.
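The growth-accounting arithmetic behind the ‘Solow residual’ can be written out in three lines. This is the standard textbook derivation, assuming a Cobb–Douglas aggregate production function with constant returns to scale and a capital elasticity α proxied by the capital share of income; taking logs and differentiating with respect to time gives the second line, and rearranging gives the residual:

```latex
\begin{aligned}
Y_t &= A_t\,K_t^{\alpha}\,L_t^{1-\alpha}, \\
\frac{\dot Y_t}{Y_t} &= \frac{\dot A_t}{A_t} + \alpha\,\frac{\dot K_t}{K_t} + (1-\alpha)\,\frac{\dot L_t}{L_t}, \\
\underbrace{\frac{\dot A_t}{A_t}}_{\text{Solow residual}} &= \frac{\dot Y_t}{Y_t} - \alpha\,\frac{\dot K_t}{K_t} - (1-\alpha)\,\frac{\dot L_t}{L_t}.
\end{aligned}
```

In the growth model itself, A_t = A_0 e^{gt} grows at the exogenous rate g, which is the drift that the measured residual is meant to capture.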

Second, in some fuzzy land between purported GE ‘microfoundations’ and equilibrium growth theories, there lived for at least three decades a macroeconomics sufficiently ‘Keynesian’ in spirit and quite neoclassical in terms of tools. It was the early ‘neo-Keynesianism’ (also known as the Neoclassical Synthesis) – pioneered by Hicks (1937), and shortly thereafter by Modigliani, Samuelson, Patinkin, and a few other American ‘Keynesians’ – whom Joan Robinson contemptuously dubbed ‘bastard Keynesians’. It is the short-term macro which students used to learn up to the 1980s, with IS-LM curves, which are meant to capture the aggregate relations between money supply and money demand, interest rates, savings, and investments; Phillips curves on the labour market; and a few other curves as well. In fact, the curves were (are) a precarious compromise between the notion that the economy is supposed to be in some sort of equilibrium – albeit of a short-term nature – and the notion of a more ‘fundamental’ equilibrium path towards which the economy is bound to tend in the longer run.

That was a kind of mainstream, especially on the other side of the Atlantic. There was also a group of thinkers whom we could call (as they called themselves) genuine Keynesians. They were predominantly in Europe, especially in the UK and in Italy: see Pasinetti (1974, 1983) and Harcourt (2007) for an overview.Footnote 5 The focus was solely on the basic laws of motion of capitalist dynamics. These include the drivers of aggregate demand; the multiplier leading from the ‘autonomous’ components of demand, such as government expenditures and exports, to aggregate income; the accelerator, linking aggregate investment to past variations in aggregate income itself; and the relation between unemployment, wage/profit shares, and investments.Footnote 6 Indeed, such a stream of research is alive and progressing, refining the modelling of the ‘laws of motion’ and their supporting empirical evidence: see Lavoie (2009), Lavoie and Stockhammer (2013), and Storm and Naastepad (2012a, 2012b), among quite a few others.
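The multiplier and the accelerator mentioned above can be combined in a few lines. The sketch below is a textbook Samuelson–Hicks-type recursion, not the formulation of any of the authors cited: consumption depends on last period's income with propensity `c`, induced investment on the last change in income with accelerator coefficient `v`, and `autonomous` is a hypothetical series of autonomous demand (e.g. government expenditures plus exports). The parameter values in the usage block are illustrative assumptions.

```python
def multiplier_accelerator(c: float, v: float, autonomous: list[float],
                           y0: float, y1: float) -> list[float]:
    """Simulate Y_t = c*Y_{t-1} + v*(Y_{t-1} - Y_{t-2}) + A_t from two initial incomes."""
    income = [y0, y1]
    for a in autonomous:
        consumption = c * income[-1]                # multiplier channel
        investment = v * (income[-1] - income[-2])  # accelerator channel
        income.append(consumption + investment + a)
    return income


def steady_state(c: float, a: float) -> float:
    """Long-run income A / (1 - c): autonomous demand times the Keynesian multiplier."""
    return a / (1.0 - c)


if __name__ == "__main__":
    # Start at the steady state for A = 100, then raise autonomous demand to 110.
    path = multiplier_accelerator(c=0.8, v=0.5, autonomous=[110.0] * 40, y0=500.0, y1=500.0)
    print([round(y, 1) for y in path[:12]])
    print("new steady state:", round(steady_state(0.8, 110.0), 1))
```

With these values income overshoots the new steady state of 550 before converging through damped oscillations; with `v = 0` the adjustment is monotonic, while an accelerator coefficient of 1 or more prevents convergence altogether.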

Indeed, a common characteristic of the variegated contributions from ‘genuine Keynesianism’, often known also as post-Keynesian, is the scepticism about any microfoundation, to its own merit and also to its own peril. Part of the denial comes from a healthy rejection of methodological individualism and its axiomatisation as the ultimate primitive of economic analysis. Part of it, in our view, comes from the misleading notion that microfoundations necessarily mean methodological individualism (as if the interpretation of the working of a beehive had to necessarily build upon the knowledge of ‘what individual bees think and do’, or indeed ‘should do’). On the contrary, microfoundations might well mean how the macro structure of the beehive influences the distribution of the behaviours of the bees, a sort of macrofoundation of the micro. All this entails a major terrain of dialogue between the foregoing stream of Keynesian models and agent-based ones.

Now, back to the roots of modern macroeconomics. The opposite extreme to ‘bastard Keynesianism’ was not Keynesian at all, even if it sometimes took up the IS-LM-Phillips discourse. The best concise synthesis is Friedman (1968). Historically it went under the heading of monetarism, but basically it was the pre-Keynesian view that the economy, left to itself, travels on a unique equilibrium path in both the long and the short run. Indeed, in a barter, pre-industrial economy where Say’s law and the quantity theory of money hold, monetary policy cannot influence the interest rate and fiscal policy completely crowds out private consumption and investment: ‘there is a natural rate of unemployment which policies cannot influence’; see the deep discussion in Solow (2018). Milton Friedman was the obvious ancestor of Lucas and his companions, but he was still quite far from the subsequent axioms, awaiting any empirical proof of plausibility.Footnote 7

2.2 ‘New Classical (??)’ Talibanism and Beyond

What happened next? Starting from the beginning of the 1970s, we think that everything which could get worse got worse, and more: accordingly, we agree with Krugman (2011) and Romer (2016) that macroeconomics plunged into a Dark Age.Footnote 8

First, ‘new classical economics’ (even if the reference to the Classics could not be further off) fully abolished the distinction between the normative and positive domains – between models à la Ramsey and models à la Harrod-Domar, Solow, and so on (notwithstanding the differences amongst the latter). In fact, the striking paradox for theorists who are in good part market talibans is that they start with a model which is essentially of a benign, forward-looking, central planner, and only at the end, by way of an abundant dose of hand-waving, claim that the solution of whatever intertemporal optimisation problem is in fact supported by a decentralised market equilibrium.

Things would be much easier for this approach if one could build a genuine ‘general equilibrium’ model (that is, with many agents, heterogeneous at least in their endowments and preferences). However, this is not possible because of the well-known but ignored theorems of Sonnenschein (1972), Mantel (1974), and Debreu (1974), which show that, beyond continuity, homogeneity, and Walras’ law, individual optimisation imposes essentially no restrictions on aggregate excess demand functions, so that neither uniqueness nor stability of equilibrium can be guaranteed (more in Kirman, 1989). Assuming by construction that the coordination problem is solved by resorting to the ‘representative agent’ fiction is simply a pathetic shortcut which has no theoretical legitimacy (Kirman, 1992).

Anyhow, the ‘New Classical’ restoration went so far as to wash away the distinction between the ‘long term’ and the ‘short term’ – with the latter as the locus where all ‘frictions’, ‘liquidity traps’, Phillips curves, some (temporary!) real effects of fiscal and monetary policies, and so on had precariously survived before. Why would a representative agent endowed with ‘rational’ expectations, and able to solve sophisticated intertemporal optimisation problems from here to infinity, display any friction or distortion in the short run, if competitive markets always clear? We all know the outrageously silly propositions, sold as major discoveries, also associated with an infamous ‘rational expectations revolution’, concerning the ineffectiveness of fiscal and monetary policies and the general property of markets to yield Pareto first-best allocations. (In this respect, of course, it is easier for that to happen if ‘the market’ is one representative agent: coordination and allocation failures would involve serious episodes of schizophrenia by that agent itself!)

While Lucas and Sargent (1978) wrote an obituary of Keynesian macroeconomics, we think that in other times nearly the entire profession would have reacted to such a ‘revolution’ as Bob Solow once did when asked by Klamer (1984) why he did not take the ‘new Classics’ seriously:

Suppose someone sits down where you are sitting right now and announces to me he is Napoleon Bonaparte. The last thing I want to do with him is to get involved in a technical discussion of cavalry tactics at the battle of Austerlitz. If I do that, I am tacitly drawn in the game that he is Napoleon. Now, Bob Lucas and Tom Sargent like nothing better than to get drawn in technical discussions, because then you have tacitly gone along with their fundamental assumptions; your attention is attracted away from the basic weakness of the whole story. Since I find that fundamental framework ludicrous, I respond by treating it as ludicrous — that is, by laughing at it — so as not to fall in the trap of taking it seriously and passing on matters of technique.

The reasons why the profession, and even worse the world at large, took these ‘Napoleons’ seriously, we think, have basically to do with a Zeitgeist where the hegemonic politics was that epitomised by Ronald Reagan and Margaret Thatcher, and their system of beliefs on the ‘magic of the market place’, ‘society does not exist, only individuals do’, et similia. And, tragically, this set of beliefs became largely politically bipartisan, leading to financial deregulation, massive waves of privatisation, tax cuts and surging inequality, and so on. Or think of the disasters produced for decades around the world by the IMF-inspired Washington Consensus, or by the latest waves of austerity and structural-reform policies in the European Union, as another such creed on the magic of markets, the evil of governments, and the miraculous effects of blood, sweat, and tears (Fitoussi & Saraceno, 2013, refer to this as the Berlin–Washington consensus). The point we want to make is that the changes in the hegemonic (macro) theory should be primarily interpreted in terms of the political economy of power relations among social and political groups, with little to write home about as far as ‘advancements’ in the theory itself are concerned ... On the contrary!

The Mariana Trench of fanaticism was reached with Real Business Cycle (RBC) models (Kydland & Prescott, 1982), positing Pareto-optimal business cycles driven by economy-wide technological shocks [sic]. The natural question immediately arising concerns the nature of such shocks. What are they? Are recessions driven by episodes of technological regress (e.g. people going back to washing clothes in rivers or suddenly using candles for lighting)? The candid answer provided by one of the founding fathers of RBC theory is: ‘They’re that traffic out there’ (‘there’ refers to a congested bridge, meaning some mysterious crippling of the ‘invisible hand’; as cited in Romer, 2016: p. 5). Needless to say, the RBC propositions were (and are) not supported by any empirical evidence. But the price paid by macroeconomics for this sort of intellectual trolling was, and still is, huge!

2.3 New Keynesians, New Monetarists and the New Neoclassical Synthesis

Since the 1980s, ‘New Keynesian’ economists, instead of following Solow’s advice,Footnote 9 basically accepted the New Classical and RBC framework and worked on the edges of auxiliary assumptions. So, they introduced monopolistic competition and a plethora of nominal and real rigidities into models with representative-agent cum rational-expectations microfoundations. New Keynesian models restored some basic results which were undisputed before the New Classical Middle Ages, such as the non-neutrality of money. However, the price paid for talking only to ‘New Classicals’ and RBC talibans was high. The methodological infection was so deep that Mankiw and Romer (1991) claimed that New Keynesian macroeconomics should be renamed New Monetarist macroeconomics, and De Long (2000) discussed ‘the triumph of monetarism’. ‘New Keynesianism’ of different vintages represents what we could call homeopathic Keynesianism: the minimum quantities to be added to the standard model, sufficient to mitigate the most outrageous claims of the latter.

Indeed, the widespread sepsis came with the appearance of a New Neoclassical Synthesis (NNS) (Goodfriend, 2007), grounded upon DSGE models (Clarida, Galí, & Gertler, 1999; Woodford, 2003; Galí & Gertler, 2007). In a nutshell, such models have an RBC core supplemented with monopolistic competition, nominal imperfections, and a monetary policy rule, and they can accommodate various forms of ‘imperfections’, ‘frictions’, and ‘inertias’. In many respects DSGE models are simply the late-Ptolemaic phase of the theory: add epicycles at full steam, without any theoretical or empirical discipline, in order to better match a particular set of data. Of course, in the epicycle frenzy one is never touched by a sense of the ridiculous in assuming that the mythical representative agent is at the same time extremely sophisticated when thinking about future allocations, but falls into backward-looking habits when deciding about consumption or, when having to change prices, is entangled in sticky prices! (Caballero, 2010, offers a thorough picture of this surreal state of affairs.) Again, Bob Solow gets straight to the point:Footnote 10

But I found the advent of – Dynamic Stochastic General Equilibrium models to be, not so much as a step backwards, but as a step out of economics. In fact, I’ve been criticized, probably justly, for making jokes about it, but it struck me as funny, as not something you could take seriously. There were times when you start reading an article in that vein, and it would start by saying, “Well, we are going to write down a micro-founded model.” It meant an economy with one person in it, and organized so that the economy carried out the wishes of that person. Well, when I was growing up and getting interested in economics, the essence of economics was that there were people and groups of people in the economy who had conflicting interests. Not only did they want different things, opposing things, they believed different things. And there’s no place for that in what passes, or what was passing a dozen years ago, for a micro-founded model.

Not all New Keynesian economists deliberately choose to step out of economics. Indeed, ‘New Keynesianism’ is a misnomer, in that sometimes it is meant to cover also simple but quite powerful models whereby Keynesian system properties are obtained out of otherwise standard microfoundations, just by taking ‘imperfections’ seriously as structural, long-term characteristics of the economy. Pervasive asymmetric information requires some genuine heterogeneity and interactions among agents (see Akerlof & Yellen, 1985; Greenwald & Stiglitz, 1993a, 1993b; Akerlof, 2002, 2007, among others), yielding Keynesian properties as ubiquitous outcomes of coordination failures (for more detailed discussions of Stiglitz’s contributions, see Dosi & Virgillito, 2017).Footnote 11 All that, still without considering the long-term changes in the so-called fundamentals, technical progress, and so on.

2.4 What about Innovation Dynamics and Long-Run Economic Growth?

We have argued that even the coordination issue has been written out of the agenda by assuming it to be basically solved by construction. But what about change? What about the ‘Unbound Prometheus’ (Landes, 1969) of capitalist search, discovery, and indeed destruction?

In Solow (1956) and subsequent contributions, technical progress appears by default, but it does so in a powerful way as the fundamental driver of long-run growth, to be explained outside the sheer allocation mechanism.Footnote 12 On the contrary, in the DSGE workhorse, there is no Prometheus: ‘innovations’ come as exogenous technology shocks upon the aggregate production function, with the same mythical agent optimally adjusting its consumption and investment plans. And the macroeconomic time series generated by the models are usually de-trended to focus on their business cycle dynamics.Footnote 13 End of the story.

The last thirty years have also seen the emergence of new growth theories (see e.g. Romer, 1990; Aghion & Howitt, 1992), bringing – as compared to the original Solow model – some significant advancements and, in our view, equally significant drawbacks. The big plus is the endogenisation of technological change: innovation is endogenised into economic dynamics. But that is done just either as a learning externality or as the outcome of purposeful, expensive efforts by profit-maximising agents. In the latter case, the endogenisation comes at what we consider a major price (although many colleagues would deem it a major achievement): reducing innovative activities to an equilibrium outcome of optimal intertemporal allocation of resources, with or without (probabilisable) uncertainty. In doing so, one also loses the genuine Schumpeterian notion of innovation as a disequilibrium phenomenon (at least as a transient!).

Moreover, ‘endogenous growth’ theories do not account for business-cycle fluctuations. This is really unfortunate, as Bob Solow (2005) puts it:

Neoclassical growth theory is about the evolution of potential output. In other words, the model takes it for granted that aggregate output is limited on the supply side, not by shortages (or excesses) of effective demand. Short-run macroeconomics, on the other hand, is mostly about the gap between potential and actual output. ... Some sort of endogenous knitting-together of the fluctuations and growth contexts is needed, and not only for the sake of neatness: the short run and its uncertainties affect the long run through the volume of investment and research expenditure, for instance, and the growth forces in the economy probably influence the frequency and amplitude of short-run fluctuations. ... To put it differently, it would be a good thing if there were a unified macroeconomics capable of dealing with trend and fluctuations, rather than a short-run branch and a long-run branch operating under quite different rules. My quarrel with the real business cycle and related schools is not about that; it is about whether they have chosen an utterly implausible unifying device.

And the separation between growth and business cycle theories is even more problematic given the burgeoning new empirical literature on hysteresis (among many others, see Dosi et al., 2018a; Cerra, Fatás, & Saxena, 2023, and the literature cited therein), which convincingly shows the existence of many interlinkages between short-run and long-run phenomena. In particular, in the presence of hysteresis, recessions can permanently depress output, thus undermining the very growth capabilities of economies. On the policy side, this calls for a more active role of fiscal and monetary interventions during downturns and, more generally, for considering the impact of policies across the whole spectrum of frequencies. On the theoretical side, hysteresis is not due to market imperfections, but rather to the very functioning of decentralised economies characterised by coordination externalities and dynamic increasing returns. In that respect, this Element shows that evolutionary agent-based models are genuinely able to account for the emergence of hysteresis, while providing a unified analysis of technological change and long-run growth together with coordination failures and business cycles.
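To see why hysteresis breaks the separation between cycle and trend, consider a deliberately stylised sketch. It is our own assumption for illustration, not the mechanism of the K+S models, where hysteresis emerges from agents' interactions: a one-off demand shock opens a mean-reverting output gap and, in the hysteretic case, a fraction `scarring` of each period's gap is carried into potential output (for instance through forgone investment and learning). All parameter values are hypothetical.

```python
def output_loss(periods: int = 200, rho: float = 0.7, scarring: float = 0.1,
                shock_time: int = 10, shock: float = -0.05) -> list[float]:
    """Deviation of log output from its no-shock path after a one-off demand shock.

    gap_t       = rho * gap_{t-1} + e_t                   (mean-reverting cyclical gap)
    potential_t = potential_{t-1} + scarring * gap_{t-1}  (hysteresis: downturns scar capacity)
    The common trend growth rate cancels out of the deviation and is omitted.
    """
    gap, potential = 0.0, 0.0
    deviation = []
    for t in range(periods):
        potential += scarring * gap  # last period's gap feeds into capacity
        e = shock if t == shock_time else 0.0
        gap = rho * gap + e
        deviation.append(potential + gap)
    return deviation


if __name__ == "__main__":
    for label, s in (("no hysteresis", 0.0), ("hysteresis", 0.1)):
        path = output_loss(scarring=s)
        print(f"{label:>13}: trough {min(path):+.4f}, after 200 periods {path[-1]:+.4f}")
```

Without scarring, output returns to its pre-shock path; with scarring, the long-run loss converges to `scarring * shock / (1 - rho)`, roughly 1.7 per cent of output with these illustrative values, so a temporary recession leaves a permanent mark on the level of output.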

2.5 From the ‘Great Moderation’ to the Great Recession

In the beginning of this century, under the new consensus reached by the New Neoclassical Synthesis (NNS), Robert E. Lucas, Jr. (2003), without any embarrassment, declared that the ‘central problem of depression prevention has been solved’, and Prescott religiously believed that ‘this is the golden age of macroeconomics’.Footnote 14 Moreover, a large number of NNS contributions claimed that economic policy was finally becoming more of a science (!?) (Galí & Gertler, 2007; Goodfriend, 2007; Mishkin, 2007; Taylor, 2007).Footnote 15 This was made possible by the ubiquitous presence of DSGE models in academia and by a universe of politicians and opinion-makers under the ‘free market’ / ‘free Wall Street’ globalization spell,Footnote 16 and was helped by the ‘divine coincidence’, whereby inflation targeting, performed under some form of Taylor rule, appeared to be a sufficient condition for stabilising the whole economy. During this Panglossian period, some economists went as far as claiming that the ‘scientific approach’ to macroeconomic policy incarnated in DSGE models was the ultimate cause of the so-called Great Moderation (Bernanke, 2004), namely the fall in GDP volatility experienced by most developed economies since the beginning of the 1980s, and that only minor refinements to the consensus workhorse model were needed.

Unfortunately, as happened with the famous statement made by Francis Fukuyama (1992) about an alleged ‘end of history’,Footnote 17 these positions have been proven substantially wrong by subsequent events. Indeed, a relatively small ‘micro’ event, the bankruptcy of Lehman Brothers in 2008, triggered a major financial crisis that caused the Great Recession, the deepest downturn experienced by developed economies since 1929.

In that respect, the Great Recession turned out to be a ‘natural experiment’ for economic analysis, showing the inadequacy of the predominant theoretical frameworks. Indeed, as Krugman (2011) points out, not only did DSGE models not forecast the crisis, but they did not even admit the possibility of such an event and, even worse, they did not provide any useful advice to policymakers on how to put the economy back on a steady growth path (see also Turner, 2010; Stiglitz, 2011, 2015; Bookstaber, 2017; Caverzasi & Russo, 2018; Haldane & Turrell, 2019).

Scholars of DSGE have reacted to such a failure by trying to amend their models with a new legion of epicycles, for example financial frictions, homeopathic doses of agent heterogeneity, bounded rationality, and exogenous fat-tailed shocks (see e.g. Lindé, Smets, & Wouters, 2016, and the discussion in Section 3.4). Conversely, an increasing number of economists have claimed that the 2008 ‘economic crisis is a crisis for economic theory’ (Colander et al., 2009; Farmer & Foley, 2009; Krugman, 2009, 2011; Caballero, 2010; Kirman, 2010b, 2016; Kay, 2011; Stiglitz, 2011, 2015; Dosi, 2014; Romer, 2016; Stiglitz, 2017). Indeed, history is the smoking gun against the deeply flawed basic assumptions of mainstream DSGE macroeconomics, for example rational expectations and representative agents, which prevent, by construction, the understanding of the emergence of deep downturns together with standard fluctuations (Stiglitz, 2015) and, more generally, of the very dynamics of economies. Indeed, with representative-agent microfoundations, how can one understand central phenomena such as rising inequality, bankruptcy cascades and systemic risks, innovation, structural change, and their co-evolution with climate dynamics?

3 The Emperor Is Still Naked: The Intrinsic Limits of DSGE Models

In line with the RBC tradition, the backbone of DSGE models (Clarida et al., 1999; Woodford, 2003; Galí & Gertler, 2007) is a standard stochastic equilibrium model with variable labour supply: the economy is populated by an infinitely lived representative household and by a representative firm, whose homogeneous production technology is hit by exogenous shocks.Footnote 18 All agents form their expectations rationally (Muth, 1961). The New Keynesian flavor of the model is provided by money, monopolistic competition, and sticky prices. Money usually has only the function of unit of account, and the nominal rigidities incarnated in sticky prices allow monetary policy to affect real variables in the short run. The RBC scaffold of the model allows the computation of the ‘natural’ levels of output and of the real interest rate, that is, the equilibrium values of the two variables under perfectly flexible prices. In line with the Wickselian tradition, the ‘natural’ output and interest rate constitute a benchmark for monetary policy: the central bank cannot persistently push output and the interest rate away from their ‘natural’ values without creating inflation or deflation. Finally, imperfect competition and possibly other real rigidities imply that the ‘natural’ level of output might not be socially efficient.

Dynamic Stochastic General Equilibrium models are commonly represented by means of vector auto-regression (VAR) models, usually estimated by employing full-information Bayesian techniques (see e.g. Smets & Wouters, 2003, 2007). Different types of shocks are usually added to improve the estimation. Moreover, as the assumption of forward-looking agents prevents DSGE models from matching the econometric evidence on the co-movements of nominal and real variables (e.g., the response of output and inflation to a monetary policy shock is too fast to match the gradual adjustment shown by the corresponding empirical impulse-response functions), a legion of ‘frictions’, generally not justified on theoretical grounds, is introduced, such as predetermined price and spending decisions, indexation of prices and wages to past inflation, sticky wages, habit formation in preferences for consumption, adjustment costs in investment, and variable capital utilization, among others. Once the parameters of the model are estimated and the structural shocks are identified, policy-analysis exercises are carried out assuming that the DSGE model is the ‘true’ data-generating process of the available time series.

The usefulness of DSGE models is undermined by plenty of theoretical, empirical, and political-economy problems. Let us discuss each of them in turn (see Fagiolo & Roventini, 2017, for a more detailed analysis).

3.1 Theoretical Issues

As already mentioned, DSGE models suffer from the same well-known problems as Arrow–Debreu general-equilibrium models (see Kirman, 1989, for a classic reference) and more. Neglecting them does not mean solving them. On the contrary!

More specifically, the well-known Sonnenschein (1972), Mantel (1974), and Debreu (1974) theorems show that neither the uniqueness nor, even less so, the stability of the general equilibrium can be guaranteed, even under stringent and unrealistic assumptions about agents and amazing information requirements. Indeed, Saari and Simon (1978) show that an infinite amount of information is required to reach the equilibrium for any initial price vector.
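The stability problem can be illustrated with Herbert Scarf's classic three-good exchange economy, a textbook counterexample that the text itself does not discuss. Consumer i owns one unit of good i and has Leontief preferences over goods i and i+1; the only equilibrium has equal prices, yet the continuous-time tâtonnement process dp/dt = z(p) never converges to it. The sketch below assumes NumPy and an ordinary fourth-order Runge–Kutta integrator with an illustrative step size and starting price vector; it checks that the norm of the price vector is conserved while its distance from the equilibrium ray stays bounded away from zero.

```python
import numpy as np


def excess_demand(p):
    """Excess demands in Scarf's three-good exchange economy.

    Consumer i owns one unit of good i and has Leontief utility
    min(x_i, x_{i+1}) (indices mod 3), so spends income p_i on equal
    amounts of goods i and i+1.
    """
    p1, p2, p3 = p
    d1 = p1 / (p1 + p2)  # consumer 1 buys d1 units of goods 1 and 2
    d2 = p2 / (p2 + p3)  # consumer 2 buys d2 units of goods 2 and 3
    d3 = p3 / (p3 + p1)  # consumer 3 buys d3 units of goods 3 and 1
    return np.array([d1 + d3 - 1.0, d1 + d2 - 1.0, d2 + d3 - 1.0])


def tatonnement(p0, dt=0.01, steps=10_000):
    """Integrate dp/dt = z(p) with the classic fourth-order Runge-Kutta scheme."""
    p = np.array(p0, dtype=float)
    path = [p.copy()]
    for _ in range(steps):
        k1 = excess_demand(p)
        k2 = excess_demand(p + 0.5 * dt * k1)
        k3 = excess_demand(p + 0.5 * dt * k2)
        k4 = excess_demand(p + dt * k3)
        p = p + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        path.append(p.copy())
    return np.array(path)


path = tatonnement([1.2, 1.0, 0.8])
norms = np.linalg.norm(path, axis=1)
# Equilibrium prices are proportional to (1, 1, 1): rescale that ray to each
# point's norm and measure how far the price vector stays from it.
eq = norms[:, None] * np.ones(3) / np.sqrt(3.0)
dist = np.linalg.norm(path - eq, axis=1)
print(f"norm drift: {norms.max() - norms.min():.2e}")
print(f"min/max distance from equilibrium ray: {dist.min():.3f} / {dist.max():.3f}")
```

Because both the sum of squared prices and the product of prices are conserved along the path, the price vector cycles around the equilibrium instead of approaching it, even though each consumer is a perfectly standard utility maximiser.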

The representative agent (RA) shortcut has been taken to circumvent any aggregation problem. The RA assumption implies that there is an isomorphism between micro- and macroeconomics, with the latter shrunk to the former. This, of course, is far from innocent: there are (at least) four reasons why it cannot be defended (Kirman, 1992).Footnote 19

First, individual rationality does not imply ‘aggregate rationality’: one cannot provide any formal justification to support the assumption that the macro level behaves as a maximising individual.

Second, even setting the first problem aside, the reactions of the representative agent to shocks or parameter changes may not coincide with the aggregate reactions of the represented agents; hence one cannot safely perform policy analyses with RA macro models.

Third, the lack of micro/macro isomorphism is revealed even in terms of aggregation of preferences: the representative agent might appear to prefer $a$ to $b$, even if all the ‘represented’ individuals might well prefer $b$ to $a$.

Fourth, the RA assumption also induces additional difficulties on testing grounds, because whenever one tests a proposition delivered by an RA model, one is also jointly testing the very RA hypothesis. Hence, one cannot disentangle the rejection of the latter from the rejection of the specific model proposition (more on that in Forni & Lippi, 1997, 1999; Pesaran & Chudik, 2014).
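The second reason can be illustrated with a deliberately simple, hypothetical Python example: households follow a nonlinear rule (spend cash on hand up to a target level, a crude stand-in for a liquidity constraint), and everybody receives the same transfer. Because the rule is nonlinear, the response of a ‘representative’ household holding the average amount of cash differs from the average response of the actual households. The rule, the target, and the cash levels are illustrative assumptions.

```python
def consumption(cash, target=5.0):
    """Hypothetical rule: spend all cash on hand up to a target level."""
    return min(cash, target)


def response_to_transfer(cash_levels, transfer=1.0):
    """Average change in consumption after an equal transfer to everyone."""
    before = [consumption(m) for m in cash_levels]
    after = [consumption(m + transfer) for m in cash_levels]
    return sum(a - b for a, b in zip(after, before)) / len(cash_levels)


households = [1.0, 9.0]                        # one constrained, one unconstrained
mean_cash = sum(households) / len(households)  # the 'representative' household

aggregate = response_to_transfer(households)
representative = response_to_transfer([mean_cash])
print(aggregate, representative)
```

Here the constrained household spends the whole transfer and the other spends none of it, so the aggregate response is 0.5, while the representative household, sitting exactly at the target, does not react at all.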

Finally, a further theoretical issue concerns the existence and determinacy of the system of rational-expectations equilibrium conditions of DSGE models. If the exogenous shocks and the fluctuations generated by the monetary policy rule are ‘small’, and the ‘Taylor principle’ holds, the rational-expectations equilibrium of the DSGE model exists and is locally determinate (Woodford, 2003). This result allows one to compute impulse-response functions in the presence of ‘small’ shocks or parameter changes and to safely employ log-linear approximations around the steady state. Unfortunately, the existence of a locally determinate equilibrium does not rule out the possibility of multiple equilibria at the global level (see e.g. Schmitt-Grohé & Uribe, 2000; Benhabib, Schmitt-Grohé, & Uribe, 2001; Ascari & Ropele, 2009). This is a serious issue because there is always the possibility, for example if the laws of motion of the shocks are not properly tuned, that the DSGE model enters an explosive path, thus preventing the computation of impulse-response functions and the use of the model for policy-analysis exercises.
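The role of the Taylor principle for local determinacy can be checked numerically in the textbook three-equation New Keynesian model (dynamic IS curve, New Keynesian Phillips curve, and a Taylor rule, with shocks and constants omitted). Since both the output gap and inflation are forward-looking, the rational-expectations equilibrium is locally determinate when both eigenvalues of the matrix mapping expectations into current values lie inside the unit circle. The Python sketch below assumes NumPy and illustrative parameter values; by construction it says nothing about global multiplicity, which is precisely the limitation stressed in the text.

```python
import numpy as np


def nk_transition_matrix(phi_pi, phi_y, beta=0.99, sigma=1.0, kappa=0.1):
    """Matrix A in [x_t, pi_t]' = A [E_t x_{t+1}, E_t pi_{t+1}]' (shocks omitted).

    Textbook three-equation New Keynesian model:
      x_t  = E_t x_{t+1} - (1/sigma) * (i_t - E_t pi_{t+1})
      pi_t = beta * E_t pi_{t+1} + kappa * x_t
      i_t  = phi_pi * pi_t + phi_y * x_t
    """
    omega = 1.0 / (sigma + phi_y + kappa * phi_pi)
    return omega * np.array([
        [sigma, 1.0 - beta * phi_pi],
        [sigma * kappa, kappa + beta * (sigma + phi_y)],
    ])


def is_determinate(phi_pi, phi_y=0.0, **params):
    """Both x_t and pi_t are non-predetermined, so local determinacy requires
    both eigenvalues of A to lie strictly inside the unit circle."""
    eigenvalues = np.linalg.eigvals(nk_transition_matrix(phi_pi, phi_y, **params))
    return bool(np.all(np.abs(eigenvalues) < 1.0))


for phi_pi in (0.8, 1.5):
    print(phi_pi, is_determinate(phi_pi))
```

With φ_y = 0 the check flips from indeterminate (φ_π = 0.8) to determinate (φ_π = 1.5), consistent with the known analytical condition κ(φ_π − 1) + (1 − β)φ_y > 0 for this model.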

3.2 Empirical Issues

Even neglecting the theoretical absurdities of the basic DSGE edifice discussed above, equally serious pitfalls concern their empirical use.

The estimation and testing of DSGE models are usually performed assuming that they represent the ‘true’ data-generating process (DGP) of the observed data (Canova, 2008). This implies that the ensuing inference and policy experiments are valid only if the DSGE model mimics the unknown DGP of the data.Footnote 20 Notice that such an epistemology, widespread in economics but unique to it (and to theology!), assumes that the world is ‘transparent’ and thus that the ‘model’ faithfully reflects it. This is well epitomised by the grotesque Scientology-like remark of Sargent about rational expectations: ‘All agents inside the model, the econometrician, and God share the same model’ (Sargent, 2005). All other scientific disciplines are basically there to conjecture, verify, and falsify models of the world. Not our own! With that in mind, let us assess the econometric performance of DSGE models along the identification, estimation, and evaluation dimensions (Fukac & Pagan, 2006).

Given the many non-linearities in the structural parameters, DSGE models are hard to identify (Canova, 2008). This leads to identification problems that can affect the parameter space either at the local or at the global level.Footnote 21 Identification problems lead to biased and fragile estimates of some structural parameters and do not allow one to correctly evaluate the significance of the estimated parameters using standard asymptotic theory. This opens a gap between the real and the DSGE DGP, depriving parameter estimates of any economic meaning and making policy-analysis exercises useless (Canova, 2008).
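A stylised Python sketch of the identification problem, assuming NumPy and a hypothetical model in which two ‘structural’ parameters enter the data only through their product: the sum of squared residuals (and hence a Gaussian likelihood) is exactly the same anywhere along the ridge a·b = 6, so the data cannot tell the parameter pairs apart. Real DSGE likelihoods are far more complex; this only isolates the mechanism.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=200)
# Hypothetical reduced form: the data only ever 'see' the product a * b.
y = 2.0 * 3.0 * x + rng.normal(scale=0.5, size=200)


def sse(a, b):
    """Sum of squared residuals of the hypothetical model y = a * b * x."""
    return float(np.sum((y - a * b * x) ** 2))


# Very different structural parameters, identical fit:
print(sse(2.0, 3.0), sse(3.0, 2.0), sse(1.0, 6.0))
```

Any estimator based on this criterion can pin down the product a·b but not a and b separately, whatever the sample size.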

Such identification problems also affect the estimation of DSGE models. Bayesian methods apparently address the estimation (and identification) issues by adding a (log) prior to the (log) likelihood function in order to increase the curvature of the latter and obtain a smoother function. However, this choice is not harmless: if the likelihood function is flat, and thus conveys little information about the structural parameters, the shape of the posterior distribution resembles that of the prior, reducing estimation to a more sophisticated calibration procedure carried out on an interval instead of on a point (see Fukac & Pagan, 2006; Canova, 2008). Indeed, the likelihood functions produced by most DSGE models are quite flat (see e.g. the exercises performed by Fukac & Pagan, 2006).Footnote 22
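The point about flat likelihoods can be seen in the simplest conjugate case: a normal prior on a single parameter combined with a normal likelihood. The plain-Python sketch below, with hypothetical numbers, computes the posterior as a precision-weighted average of prior and data. When the likelihood is informative the data dominate; when its precision is tiny, i.e. the likelihood is nearly flat, the posterior essentially reproduces the prior.

```python
def normal_posterior(prior_mean, prior_sd, data_mean, data_precision):
    """Conjugate update for a normal mean with known likelihood precision.

    data_precision = n / sigma**2; a small value means a flat likelihood.
    Returns (posterior mean, posterior sd, weight placed on the prior).
    """
    prior_precision = 1.0 / prior_sd ** 2
    post_precision = prior_precision + data_precision
    weight_on_prior = prior_precision / post_precision
    post_mean = weight_on_prior * prior_mean + (1.0 - weight_on_prior) * data_mean
    return post_mean, post_precision ** -0.5, weight_on_prior


# Hypothetical numbers: prior centred at 0.5, the data point to 0.9.
for label, precision in [("informative likelihood", 400.0), ("flat likelihood", 0.04)]:
    mean, sd, w = normal_posterior(0.5, 0.1, 0.9, precision)
    print(f"{label}: posterior mean={mean:.3f}, sd={sd:.3f}, weight on prior={w:.2f}")
```

In the flat case virtually all the weight falls on the prior, so the ‘estimate’ is the calibrated prior value in disguise.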

Evaluating a DSGE model, like any other model, implies in principle assessing its capability to reproduce a large set of stylized facts, in our case macroeconomic ones (microeconomic regularities cannot be reproduced by construction, given the representative-agent assumption). Fukac and Pagan (2006) performed such exercises on a popular DSGE model with disappointing results. Moreover, DSGE models might seem to do well in ‘normal’ times, but they cannot account even in principle for crises and deep downturns (Stiglitz, 2015), even when fat-tailed shocks are assumed (Ascari, Fagiolo, & Roventini, 2015).Footnote 23

The results just described seem to support Favero’s (2007) claim that modern DSGE models are exposed to the same criticisms advanced against the old-fashioned macroeconometric models belonging to the Cowles Commission tradition: they pay too much attention to the identification of the structural model (with all the problems described earlier) without testing the potential misspecification of the underlying statistical model (see also Johansen, 2006; Juselius & Franchi, 2007). If the statistical model is misspecified, policy-analysis exercises lose significance, because they are carried out in a ‘virtual’ world whose data-generating process is different from the one underlying the observed time series.

More generally, the typical assertion made by DSGE modelers that their theoretical frameworks are able to replicate real-world evidence is at odds with a careful scrutiny of how the empirical evaluation of DSGE models is actually done. Dynamic Stochastic General Equilibrium modelers, indeed, typically select ex ante the set of empirical criteria that their models should satisfy in such a way as to be sure that these restrictions are met. However, they usually refrain from confronting their models with the wealth of fundamental features of growth and business cycles in capitalist economies, which DSGE models are structurally unable to replicate.

3.3 Political-Economy Issues

Given the theoretical problems and the puny empirical performance of DSGE models, their assumptions cannot be defended by invoking arguments of either parsimonious modelling or data matching. This opens a Pandora’s box on the links between the legion of assumptions of DSGE models and their policy conclusions. Behind all that, one of the crucial issues concerns the relationship between the information the representative agent is able to access, the ‘model of the world’ she has, and her ensuing behaviour.

Dynamic Stochastic General Equilibrium models assume a very peculiar framework, whereby representative agents are endowed with a sort of ‘olympic’ rationality and have free access to an unbounded information set.Footnote 24 Moreover, rational expectations are the common shortcut employed by DSGE models to deal with uncertainty. Such utterly strong assumptions, however, raise more questions than they answer.

First, even assuming individual rationality, how does it carry over through aggregation, yielding rational expectations (RE) at the system level? For sure, individual rationality is not a sufficient condition for the system to converge to the RE fixed-point equilibrium (Howitt, 2012). Relatedly, while it is in general unreasonable to assume that agents possess all the information required to attain the equilibrium of the whole economy (Caballero, 2010), this applies even more so to periods of strong structural transformation, like the Great Recession, that require policies never tried before (e.g. quantitative easing; see Stiglitz, 2011, 2015).

Second, agents can also have the ‘wrong’ model of the economy, and yet available data may corroborate it (see, among the rich literature on sunspots, the seminal contribution of Woodford, 1990).

Third, as Hendry and Mizon (2010) point out, when ‘structural breaks’ affect the underlying stochastic process that governs the evolution of the economy, the learning process of agents introduces further non-stationarity into the system, preventing the economy from reaching an equilibrium state, if there is one. More generally, in the presence of genuine uncertainty (Knight, 1921; Keynes, 1936), ‘rational’ agents should follow heuristics, as these often outperform more complex expectation-formation rules (Gigerenzer & Brighton, 2009; Dosi et al., 2020a). But if this is so, then modelers should assume that agents behave as the psychological and sociological evidence suggests they actually do (Akerlof, 2002; Akerlof & Shiller, 2009). Given such circumstances, it is no wonder that empirical tests usually reject the full-information, rational-expectations hypothesis (see e.g. Coibion & Gorodnichenko, 2015; Gennaioli, Ma, & Shleifer, 2015).
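The point about structural breaks, and the case for simple heuristics, can be illustrated with a hypothetical series that undergoes a one-off location shift. A forecaster using the full-sample mean (a sensible estimator if the process were stationary) keeps making systematic errors after the break, whereas the naive heuristic ‘next period equals this period’ adapts immediately. The sketch assumes NumPy; the break size, noise level, and seed are illustrative, and it shows one setting where the heuristic wins, not that it always does.

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical series: mean 0 for 100 periods, then a location shift to 3.
y = np.concatenate([np.zeros(100), np.full(50, 3.0)]) + rng.normal(scale=0.5, size=150)

errors_mean, errors_naive = [], []
for t in range(100, 150):
    history = y[:t]
    forecast_mean = history.mean()  # 'optimal' only if the process is stationary
    forecast_naive = history[-1]    # simple heuristic: tomorrow looks like today
    errors_mean.append(y[t] - forecast_mean)
    errors_naive.append(y[t] - forecast_naive)

mse_mean = float(np.mean(np.square(errors_mean)))
mse_naive = float(np.mean(np.square(errors_naive)))
print(f"MSE full-sample mean: {mse_mean:.2f}, MSE naive heuristic: {mse_naive:.2f}")
```

The naive rule pays once, at the break date, and then tracks the new regime, while the full-sample mean drags the pre-break history along for the rest of the sample.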

The representative-agent (RA) assumption prevents DSGE models from addressing any distributional issue, even though such issues are intertwined with the major causes of the Great Recession and, more generally, are fundamental for studying the effects of policies. So, for example, rising income inequality (Atkinson, Piketty, & Saez, 2011) and wealth inequality (Piketty & Zucman, 2014) might have contributed to inducing households to hold more and more debt, paving the way to the subprime mortgage crisis (Fitoussi & Saraceno, 2010; Stiglitz, 2011). Redistribution matters, and different policies have a different impact on the economy according to the groups of people they are designed for (e.g. unemployment benefits have larger multipliers than tax cuts for high-income individuals; see Stiglitz, 2011). However, the study of redistributive policies requires models with heterogeneous agents.
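A toy Python calculation, with hypothetical marginal propensities to consume (MPCs) that are not empirical estimates, shows why targeting matters and why a representative agent misses it: the same transfer generates very different first-round consumption depending on who receives it, while a representative agent endowed with the population-average MPC gives one and the same answer whatever the targeting. Subsequent multiplier rounds are deliberately left out.

```python
# Hypothetical marginal propensities to consume: illustrative, not estimates.
MPC = {"unemployed": 0.9, "high_income": 0.2}
POP_SHARE = {"unemployed": 0.5, "high_income": 0.5}


def first_round_spending(transfers):
    """Extra consumption generated by transfers given as {group: amount}."""
    return sum(MPC[group] * amount for group, amount in transfers.items())


def representative_agent_spending(total_transfer):
    """A representative agent with the population-average MPC cannot tell who
    receives the money, so every targeting scheme yields the same answer."""
    average_mpc = sum(MPC[group] * POP_SHARE[group] for group in MPC)
    return average_mpc * total_transfer


to_unemployed = first_round_spending({"unemployed": 100.0})
to_high_income = first_round_spending({"high_income": 100.0})
representative = representative_agent_spending(100.0)
print(to_unemployed, to_high_income, representative)
```

The representative-agent figure falls between the two targeted outcomes and is, by construction, blind to the distributional choice that the policy question is about.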

The RA assumption, coupled with the implicit presence of a Walrasian auctioneer that sets prices before exchanges take place, rules out by construction the possibility of interactions among heterogeneous individuals. This also prevents DSGE models from accurately studying the dynamics of credit and financial markets. Indeed, the assumption that the representative agent always satisfies the transversality condition removes default risk from the models (Goodhart, 2009). As a consequence, agents face the same interest rate (no risk premia) and all transactions can be undertaken in capital markets without the need for banks. Abstracting from default risk prevents DSGE models from contemplating the conflict between price and financial stability faced by central banks (Howitt, 2012). As they do not consider the huge costs of financial crises, they deceptively appear to work fine only in ‘normal’ times (Stiglitz, 2011, 2015), basically when one does not need them!

In the same vein, DSGE models are not able to account for involuntary unemployment. Indeed, even though they are meant to study the welfare effects of macroeconomic policies, unemployment is absent or, when present, stems only from frictions in the labour market or from wage rigidities. Such explanations are especially hard to believe during deep downturns like the Great Recession. In DSGE models, the lack of heterogeneous, interacting firms and workers/consumers prevents the study of possible massive coordination failures (Leijonhufvud, 1968, 2000; Cooper & John, 1988), which could lead to an insufficient level of aggregate demand and to involuntary unemployment.

In fact, the macroeconomics of DSGE models does not appear to be genuinely grounded on any microeconomics (Stiglitz, 2011, 2015): they do not even take into account the micro and macro implications of imperfect information, while the behaviour of agents is often described with arbitrary specifications of the functional forms (e.g. Dixit–Stiglitz utility function, Cobb–Douglas production function).

More generally, DSGE models suffer from a sort of internal contradiction. On the one hand, strong assumptions such as rational expectations, perfect information, and complete financial markets are introduced ex ante to provide a rigorous and formal mathematical treatment and to allow for policy recommendations. On the other hand, many imperfections (e.g., sticky prices, rule-of-thumb consumers) are introduced ex post, without any theoretical justification, only to allow the DSGE model to match the data (see also the discussion later in this Element). Along these lines, Chari et al. (2009) argue that the high level of arbitrariness of DSGE models in the specification of structural shocks may leave them exposed to the so-called Lucas critique, preventing them from being usefully employed for policy analysis.

Dynamic Stochastic General Equilibrium models assume that business cycles stem from a plethora of exogenous shocks. As a consequence, they do not explain business cycles, preferring instead to postulate them as a sort of deus ex machina. This could explain why, even in ‘normal times’, DSGE models are not able to match many business cycle stylized facts or need to assume serially correlated shocks to produce fluctuations resembling the ones observed in reality (cf. Zarnowitz, 1985, 1997; Cogley & Nason, 1993; Fukac & Pagan, 2006). Even worse, phenomena like the subprime mortgage crisis clearly show how bubbles and, more generally, endogenously generated shocks are far more important for understanding economic fluctuations (Stiglitz, 2011, 2015).
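The point about serially correlated shocks can be sketched with a toy model that has no internal propagation mechanism at all: output is a fixed multiple of an exogenous AR(1) shock. The Python code below, assuming NumPy and illustrative persistence values and sample size, shows that the first-order autocorrelation of simulated output simply reproduces the persistence parameter `rho` assumed for the shock, so any realistic-looking persistence is imported from outside the model rather than explained by it.

```python
import numpy as np


def simulate(rho, theta=1.0, T=100_000, seed=0):
    """Toy model without internal propagation: y_t = theta * z_t, where the
    exogenous shock follows z_t = rho * z_{t-1} + e_t with e_t ~ N(0, 1)."""
    rng = np.random.default_rng(seed)
    e = rng.normal(size=T)
    z = np.empty(T)
    z[0] = e[0]
    for t in range(1, T):
        z[t] = rho * z[t - 1] + e[t]
    return theta * z


def lag1_autocorrelation(series):
    """Sample correlation between consecutive observations."""
    return float(np.corrcoef(series[:-1], series[1:])[0, 1])


for rho in (0.0, 0.9):
    print(rho, round(lag1_autocorrelation(simulate(rho)), 3))
```

Changing `theta` rescales output but leaves its persistence untouched: the dynamics belong to the assumed shock process.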

Moving to the normative side, one supposed advantage of the DSGE approach is the possibility of deriving optimal policy rules. However, when the ‘true’ model of the economy is not known, rule-of-thumb policy rules can perform better than optimal ones (Brock et al., 2007; Orphanides & Williams, 2008). Indeed, in complex worlds with pervasive uncertainty (e.g. in financial markets), policy rules should be simple (Haldane, 2012), while ‘redundancy’ and ‘degeneracy’ (Edelman & Gally, 2001) are required to achieve resilience.

3.4 Post-Crisis DSGE Models: Some Fig Leaves Are Not a Cloth

The failure of DSGE models to account for the Great Recession sparked the search for refinements, which were also partly trying to address the critiques discussed in the previous three sections. More specifically, researchers in the DSGE camp have tried to include a financial sector in the barebones model, to consider some forms of (very mild) heterogeneity and bounded rationality, and to explore the impact of rare exogenous shocks on the performance of DSGE models.

Let us provide a bird’s-eye view of such recent developments. (For another overview, see Caverzasi & Russo, 2018.)

The new generation of DSGE models with financial frictions is mostly grounded on the so-called financial accelerator framework (Bernanke, Gertler, & Gilchrist, 1999), which provides a straightforward explanation of why credit and financial markets can affect real economic activity. The presence of imperfect information between borrowers and lenders introduces a wedge between the cost of external credit and that of internal finance. In turn, the balance sheets of lenders and borrowers can affect the real sector via the supply of credit and the spread on loan interest rates (see Gertler & Kiyotaki, 2010, for a survey). For instance, Curdia and Woodford (2010) introduce patient and impatient consumers to justify the existence of a stylized financial intermediary, which copes with default risk by charging a spread on its loans that is subject to exogenous, stochastic disturbances. On the policy side, they conclude that central banks should keep on controlling the short-term interest rate (see also Curdia & Woodford, 2016). In the model of Gertler and Karadi (2011), households can randomly become workers or bankers. In the latter case, they provide credit to firms, but as they are constrained by deposits and the resources they can raise in the interbank market, a spread emerges between loan and deposit interest rates (see also Christiano, Motto, & Rostagno, 2013). They find that during (exogenous) recessions, unconventional monetary policy (i.e. the central bank providing credit intermediation) is welfare enhancing (see also Gertler & Kiyotaki, 2010, and Curdia & Woodford, 2011, for other types of credit policies).
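A minimal Python sketch of the financial-accelerator wedge, under an assumed constant-elasticity form in which the gross external finance premium equals (assets / net worth) raised to a small elasticity `nu`: lower net worth (higher leverage) raises the premium and therefore the return on capital that a borrower must earn. The functional form, the elasticity, and the safe rate are illustrative assumptions, not the specification of any particular paper cited above.

```python
def external_finance_premium(assets, net_worth, nu=0.05):
    """Hypothetical constant-elasticity premium: s = (assets / net_worth) ** nu.

    In this form the premium equals 1 (no wedge) when net worth covers all
    assets and rises with leverage; nu is an illustrative elasticity.
    """
    if net_worth <= 0:
        raise ValueError("net worth must be positive")
    return (assets / net_worth) ** nu


safe_rate = 1.01  # hypothetical gross risk-free rate
for net_worth in (100.0, 50.0, 25.0):
    required_return = safe_rate * external_finance_premium(100.0, net_worth)
    print(f"net worth {net_worth:5.1f}: required gross return on capital {required_return:.4f}")
```

A fall in borrowers' net worth, for example after an adverse shock, raises the required return and thus depresses investment, which is the amplification channel the framework relies on.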

The foregoing contributions allow for some form of mild heterogeneity among agents. Some DSGE models consider two classes of agents in order to explore issues such as debt deflation or inequality. For instance, Eggertsson and Krugman (2012) introduce patient and impatient agents and expose the latter to exogenous debt-limit shocks, which force them to deleverage. In such a framework, debt deflations and liquidity traps can arise, and fiscal policy can be effective. Kumhof, Rancière, and Winant (2015) try to study the link between rising inequality and financial crises employing a DSGE model where an exogenously imposed income distribution guarantees that top-earner households (5% of the income distribution) lend to the bottom ones (95% of the income distribution). Exogenous shocks induce low-income households to increase their indebtedness, raising their ‘rational’ willingness to default and, in turn, the probability of a financial crisis. More recent works consider a continuum of heterogeneous households in an incomplete-market framework. For instance, in the Heterogeneous Agent New Keynesian (HANK) model developed by Kaplan, Moll, and Violante (2018), the assumptions of uninsurable income shocks and multiple assets with different degrees of liquidity and returns lead to wealth distributions and marginal propensities to consume more in tune with the empirical evidence. In this framework, monetary policy is effective only if it provokes a general-equilibrium response of labour demand and household income, and, as Ricardian equivalence breaks down, its impact is intertwined with fiscal policy.

An increasing number of DSGE models allow for various forms of bounded rationality (see Dilaver, Jump, & Levine, 2018, for a survey), albeit in homeopathic degrees. In one stream of literature, agents know the functional form of the equilibrium of the economy and form their expectations as if they were econometricians, using the available observations to compute their parameter estimates via ordinary least squares (in line with Evans & Honkapohja, 2001). Other recent contributions have relaxed the rational-expectations assumption while preserving maximisation (Woodford, 2013). For instance, an increasing number of works assume ‘rational inattention’, that is, optimising agents rationally decide not to use all the available information because they have finite processing capacity (Sims, 2010). Along this line, Gabaix (2014) models ‘bounded rationality’ assuming that agents have a simplified model of the world but, nonetheless, can jointly maximise their utility and their inattention. Drawing inspiration from artificial intelligence programs, Woodford (2018) develops a DSGE model where rational agents can forecast up to $k$ steps ahead. Finally, building on Brock and Hommes (1997), in an increasing number of DSGE models agents can form their expectations using an ecology of different learning rules, usually fundamentalist versus extrapolative rules (see e.g. Branch & McGough, 2011; De Grauwe, 2012; Anufriev et al., 2013; Massaro, 2013). As the fraction of agents following different expectation rules changes over time, ‘small’ shocks can give rise to persistent and asymmetric fluctuations, and endogenous business cycles may arise.
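The ‘agents as econometricians’ approach can be sketched in Python with a hypothetical self-referential model in which the price depends on agents' own forecast, p_t = mu + alpha * E_{t-1} p_t + noise. Agents regress prices on a constant, which amounts to updating the sample mean with a decreasing gain 1/t; with alpha < 1 their belief converges to the rational-expectations value mu / (1 - alpha). The model, parameter names, and values are illustrative assumptions (NumPy is used only for the optional noise term).

```python
import numpy as np


def learn_price(mu=1.0, alpha=0.5, T=10_000, noise_sd=0.0, seed=0, belief0=0.0):
    """Decreasing-gain (recursive least-squares) learning in a hypothetical
    self-referential model p_t = mu + alpha * E_{t-1} p_t + eta_t.

    Agents regress p on a constant, i.e. their belief is the running sample
    mean of past prices; the rational-expectations price is mu / (1 - alpha).
    """
    rng = np.random.default_rng(seed)
    belief = belief0
    for t in range(1, T + 1):
        price = mu + alpha * belief + noise_sd * rng.normal()
        belief += (price - belief) / t  # recursive update of the sample mean
    return belief


print(learn_price(), 1.0 / (1.0 - 0.5))
```

Convergence is slow (here the gap shrinks roughly like t to the power alpha - 1), which is one reason why the learning stream only adds a transitional layer on top of the rational-expectations equilibrium rather than replacing it.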

Lastly, a new generation of DSGE models tries to account for deep downturns and disasters. Curdia, Del Negro, and Greenwald (Reference Curdia, Del Negro and Greenwald2014) estimate the Smets & Wouters (Reference Smets and Wouters2007) model assuming Student’s $t$-distributed shocks. They find that the fit of the model improves and that rare deep downturns become more relevant (see also Fernandez-Villaverde & Levintal, Reference Fernandez-Villaverde and Levintal2018, for a DSGE model with exogenous time-varying rare disaster risk). A similar strategy is employed by Canzoneri et al. (Reference Canzoneri, Collard, Dellas and Diba2016) to allow the effects of fiscal policies to change over time and to obtain state-dependent fiscal multipliers greater than one in recessions.
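Why fat-tailed shocks make rare deep downturns more likely can be seen in a small sketch. It compares how often a Gaussian shock and a Student’s t shock with the same (unit) variance exceed four standard deviations. The degrees of freedom (`nu = 4`), the threshold and the sample size are illustrative assumptions. The t draws use the standard construction Z / sqrt(chi2/nu), with the chi-squared variable drawn through Python’s `random.gammavariate`. The sketch only illustrates the shock distributions, not any estimated model.

```python
import math
import random


def tail_frequency(draw, n=200_000, threshold=4.0):
    """Share of n draws whose absolute value exceeds `threshold` (in standard deviations)."""
    return sum(abs(draw()) > threshold for _ in range(n)) / n


def make_shocks(nu=4.0, seed=0):
    """Return unit-variance Gaussian and Student's t shock generators (nu > 2 assumed)."""
    rng = random.Random(seed)

    def gaussian():
        return rng.gauss(0.0, 1.0)

    def student_t():
        # t = Z / sqrt(chi2_nu / nu), where chi2_nu ~ Gamma(shape=nu/2, scale=2)
        z = rng.gauss(0.0, 1.0)
        chi2 = rng.gammavariate(nu / 2.0, 2.0)
        t = z / math.sqrt(chi2 / nu)
        # Rescale to unit variance, since Var[t_nu] = nu / (nu - 2)
        return t * math.sqrt((nu - 2.0) / nu)

    return gaussian, student_t


gaussian, student_t = make_shocks()
print(f"P(|shock| > 4 sd), Gaussian:  {tail_frequency(gaussian):.5f}")
print(f"P(|shock| > 4 sd), Student t: {tail_frequency(student_t):.5f}")
```

With these settings, the Student’s t shock should exceed the threshold far more often than the Gaussian one, even though both have the same variance.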

3.4.1 Taking Stock of New DSGE Developments

The new generation of DSGE models tries to address some of the problems mentioned in the previous section. But do post-crisis DSGE models go beyond the intrinsic limits of such an approach and provide a satisfactory account of macroeconomic dynamics? We maintain that the answer is definitely negative.

The major advance of the new class of models is the recognition that agents can be heterogeneous in terms of their rationality, consumption preferences (patient vs. impatient), incomes, and so on. However, DSGE models 2.0 can handle only rough forms of heterogeneity and they still do not contemplate direct interactions among agents. Without interactions, they just scratch the surface of the impact of credit and finance on real economic dynamics without explicitly modeling the behaviour of banks (e.g. endogenous risk-taking), network dynamics, financial contagion, the emergence of bankruptcy chains, and the implications of endogenous money. A complex machinery is built just to introduce into the model a new epicycle: exogenous credit shocks.

Similar remarks apply to the other directions of ‘advancements’. So, bounded rationality is introduced in homeopathic quantities in order to get quasi-Rational Expectations equilibrium models (Caverzasi & Russo, Reference Caverzasi and Russo2018) with just marginally improved empirical performance. But one can’t be a little bit pregnant! The impact of bounded rationality, à la Simon (Reference Simon1959), on macroeconomic dynamics can be pervasive well beyond what can be accounted for by DSGE (see the discussion later in the Element).

Similarly, DSGE models superficially appear able to account for both mild and deep downturns, but in fact they merely assume them, increasing the models’ degrees of freedom. Indeed, business cycles are still triggered by exogenous shocks, which are either drawn from an ad hoc fat-tailed distribution or assumed to have massive negative effects.Footnote 25 More generally, no DSGE model has ever tried to jointly account for the endogenous emergence of long-run economic growth and of business cycles punctuated by deep downturns. In this respect, the plea of Solow (Reference Solow2005) is still unanswered.

Summing up, we suggest that the recent developments in the DSGE camp are just patches added to a torn cloth. But how many patches can one add before trashing it? For instance, Lindé, Smets, and Wouters (Reference Lindé, Wouters, Taylor and Uhlig2016), after having expanded the benchmark DSGE model to account for the zero-lower bound, non-Gaussian shocks, and the financial accelerator, conclude that such extensions ‘do not suffice to address some of the major policy challenges associated with the use of non-standard monetary policy and macroprudential policies’.Footnote 26 More radically, we do think, using Kirman’s expression, that the emperor is still naked:Footnote 27 DSGE models are simply post-real (Romer, Reference Romer2016). Additional patches are a waste of intellectual (and economic) resources that pushes macroeconomics deeper and deeper into a ‘Fantasyland’ (Caballero, Reference Caballero2010).

Macroeconomics, we shall argue, should be built on very different grounds based on an understanding of the foundations of complex evolving systems. In the construction of that macroeconomics, evolutionary economics and agent-based computational models represent core building blocks. We present such an alternative paradigm in the rest of this Element.

4 Macroeconomic Agent-Based Models

Agent-based computational economics (ACE) can be defined as the computational study of economies thought of as complex evolving systems (Tesfatsion, Reference Tesfatsion, Tesfatsion and Judd2006; although, in fact, Nelson & Winter, Reference Nelson and Winter1982, were the genuine contemporary root of evolutionary ACE, before anyone called it that).

Unlike DSGE models, and indeed many other models in economics, ACE provides an alternative methodology for building macroeconomic models from the bottom up. These models have sound microfoundations based on realistic assumptions about agent behaviours and interactions, where realistic means rooted in the actual empirical microeconomic evidence (Simon, Reference Simon1977; Kirman, Reference Kirman2016). The state of the economics discipline nowadays is such that it is already subversive to hold that, in modelling exercises, agents should have the same information as the economists modelling the economy.

Needless to say, such an epistemological prescription is a progressive step vis-à-vis the idea that theorists, irrespective of the information they have, must know as much as God – give or take some stochastic noise – and agents must know as much as the theorists (or better, theologians and God). However, such a methodology is not enough. First, it is bizarre to posit any isomorphism between the knowledge embodied in the observer and that embodied in the object of observation: it is like saying that ants or bees must know as much as the student of anthills and beehives! Second, in actual fact human agents behave according to rules, routines, and heuristics which have little to do with either ‘Olympic rationality’ or even its ‘bounded’ counterpart (Gigerenzer, Reference Gigerenzer2007; Gigerenzer & Brighton, Reference Gigerenzer and Brighton2009; Dosi, Faillo, & Marengo, Reference Dosi, Faillo and Marengo2018). The big challenge here, still largely unexplored, concerns the regularities in what people, and especially organisations, do regarding, for example, pricing, investment rules, R&D, hiring and firing, and so on. Half a century ago, we knew much more about mark-up pricing, scrapping and expansionary investment, and more, because there were micro inquiries asking firms ‘what do you actually do ...’ (more on that in Dosi, Reference Dosi2023: chapter 8). This is mostly over because, at least since the 1980s, the conflict between evidence and theory has been definitively resolved: theory is right, evidence must be wrong (or at least well massaged)!Footnote 28 As a result, for example, no responsible advisor would suggest that a PhD student undertake case studies on the subject, and no research grant would be requested for them. However, for ABMs all this evidence is the crucial micro behavioural foundation, compared to which current ‘calibration exercises’Footnote 29 look frankly pathetic.

All this regarding behaviours. Another crucial tenet concerns interactions.

The evolutionary ABM methodology tends to build whatever macro edifice, whenever possible, upon actual micro interactions. These concern what happens within organisations – a subject beyond the scope of this Element – and across organisations and individuals, that is, the blurred set of markets and networks. Admittedly, we are very far from any comprehensive understanding of ‘how the market works’, basically for the same (bogus) reasons as before: if one can prove the existence of some market fixed point, why should one bother to show how particular market mechanisms lead there? And here again, ABMs badly need evidence on the specific institutional architecture of interactions and its outcomes. Kirman and Vriend (Reference Kirman and Vriend2001) and Kirman (Reference Kirman2010a) offer vivid illustrations of the importance of particular institutional set-ups (see also Dosi, Reference Dosi2023: chapter 8).

Fully fledged ABMs also require fully fledged markets. For example, labour markets within the K+S family are explored in Dosi et al. (Reference Dosi, Pereira, Roventini and Virgillito2017, Reference Dosi, Pereira, Roventini and Virgillito2018b). Other exercises on markets have been undertaken by Alan Kirman and colleagues (see chapter 8 of Dosi, Reference Dosi2023).

Short of that, much more concise (and more black-boxed) representations come from network theory (e.g., Albert & Barabasi, Reference Albert and Barabasi2002) and models of social interactions (e.g., Brock & Durlauf, Reference Brock, Durlauf, Heckman and Leamer2001). These, however, move away from trivial interaction patterns, such as those often implied by game-theoretic frameworks. This, together with the evidence on the persistent heterogeneity and turbulence characterising markets and economies, focuses the investigation on out-of-equilibrium dynamics endogenously fuelled by the interactions among heterogeneous agents.

All these building blocks are more than sufficient to yield the properties of complex environments. But what about evolution? Basically, evolution means the emergence of novelty. In economists’ jargon, this entails continual changes in the ‘fundamentals’ and econometrically ubiquitous ‘structural breaks’. Examples are new technologies, new products, new organisational forms, new behaviours, etc.,Footnote 30 which emerge at some point along the arrow of time and were not there at the start. Formally, all this may well be captured by endogenous dynamics of the ‘fundamentals’ of the economy. Better still, it may be captured by an ever-expanding dimensionality of the state space and its dynamics (more in Dosi & Winter, Reference Dosi, Winter, Augier and March2002 and Dosi & Virgillito, Reference Dosi and Virgillito2017).

4.1 The Basics

Every macroeconomic ABM typically possesses the following structure. There is a population – or a set of populations – of heterogeneous agents (e.g., consumers, firms, banks), possibly hierarchically organised, whose size may or may not change over time. The evolution of the system is observed in discrete time steps, $t = 1, 2, \ldots$. Time steps may be days, quarters, years, and so on. At each $t$, every agent $i$ is characterised by a finite number of microeconomic variables $x_{i,t}$, which may change over time (e.g., production, consumption, wealth), and by a vector of microeconomic parameters $\theta_i$ (e.g., mark-ups, propensity to consume). In turn, the economy may be characterised by some macroeconomic (fixed) parameters $\Theta$ (possibly mimicking policy variables such as tax rates, the Basel capital requirements, etc.).

Given some initial conditions $x_{i,0}$ (e.g., wealth, technology) and a choice of micro and macro parameters, at each time step one or more agents are chosen to update their microeconomic variables. This may happen randomly or be triggered by the state of the system itself. Agents picked for the updating stage may (or may not) collect the available information about the current and past states (i.e., microeconomic variables) of a subset of other agents, typically those they directly interact with. They typically feed knowledge about their own history and their local environment into their heuristics, routines, and other algorithmic behavioural rules. Possibly, they also use the (limited) information they can gather about the state of the whole economy. Interactions occur within the population of heterogeneous agents. They can involve different agents of the same type (e.g., firms from the same industry) or entities of different types (e.g., trading relationships between firms and consumers in the goods market). Such a stream of interactions leads to the emergence of a multi-layer network structure that evolves endogenously over time. At the same time, in truly evolutionary environments, technologies, organisations, behaviours, and markets collectively co-evolve.
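The generic updating structure just described can be sketched in a few lines of Python. This is a hypothetical toy, not the K+S code. The `Agent` fields (`markup`, `memory`, `production`, `price`), the fixed ring network, the production and pricing heuristics, and `tax_rate` (standing in for a macroeconomic policy parameter) are all assumptions for illustration. At each step, a random subset of agents updates using three sources of information: its own past output, its neighbours’ prices, and one crude aggregate demand signal.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Agent:
    # Hypothetical microeconomic parameters (fixed over time)
    markup: float
    memory: float
    # Microeconomic variables (updated over time)
    production: float = 1.0
    price: float = 1.0
    past_production: list = field(default_factory=list)


@dataclass
class Economy:
    agents: list
    neighbours: dict  # agent index -> indices of the agents it interacts with
    tax_rate: float   # hypothetical macroeconomic (policy) parameter


def update_agent(eco, i, last_total_demand, rng):
    """Heuristic update of agent i from its own history, its neighbours and one aggregate signal."""
    a = eco.agents[i]
    local = [eco.agents[j] for j in eco.neighbours[i]]
    local_price = sum(b.price for b in local) / len(local) if local else a.price
    # Adaptive-style expectation: blend own past output with average demand per agent
    signal = last_total_demand / len(eco.agents)
    expected = a.memory * a.production + (1 - a.memory) * signal
    a.past_production.append(a.production)
    a.production = max(0.0, expected * (1 + rng.gauss(0.0, 0.02)))
    # Pricing routine: tax-inclusive mark-up on a unit cost of 1, nudged toward neighbours' prices
    own_price = (1 + a.markup) * (1 + eco.tax_rate)
    a.price = 0.5 * own_price + 0.5 * local_price


def step(eco, last_total_demand, rng, share=0.3):
    """One time step: a randomly chosen subset of agents updates, one after another."""
    n = len(eco.agents)
    for i in rng.sample(range(n), max(1, int(share * n))):
        update_agent(eco, i, last_total_demand, rng)


rng = random.Random(7)
n_agents = 20
agents = [Agent(markup=rng.uniform(0.1, 0.3), memory=rng.uniform(0.5, 0.9))
          for _ in range(n_agents)]
ring = {i: [(i - 1) % n_agents, (i + 1) % n_agents] for i in range(n_agents)}
eco = Economy(agents, ring, tax_rate=0.1)

total_demand = float(n_agents)
for t in range(1, 51):
    step(eco, total_demand, rng)
    # Crude stand-in for demand feedback: next period's signal is current total output
    total_demand = sum(a.production for a in eco.agents)
```

The sketch could move closer to the endogenous, evolving networks described in the text in two ways. One is to replace the random draw in `step` with a state-dependent trigger. The other is to let the `neighbours` dictionary change over time.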

After the updating round has taken place, a new set of microeconomic variables is fed into the economy for the next-step iteration: aggregate variables $X_t$ are computed by simply summing up or averaging individual characteristics. Once again, the definitions of aggregate variables closely follow those of statistical aggregates (e.g., GDP, unemployment).
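The aggregation step can be sketched minimally with hypothetical firm records (output, price, employees). The sketch makes three simplifying assumptions. GDP is the plain sum of firm output, which ignores intermediate inputs. The unemployment rate is derived from total employment and an assumed labour force. An output-weighted average price serves as a simple price index.

```python
from dataclasses import dataclass


@dataclass
class Firm:
    output: float    # real output in the period (hypothetical record)
    price: float
    employees: int


def aggregates(firms, labour_force):
    """Compute period aggregates by summing or averaging individual firm records."""
    gdp = sum(f.output for f in firms)
    employed = sum(f.employees for f in firms)
    unemployment_rate = 1 - employed / labour_force
    # Output-weighted average price as a simple price index
    price_index = sum(f.price * f.output for f in firms) / gdp if gdp > 0 else float("nan")
    return {"gdp": gdp, "unemployment_rate": unemployment_rate, "price_index": price_index}


firms = [Firm(10.0, 1.2, 8), Firm(5.0, 1.0, 4), Firm(25.0, 1.1, 18)]
print(aggregates(firms, labour_force=40))
```

The three illustrative firms give a GDP of 40, an unemployment rate of 25%, and a price index of about 1.11.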

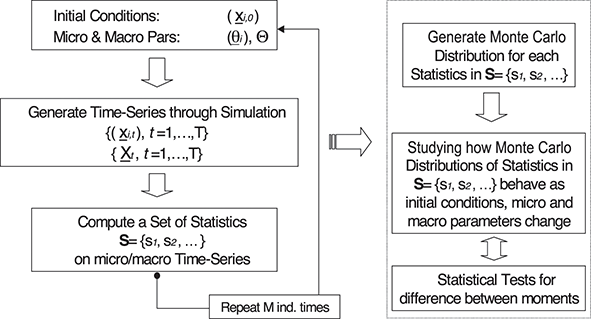

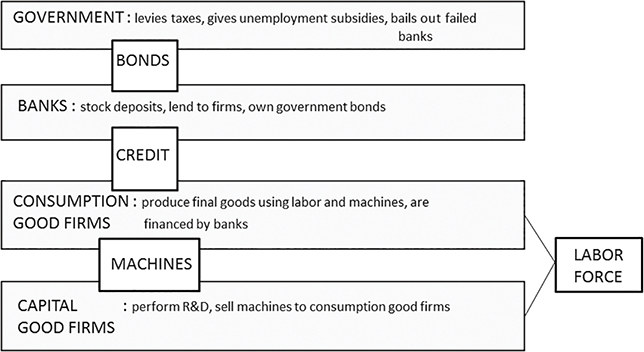

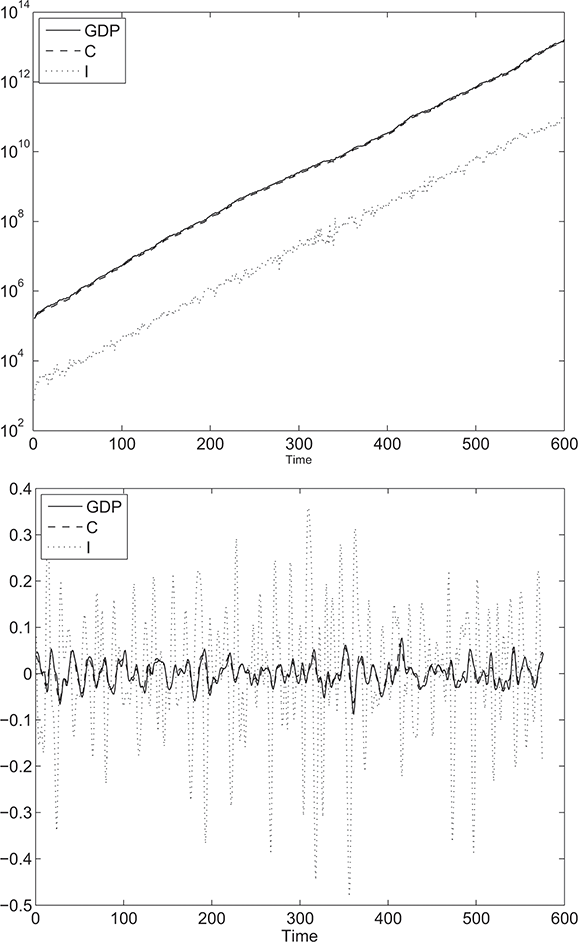

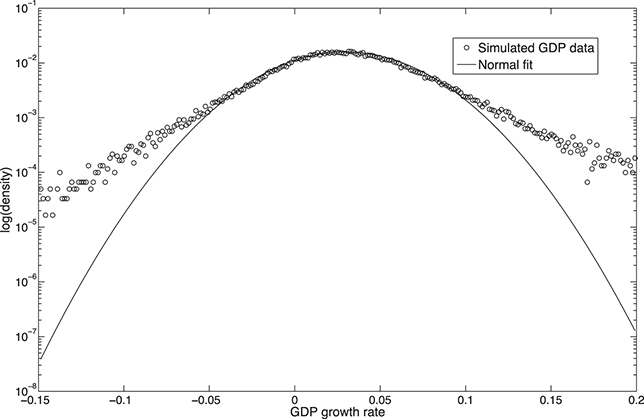

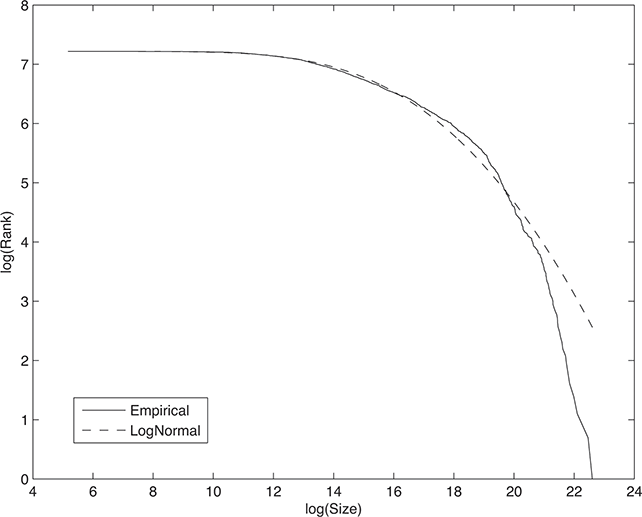

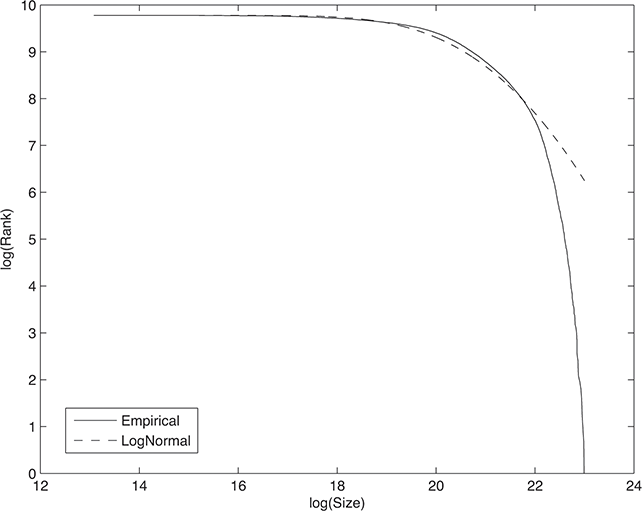

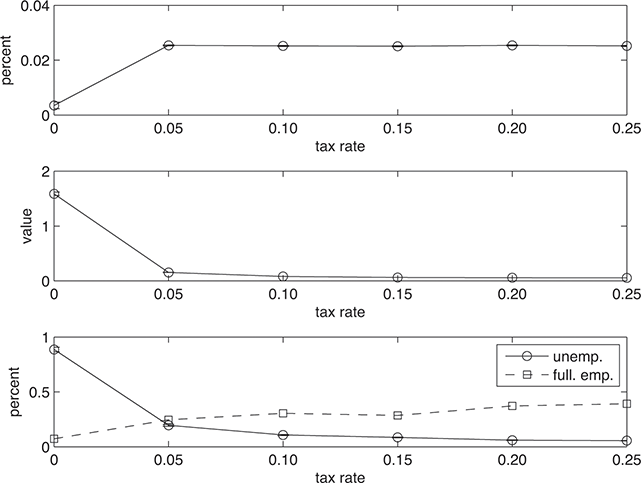

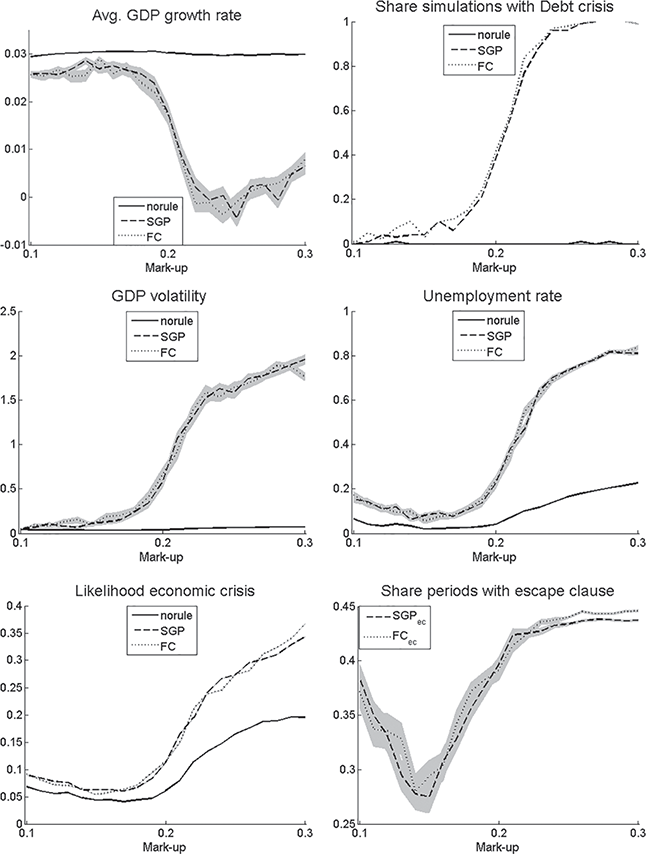

4.2 Emergence and Validation