Highlights

• What is already known: The best-performing frequentist methods for random-effects meta-analysis can be highly imprecise in small meta-analyses, and can provide biased estimates of the heterogeneity.

• What is new: We conduct a large simulation study evaluating two forms of the Jeffreys prior for meta-analysis, which correspond to the Firth bias correction to the maximum likelihood estimator.

• Potential impact for RSM readers: For small meta-analyses of binary outcomes, the Jeffreys2 prior may offer advantages over standard frequentist methods for point and interval estimation for the mean parameter.

1 Introduction

Standard random-effects meta-analysis involves estimating the heterogeneity of studies’ population effects (e.g., their standard deviation) and obtaining an inverse-variance-weighted estimate of the meta-analytic mean, in which studies’ weights depend on the estimated heterogeneity.[1] Commonly used methods to estimate the heterogeneity include semiparametric method-of-moments estimators[1–5] and parametric likelihood-based estimators.[1,6] The theoretical justification for these methods relies on asymptotics, yet in some scientific disciplines, the majority of meta-analyses include a relatively small number of studies. Meta-analyses of healthcare interventions in the Cochrane Database of Systematic Reviews include a median of only 3 studies (75th percentile: 6; 90th percentile: 10).[7] In psychology, meta-analyses published in Psychological Bulletin include a median of 12 studies, though some meta-analyses are much larger (75th percentile: 33; 90th percentile: 76).[8,9]

On the one hand, previous simulation studies indicate that even in very small meta-analyses (here defined as those with $\le 5$ studies), many existing methods provide nearly unbiased point estimates for the meta-analytic mean, termed $\mu$.[10] On the other hand, confidence intervals that are based on asymptotic normality (e.g., Wald intervals) can have less than nominal coverage in small meta-analyses ($\le 20$ studies), and coverage can decline further in very small meta-analyses.[7,11,12] Using Hartung–Knapp–Sidik–Jonkman’s (HKSJ) method to adjust standard errors[13,14] can provide better-calibrated intervals in many settings, though existing simulation studies have yielded somewhat mixed findings regarding whether these intervals consistently achieve nominal coverage.[7,11,12,15–17] Moreover, such intervals can be extremely wide for meta-analyses of typical sample sizes.[15–18] For example, even when the true heterogeneity is zero, moments estimators with HKSJ standard errors yielded 95% confidence intervals with average widths of approximately 4–5 in simulated meta-analyses of 5 studies.[18] This suggests that for a point estimate of 0.5 on the standardized mean difference scale, a typical confidence interval would be approximately $[-1.5, 2.5]$ (i.e., $0.5 \pm 2$, a width of 4), which is so wide that it might be considered uninformative. Additionally, standard point estimates for the heterogeneity can be substantially biased and imprecise in small meta-analyses.[7,11] Many existing simulation studies on heterogeneity estimation do not seem to have evaluated the coverage or width of confidence intervals for the heterogeneity[11] (but see Viechtbauer (2007)[19]).

In this paper, we investigate the frequentist performance of alternative Bayesian methods that use the invariant Jeffreys prior.[20] In general, Bayesian estimation proceeds by specifying a prior on the unknown parameters and obtaining the posterior of those parameters, given the observed data. This essentially involves updating the prior based on the likelihood of the observed data.[21] Various types of point estimates and credible intervals can then be obtained from the posterior. For an arbitrary distribution with unknown parameters $\boldsymbol{\Psi}$ and expected Fisher information $\mathcal{I}(\boldsymbol{\Psi})$, the Jeffreys prior is proportional to $\sqrt{\text{det} \; \mathcal{I}(\boldsymbol{\Psi})}$.[20] An original motivation for this prior was its invariance to transforming the parameters,[20] a property that does not hold for all priors.[22,23]ⁱ For example, letting $\tau$ denote the standard deviation of studies’ population effects, the Jeffreys prior on $(\mu, \tau)$ is the same as the Jeffreys prior on $(\mu, \tau^2)$, so the resulting posterior estimates and intervals would not depend on the analyst’s arbitrary choice of parameterization. This desirable property has led some to describe the Jeffreys prior as “noninformative,” though we agree with others’ critiques of this term.[24,25]

An interesting, underappreciated property of the Jeffreys prior is that the resulting posterior can alternatively be motivated from a solely frequentist perspective.[26] In particular, it is well known that the maximum likelihood (ML) estimate has an $O(n^{-1})$ bias, essentially due to the curvature of the score function.[26] Firth (1993)[26] showed that for exponential family distributions in their canonical parameterization, an appropriate penalty on the likelihood to correct this bias coincides with estimation under the Jeffreys prior. This is essentially because the Jeffreys prior introduces a bias in the score function that compensates for the bias due to its curvature.[26] Accordingly, the posterior mode under this prior can be viewed in frequentist terms as a bias-corrected ML estimate; consequently, the posterior mode under the Jeffreys prior has sometimes been termed the “Firth correction.” The Firth correction has demonstrated success in a number of frequentist estimation problems, and is used fairly often for logistic regression.[26–29]
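As a minimal illustration of the Firth correction (our own toy example, not one from the paper), consider a single binomial outcome with y events in n trials, parameterized by the log-odds β. Penalizing the log-likelihood by half the log Fisher information, $\tfrac{1}{2}\log\{n p (1-p)\}$, gives the penalized score $y + \tfrac{1}{2} - (n+1)p$. The Firth estimate, which is also the posterior mode of β under its Jeffreys prior, is therefore $p = (y + \tfrac{1}{2})/(n+1)$. Unlike the ML estimate $y/n$, it stays strictly inside $(0, 1)$ when $y = 0$ or $y = n$. The sketch below finds the root by bisection and compares it with this closed form. The function names and the search bounds are assumptions made for illustration.

```python
import math


def penalized_score(beta: float, y: int, n: int) -> float:
    """Derivative in beta (log-odds) of the Firth-penalized binomial log-likelihood.

    Log-likelihood: y*beta - n*log(1 + exp(beta)).
    Penalty: 0.5*log(n*p*(1 - p)), i.e. half the log Fisher information.
    """
    p = 1.0 / (1.0 + math.exp(-beta))
    return (y - n * p) + 0.5 * (1.0 - 2.0 * p)


def firth_binomial(y: int, n: int, lo: float = -30.0, hi: float = 30.0,
                   tol: float = 1e-12) -> float:
    """Firth-corrected estimate of p via bisection on the penalized score in beta.

    The penalized score equals y + 1/2 - (n + 1)p, which is strictly decreasing
    in beta, so it has a single root (assumed to lie inside [lo, hi]).
    """
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if penalized_score(mid, y, n) > 0:
            lo = mid
        else:
            hi = mid
    beta_hat = 0.5 * (lo + hi)
    return 1.0 / (1.0 + math.exp(-beta_hat))


if __name__ == "__main__":
    for y, n in [(0, 10), (3, 10), (10, 10)]:
        print(f"y={y}, n={n}: ML={y / n:.4f}, Firth={firth_binomial(y, n):.4f}, "
              f"closed form={(y + 0.5) / (n + 1):.4f}")
```

For example, with $y = 0$ and $n = 10$, the ML estimate is 0 (a log-odds of $-\infty$), whereas the Firth estimate is $0.5/11 \approx 0.0455$.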

Given the Jeffreys prior’s effectiveness as a bias-correction method in small samples, it seems plausible that using this prior in small meta-analyses might improve point and interval estimation. Bodnar et al. (2016, 2017)[15,30] derived the Jeffreys prior on the heterogeneity $\tau$ alone (i.e., holding the mean $\mu$ constant), an approach that may be optimal if $\tau$ is strictly a nuisance parameter.[25] Their simulations suggested that, along with an independent flat prior on $\mu$, the resulting credible intervals may have better frequentist coverage than existing frequentist methods.[15] We term this prior “Jeffreys1” because it is the prior with respect to a single parameter. Kosmidis et al. (2017)[31] independently derived a penalized likelihood correction that is equivalent to the single-parameter Jeffreys prior on $\mu$ alone; that is, treating $\mu$ rather than $\tau$ as a nuisance parameter. This penalization is closely related to the restricted ML (REML) estimator of $\tau$.[31]

In this paper, we consider the Jeffreys1 prior along with the two-parameter Jeffreys prior on both $\mu$ and $\tau$. To the best of our knowledge, the latter has not appeared in the published literature on meta-analysis. We consider this prior, termed “Jeffreys2,” for several reasons. First, while the mean parameter is often of primary interest in meta-analysis, the heterogeneity should generally also be estimated and reported, so it may not be optimal to treat $\tau$ as a nuisance parameter.[32] Second, in other small-sample estimation problems, multiparameter Jeffreys priors that include scale parameters (e.g., the dispersion parameter in exponential-family models) have been proposed and have good empirical properties.[26,28,33] (We return to this issue in Section 3.3.) In the context of adjusting for p-hacking in meta-analyses by meta-analyzing only a truncated part of the random-effects distribution, we recently found that a Jeffreys prior on $\mu$ and $\tau$ performed considerably better than ML,[34] whose performance is remarkably poor for truncated distributions in general.[28,35] Third, as we will discuss, the shape of the Jeffreys2 prior suggests it might provide more precise intervals than the Jeffreys1 prior. Whether Jeffreys2 credible intervals show nominal frequentist coverage, and whether point estimation for $\mu$ and $\tau$ performs well, are open questions.
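The shapes of the two priors can be sketched numerically under the normal marginal model $\widehat{\theta}_i \sim N(\mu, \tau^2 + \sigma_i^2)$ of Section 2.2, with $S_i(\tau)^2 = \tau^2 + \sigma_i^2$. The sketch takes the expected Fisher information to be $\mathcal{I}_{\mu\mu} = \sum_i 1/S_i(\tau)^2$, $\mathcal{I}_{\tau\tau} = \sum_i 2\tau^2/S_i(\tau)^4$, and $\mathcal{I}_{\mu\tau} = 0$. This is a standard calculation for independent normal observations, stated here as an assumption of the sketch rather than reproduced from the derivation in Section 3. Under these expressions, Jeffreys1 is proportional to $\sqrt{\mathcal{I}_{\tau\tau}}$ and Jeffreys2 is proportional to $\sqrt{\mathcal{I}_{\mu\mu}\,\mathcal{I}_{\tau\tau}}$, and neither depends on $\mu$. The function names and example variances are hypothetical.

```python
import math
from typing import Sequence, Tuple


def fisher_info(tau: float, sigma2: Sequence[float]) -> Tuple[float, float]:
    """Diagonal of the expected Fisher information for (mu, tau).

    Assumes theta_hat_i ~ N(mu, tau^2 + sigma_i^2) independently; the
    off-diagonal (mu, tau) entry is zero for this model.
    """
    s2 = [tau ** 2 + v for v in sigma2]          # marginal variances S_i^2
    i_mu = sum(1.0 / s for s in s2)
    i_tau = sum(2.0 * tau ** 2 / s ** 2 for s in s2)
    return i_mu, i_tau


def jeffreys1(tau: float, sigma2: Sequence[float]) -> float:
    """Unnormalized Jeffreys prior on tau alone (mu held fixed)."""
    _, i_tau = fisher_info(tau, sigma2)
    return math.sqrt(i_tau)


def jeffreys2(tau: float, sigma2: Sequence[float]) -> float:
    """Unnormalized two-parameter Jeffreys prior on (mu, tau)."""
    i_mu, i_tau = fisher_info(tau, sigma2)
    return math.sqrt(i_mu * i_tau)


if __name__ == "__main__":
    sigma2 = [0.04, 0.09, 0.16]  # hypothetical within-study variances
    for tau in [0.0, 0.1, 0.3, 1.0, 3.0]:
        print(f"tau={tau:3.1f}  Jeffreys1={jeffreys1(tau, sigma2):.3f}  "
              f"Jeffreys2={jeffreys2(tau, sigma2):.3f}")
```

Under these assumptions, both priors vanish at $\tau = 0$. For large $\tau$, the Jeffreys1 prior decays roughly like $1/\tau$, whereas the Jeffreys2 prior decays roughly like $1/\tau^2$, so Jeffreys2 places relatively less mass on very large heterogeneity values. This offers one heuristic reading of why its shape might yield narrower intervals.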

Previous simulation studies of Jeffreys priors in meta-analysis have provided promising preliminary results, but do have limitations. Those simulations investigated only the Jeffreys1 prior, not Jeffreys2, and considered point and interval estimation for $\mu$ but not $\tau$.[15] In this paper, we present a simulation study comparing the frequentist properties of point and interval estimation for both $\mu$ and $\tau$ under the Jeffreys1 and Jeffreys2 priors, as well as several of the best-performing frequentist methods. Using a simulation design that closely paralleled a recent, extensive simulation study by Langan et al. (2019),[7] we substantially expanded the range of comparison methods and simulation scenarios used in previous simulation studies of the Jeffreys1 prior. Previous simulations regarding the Jeffreys1 prior considered only posterior means for point estimation,[15] whereas the aforementioned bias-correction properties specifically apply to posterior modes. This may be especially relevant for point estimation of $\tau$, whose posterior is highly asymmetric. We therefore consider three types of Bayesian point estimates (the posterior mode, mean, and median) as well as two types of credible intervals (central and shortest). Our simulations include the best-performing methods in Langan et al.’s (2019)[7] simulation study, along with several other methods whose theoretical properties suggest they might also perform well, such as exact intervals[18] and intervals based on the profile likelihood.[6]
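The difference between these summaries matters most for a right-skewed posterior such as that of $\tau$. The code below is a rough sketch, not the paper's implementation. It computes the mean, the median, a crude histogram-based mode, and both 95% interval types from a vector of posterior draws. The central interval takes the 2.5th and 97.5th percentiles, whereas the shortest interval is the narrowest window that contains 95% of the sorted draws. As a stand-in for posterior draws of $\tau$, the example uses deterministic exponential quantiles; that choice, the binning rule, and all function names are assumptions made for illustration.

```python
import math
import statistics
from typing import List, Tuple


def central_interval(draws: List[float], level: float = 0.95) -> Tuple[float, float]:
    """Equal-tailed interval from empirical quantiles (conservative index rounding)."""
    xs = sorted(draws)
    n = len(xs)
    alpha = 1.0 - level
    lo = xs[int(math.floor(alpha / 2 * (n - 1)))]
    hi = xs[int(math.ceil((1 - alpha / 2) * (n - 1)))]
    return lo, hi


def shortest_interval(draws: List[float], level: float = 0.95) -> Tuple[float, float]:
    """Narrowest window of sorted draws containing a `level` share of them."""
    xs = sorted(draws)
    n = len(xs)
    m = int(math.ceil(level * n))  # number of draws the window must contain
    best = min(range(n - m + 1), key=lambda j: xs[j + m - 1] - xs[j])
    return xs[best], xs[best + m - 1]


def histogram_mode(draws: List[float], bins: int = 50) -> float:
    """Crude mode estimate: midpoint of the most populated histogram bin."""
    lo, hi = min(draws), max(draws)
    width = (hi - lo) / bins
    counts = [0] * bins
    for x in draws:
        counts[min(int((x - lo) / width), bins - 1)] += 1
    k = max(range(bins), key=counts.__getitem__)
    return lo + (k + 0.5) * width


if __name__ == "__main__":
    n = 10_000
    # Deterministic stand-in for right-skewed posterior draws: Exp(1) quantiles.
    draws = [-math.log(1 - (j + 0.5) / n) for j in range(n)]
    print("mean  ", round(statistics.fmean(draws), 3))
    print("median", round(statistics.median(draws), 3))
    print("mode  ", round(histogram_mode(draws), 3))
    print("central 95%", central_interval(draws))
    print("shortest 95%", shortest_interval(draws))
```

For a right-skewed distribution like this one, the mode lies below the median, which lies below the mean. The shortest interval also shifts toward zero and is narrower than the central interval.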

This paper is organized as follows. We briefly review existing moments and likelihood-based estimators for random-effects meta-analysis (Section 2), all of which have been covered in more detail elsewhere.[6,18,36] We also briefly review existing simulation results regarding these methods (Section 2.4). We review the established form of the Jeffreys1 prior[15] and derive the form of the Jeffreys2 prior; we then discuss posterior estimation under both priors (Section 3). We present the simulation study (Section 4) and a brief applied example (Section 5), and conclude with a general discussion.

2 Existing frequentist methods

2.1 Method-of-moments estimators

Moments estimators for meta-analysis are semiparametric; they involve specifying only the first two moments of the distribution of population effects, namely $\mu$ and $\tau^2$. Because these methods do not require specifying the higher moments, they do not require assuming that population effects are normal. Specifically, consider k studies whose population effects, $\mu_i$, have expectation $\mu$ and variance $\tau^2$. These two moments are the usual meta-analytic estimands of interest. Let $\widehat{\theta}_i$ and $\sigma_i$ respectively denote the point estimate and standard error of the ith study, such that $\widehat{\theta}_i \sim N \left( \mu_i, \sigma_i^2 \right)$ holds approximately. The within-study standard errors $\sigma_i$ are generally treated as fixed and known.

For a given estimate of the heterogeneity variance, $\widehat{\tau}^2$, the estimated marginal variance of $\widehat{\theta}_i$ is $\widehat{\tau}^2 + \sigma_i^2$. The uniformly minimum variance unbiased estimator (UMVUE) of $\mu$ arises from weighting studies by the inverse of their marginal variances (strictly, when these variances are known rather than estimated);[6] in practice, the weights are the inverse estimated marginal variances, denoted $w_i = 1 / (\widehat{\tau}^2 + \sigma_i^2)$:

$$ \begin{align*} \widehat{\mu} &= \frac{\sum_{i=1}^k w_i \widehat{\theta}_i}{\sum_{i=1}^k w_i}. \end{align*} $$
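Once $\widehat{\tau}^2$ is fixed, the estimator above is a one-line computation. The sketch below (function name and example data hypothetical) shows how the choice of $\widehat{\tau}^2$ changes the weights. With $\widehat{\tau}^2 = 0$, it reduces to the common-effect inverse-variance mean. As $\widehat{\tau}^2$ grows, the weights become nearly equal, so $\widehat{\mu}$ approaches the unweighted mean of the $\widehat{\theta}_i$.

```python
from typing import Sequence


def pooled_mean(theta: Sequence[float], sigma2: Sequence[float], tau2: float) -> float:
    """Inverse-variance-weighted mean with weights w_i = 1 / (tau2 + sigma_i^2)."""
    w = [1.0 / (tau2 + v) for v in sigma2]
    return sum(wi * t for wi, t in zip(w, theta)) / sum(w)


if __name__ == "__main__":
    theta = [0.10, 0.30, 0.90]   # hypothetical study estimates
    sigma2 = [0.01, 0.04, 0.25]  # hypothetical within-study variances
    for tau2 in [0.0, 0.05, 1e6]:
        print(tau2, round(pooled_mean(theta, sigma2, tau2), 4))
```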

The various moments estimators are distinguished by their estimators for $\tau^2$, and hence the form of the weights $w_i$. Detailed reviews[7,36,37] and original papers on these approaches are available, so here we summarize briefly. Moments estimators for $\tau^2$ are based on the generalized Q-statistic:

$$ \begin{align} Q &= \sum_{i=1}^k a_i (\widehat{\theta}_i - \widehat{\mu})^2, \end{align} $$

where the form of the coefficients, $a_i$, differs across moments estimators. In each case, $\tau^2$ is estimated by equating Q to its expected value under the model and solving for $\tau^2$, truncating negative solutions at zero. For example, the traditional DerSimonian–Laird estimator (DL)[1] sets $a_i = 1 / \sigma_i^2$. The two-step DL estimator (DL2)[2] instead sets $a_i = 1 / (\widehat{\tau}^2_{DL} + \sigma_i^2)$, where $\widehat{\tau}^2_{DL}$ is an initial estimate obtained using the DL estimator. The Paule–Mandel (PM)[3,4] estimator can be viewed as a limiting case of DL2, involving iteration over the estimates $\widehat{\mu}$ and $\widehat{\tau}^2$ until convergence. This estimator is also equivalent to the empirical Bayes estimator.[5] In general terms, empirical Bayes estimation uses the observed data to estimate the parameters of the Bayesian prior, rather than specifying the prior independently of the data.[21] In the context of meta-analysis, the empirical Bayes estimator essentially estimates the distribution of population effects by their posterior means, with the prior determined empirically.[5]
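The three estimators differ only in their coefficients $a_i$, so they can share one routine. The sketch below assumes the usual moment-matching step. With $\widehat{\mu}$ in Q weighted by the $a_i$, it sets Q equal to $E[Q] = \sum_i a_i S_i^2 - \sum_i a_i^2 S_i^2 / \sum_i a_i$, where $S_i^2 = \tau^2 + \sigma_i^2$, and truncates at zero. PM is found here by bisection on $\tau^2$ rather than by alternating updates; both approaches target the same solution, $Q(\tau^2) = k - 1$. Function names and tolerances are our own choices.

```python
from typing import Sequence


def _q_stat(theta: Sequence[float], a: Sequence[float]) -> float:
    """Generalized Q with coefficients a_i; mu_hat is weighted by the a_i."""
    mu_a = sum(ai * t for ai, t in zip(a, theta)) / sum(a)
    return sum(ai * (t - mu_a) ** 2 for ai, t in zip(a, theta))


def moment_tau2(theta: Sequence[float], sigma2: Sequence[float],
                a: Sequence[float]) -> float:
    """Solve Q = E[Q] for tau^2 given fixed coefficients a_i; truncate at 0."""
    sa = sum(a)
    sa2 = sum(ai ** 2 for ai in a)
    offset = (sum(ai * v for ai, v in zip(a, sigma2))
              - sum(ai ** 2 * v for ai, v in zip(a, sigma2)) / sa)
    slope = sa - sa2 / sa
    return max(0.0, (_q_stat(theta, a) - offset) / slope)


def tau2_dl(theta: Sequence[float], sigma2: Sequence[float]) -> float:
    """DerSimonian-Laird: a_i = 1 / sigma_i^2."""
    return moment_tau2(theta, sigma2, [1.0 / v for v in sigma2])


def tau2_dl2(theta: Sequence[float], sigma2: Sequence[float]) -> float:
    """Two-step DL: a_i = 1 / (tau2_DL + sigma_i^2)."""
    t0 = tau2_dl(theta, sigma2)
    return moment_tau2(theta, sigma2, [1.0 / (t0 + v) for v in sigma2])


def tau2_pm(theta: Sequence[float], sigma2: Sequence[float],
            tol: float = 1e-10) -> float:
    """Paule-Mandel: tau^2 with Q(tau^2) = k - 1, where a_i = 1/(tau^2 + sigma_i^2)."""
    k = len(theta)

    def excess(t2: float) -> float:
        return _q_stat(theta, [1.0 / (t2 + v) for v in sigma2]) - (k - 1)

    if excess(0.0) <= 0:
        return 0.0
    lo, hi = 0.0, 1.0
    while excess(hi) > 0:  # Q decreases in tau^2, so expand until the sign flips
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if excess(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    theta = [0.0, 1.0, 2.0, 3.0]  # hypothetical estimates
    sigma2 = [0.1] * 4            # equal within-study variances
    print(tau2_dl(theta, sigma2), tau2_dl2(theta, sigma2), tau2_pm(theta, sigma2))
```

With equal within-study variances $v$, all three estimators reduce to the sample variance of the $\widehat{\theta}_i$ minus $v$. For the example data, each therefore gives $5/3 - 0.1 \approx 1.567$.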

2.2 Likelihood-based estimators

In contrast to moments estimators, commonly used likelihood-based estimators assume that the population effects, $\mu_i$, arise independently from the distribution $\mu_i \sim N \left( \mu, \tau^2 \right)$. Thus, the marginal distribution of studies’ point estimates, $\widehat{\theta}_i$, is $\widehat{\theta}_i \sim N \left( \mu, \tau^2 + \sigma_i^2 \right)$. We denote the k-vector of point estimates as $\widehat{\boldsymbol{\theta}}$. Letting $S_i(\tau) = \sqrt{\tau^2 + \sigma_i^2}$ be the true marginal standard deviation of the ith study, the joint likelihood is:

$$ \begin{align*} p(\widehat{\boldsymbol{\theta}} \mid \mu, \tau) &= \prod_{i=1}^k \frac{1}{\sqrt{2 \pi} \, S_i(\tau)} \exp \left\{ - \frac{(\widehat{\theta}_i - \mu)^2}{2 S_i(\tau)^2} \right\}. \end{align*} $$

The standard ML estimator for $\tau$ is obtained as usual by solving $\frac{\partial}{\partial \tau} \; \log p(\widehat{\boldsymbol{\theta}} \mid \mu, \tau) = 0$, whose solution depends on $\mu$. In practice, this equation is solved jointly with the score equation for $\mu$, whose solution given $\tau$ is the inverse-variance-weighted mean with weights $1/S_i(\tau)^2$.[6] Since this estimator does not take into account the loss in degrees of freedom due to the additional estimation of $\mu$ itself, the resulting estimate is often negatively biased.[6] This issue motivates REML estimation, which can improve upon ML estimation by transforming the log-likelihood to remove the parameter $\mu$.[6]
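To illustrate the negative bias of ML relative to REML, the sketch below profiles out $\mu$ by using the weighted mean given $\tau^2$. It then maximizes two functions over $\tau^2$: the profile log-likelihood, and the restricted log-likelihood, which adds $-\tfrac{1}{2}\log \sum_i 1/S_i(\tau)^2$ to the profile log-likelihood. The restricted form is a standard result, stated here as an assumption of the sketch rather than quoted from the paper. For simplicity, the maximization uses a coarse grid followed by golden-section refinement. The function names and the search upper bound are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def _profile_parts(tau2: float, theta: Sequence[float],
                   sigma2: Sequence[float]) -> Tuple[List[float], List[float], float]:
    s2 = [tau2 + v for v in sigma2]
    w = [1.0 / s for s in s2]
    mu = sum(wi * t for wi, t in zip(w, theta)) / sum(w)  # mu_hat given tau^2
    rss = sum(wi * (t - mu) ** 2 for wi, t in zip(w, theta))
    return s2, w, rss


def loglik_ml(tau2: float, theta: Sequence[float], sigma2: Sequence[float]) -> float:
    """Profile log-likelihood for tau^2 (mu at its ML value), up to a constant."""
    s2, _, rss = _profile_parts(tau2, theta, sigma2)
    return -0.5 * (sum(math.log(s) for s in s2) + rss)


def loglik_reml(tau2: float, theta: Sequence[float], sigma2: Sequence[float]) -> float:
    """Restricted log-likelihood for tau^2, up to a constant."""
    _, w, _ = _profile_parts(tau2, theta, sigma2)
    return loglik_ml(tau2, theta, sigma2) - 0.5 * math.log(sum(w))


def maximize(f: Callable[[float], float], upper: float, tol: float = 1e-9) -> float:
    """Grid search on [0, upper], then golden-section refinement of the bracket."""
    grid = [upper * j / 200 for j in range(201)]
    j = max(range(len(grid)), key=lambda i: f(grid[i]))
    lo, hi = grid[max(j - 1, 0)], grid[min(j + 1, 200)]
    g = (math.sqrt(5) - 1) / 2
    while hi - lo > tol:
        a, b = hi - g * (hi - lo), lo + g * (hi - lo)
        if f(a) < f(b):
            lo = a
        else:
            hi = b
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    theta = [0.0, 1.0, 2.0, 3.0]  # hypothetical estimates
    sigma2 = [0.1] * 4
    print("ML  ", maximize(lambda t2: loglik_ml(t2, theta, sigma2), upper=10.0))
    print("REML", maximize(lambda t2: loglik_reml(t2, theta, sigma2), upper=10.0))
```

With equal within-study variances of 0.1 and $\widehat{\boldsymbol{\theta}} = (0, 1, 2, 3)$, ML gives $5/4 - 0.1 = 1.15$, whereas REML gives $5/3 - 0.1 \approx 1.567$. ML divides the sum of squares by $k$ rather than $k - 1$, which is the degrees-of-freedom loss described above.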

2.3 Interval estimation

A simple Wald confidence interval can be obtained by assuming $\widehat{\mu}$ is normally distributed, which holds asymptotically in k by standard ML properties. If the weights $w_i$ are treated as known rather than estimated, we have $\widehat{\text{Var}}(\widehat{\mu}) = 1 / \sum_{i=1}^k w_i$. A Wald 95% confidence interval is:

$$ \begin{align*} \widehat{\mu} \pm c \sqrt{\widehat{\text{Var}}(\widehat{\mu})}, \end{align*} $$

where $c = \Phi^{-1}(0.975) \approx 1.96$ is the critical value of the standard normal distribution. However, Wald intervals exhibit substantial under-coverage for small meta-analyses, both because the normal approximation holds only asymptotically and because the approximation $\widehat{\text{Var}}(\widehat{\mu}) = 1 / \sum_{i=1}^k w_i$ does not account for the estimation of $\tau^2$.[7,11,12] Wald intervals can also be constructed for $\tau$, but they exhibit similarly poor performance.[19] We therefore do not further discuss Wald intervals, focusing instead on the better-performing alternatives discussed below.
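The Wald interval takes only a few lines to compute. The example below takes a fixed $\widehat{\tau}^2$ (for instance, from one of the moments estimators) and obtains $\Phi^{-1}(0.975)$ from Python's `statistics.NormalDist`. The function name and example data are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Sequence, Tuple


def wald_ci(theta: Sequence[float], sigma2: Sequence[float], tau2: float,
            level: float = 0.95) -> Tuple[float, float, float]:
    """Return (mu_hat, lower, upper), treating weights 1/(tau2 + sigma_i^2) as known."""
    w = [1.0 / (tau2 + v) for v in sigma2]
    mu_hat = sum(wi * t for wi, t in zip(w, theta)) / sum(w)
    se = math.sqrt(1.0 / sum(w))
    c = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level = 0.95
    return mu_hat, mu_hat - c * se, mu_hat + c * se


if __name__ == "__main__":
    theta = [0.0, 1.0, 2.0, 3.0]  # hypothetical estimates
    sigma2 = [0.1] * 4
    print(wald_ci(theta, sigma2, tau2=5 / 3 - 0.1))
```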

Regarding interval estimation for $\mu$, the alternative HKSJ interval (sometimes called the “Knapp–Hartung” interval) addresses the limitations of the Wald interval.Reference Sidik and Jonkman 13 , Reference Knapp and Hartung 14 This method more flexibly assumes that the standardized estimate $(\widehat{\mu} - \mu) / \sqrt{\widehat{\text{Var}}(\widehat{\mu})}$ follows a t distribution with $k-1$ degrees of freedom. It additionally rescales $\widehat{\text{Var}}(\widehat{\mu})$ to account for the estimation of $\tau^2$ in the weights $w_i$:

$$ \begin{align*} \widehat{\text{Var}}(\widehat{\mu}) = \frac{\sum_{i=1}^k w_i (\widehat{\theta}_i - \widehat{\mu})^2 }{(k-1) \sum_{i=1}^k w_i }. \end{align*} $$
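The HKSJ variance above can be sketched in standard-library Python as follows. The standard library has no t quantile function, so the caller supplies `t_crit`, the 0.975 quantile of the t distribution with $k-1$ degrees of freedom. The function name and toy inputs are hypothetical.

```python
import math


def hksj_interval(theta, sigma, tau2, t_crit):
    """HKSJ interval for mu, given a heterogeneity estimate tau2.

    t_crit is the 0.975 quantile of a t distribution with k - 1 degrees of
    freedom; it is supplied by the caller (e.g., from a table or a library).
    """
    k = len(theta)
    w = [1.0 / (s**2 + tau2) for s in sigma]
    sum_w = sum(w)
    mu_hat = sum(wi * ti for wi, ti in zip(w, theta)) / sum_w
    # Rescaled variance: weighted residual sum of squares over (k - 1) * sum(w)
    var_hksj = sum(wi * (ti - mu_hat) ** 2 for wi, ti in zip(w, theta)) / ((k - 1) * sum_w)
    half_width = t_crit * math.sqrt(var_hksj)
    return mu_hat, var_hksj, (mu_hat - half_width, mu_hat + half_width)


# Hypothetical toy inputs; 4.302653 is the 0.975 quantile of t with 2 df (k = 3)
mu_hat, var_hksj, ci = hksj_interval([0.2, 0.5, -0.1], [0.3, 0.4, 0.2], 0.05, 4.302653)
```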

For $\tau$, improved intervals can be constructed using the chi-square distribution of the Q statistic given in Eq. (1).Reference Viechtbauer 19 These “Q-profile” intervals substantially outperform Wald intervals.Reference Viechtbauer 19 For both $\mu$ and $\tau$, ML profile intervals can also be constructed in the usual way, by inverting the profile likelihood ratio test.Reference Harville 6
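Here is a minimal sketch of the Q-profile interval for $\tau^2$. It assumes the generalized form $Q(\tau^2) = \sum_i (\widehat{\theta}_i - \widehat{\mu})^2 / (\sigma_i^2 + \tau^2)$, which decreases monotonically in $\tau^2$, so each interval limit can be found by bisection and truncated at zero. The chi-square quantiles are passed in rather than computed. Function names and toy inputs are hypothetical.

```python
import math


def generalized_q(theta, sigma, tau2):
    """Q(tau2) = sum_i w_i (theta_i - mu_hat)^2 with w_i = 1 / (sigma_i^2 + tau2)."""
    w = [1.0 / (s**2 + tau2) for s in sigma]
    mu_hat = sum(wi * ti for wi, ti in zip(w, theta)) / sum(w)
    return sum(wi * (ti - mu_hat) ** 2 for wi, ti in zip(w, theta))


def solve_tau2(theta, sigma, target, tol=1e-10):
    """Smallest tau2 >= 0 with Q(tau2) <= target, using that Q decreases in tau2."""
    if generalized_q(theta, sigma, 0.0) <= target:
        return 0.0                                    # truncate at the boundary
    lo, hi = 0.0, 1.0
    while generalized_q(theta, sigma, hi) > target:   # bracket the root
        hi *= 2.0
    while hi - lo > tol:                              # bisection
        mid = 0.5 * (lo + hi)
        if generalized_q(theta, sigma, mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def q_profile_interval(theta, sigma, chi2_025, chi2_975):
    """95% Q-profile interval for tau^2; chi-square quantiles (k - 1 df) are inputs."""
    return solve_tau2(theta, sigma, chi2_975), solve_tau2(theta, sigma, chi2_025)


# Hypothetical toy inputs with k = 3, so k - 1 = 2 df. A chi-square with 2 df is
# exponential with mean 2, so its p-quantile is -2 * log(1 - p).
theta, sigma = [0.2, 0.9, -0.4], [0.3, 0.4, 0.2]
chi2_025, chi2_975 = -2 * math.log(0.975), -2 * math.log(0.025)
tau2_lower, tau2_upper = q_profile_interval(theta, sigma, chi2_025, chi2_975)
```

Taking square roots of both limits gives the corresponding interval for $\tau$.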

An interesting, relatively new approach provides exact rather than asymptotic intervals and is theoretically guaranteed to provide at least nominal coverage, under the assumption of normal population effects.Reference Michael, Thornton and Xie 18 This method essentially involves inverting exact tests. Other parametric methods provide finite-sample corrections to the likelihood ratio test statistic; these include Skovgaard’s second-order correction and Bartlett’s correction.Reference Huizenga, Visser and Dolan 38 – Reference Guolo and Varin 40 These methods can improve upon basic likelihood methods for hypothesis testing,Reference Guolo and Varin 40 but Skovgaard’s second-order correction was not designed for interval estimation and can be numerically unstable in this context.Reference Kosmidis, Guolo and Varin 31 Interval estimation with Bartlett’s correction is possible,Reference Noma 41 but is not implemented in existing software (I. Visser, personal communication, 8 July 2024).Reference Hilde and Ingmar 42 , Reference Veroniki, Jackson and Bender 43 Because our focus is on interval estimation rather than testing, our simulations do not include Skovgaard’s or Bartlett’s corrections.

Finally, various parametric or nonparametric resampling methods can be used to obtain bootstrapped confidence intervals.Reference Viechtbauer 19 , Reference Veroniki, Jackson and Bender 43 , Reference Van Den Noortgate and Onghena 44 Nonparametric resampling can be conducted by resampling studies (i.e., rows of the meta-analytic dataset) with replacement. One can then obtain simple percentile bootstrap intervals or bias-corrected and accelerated (BCa) intervals, among many other types of bootstrap intervals.Reference Efron 45 , Reference Carpenter and Bithell 46 The BCa interval corrects for bias and skewness in the bootstrapped sampling distribution, which we speculate could be helpful when estimating the sampling distribution of $\widehat{\tau}$. The BCa bootstrap has performed relatively well for certain meta-analytic estimators that are functions of $\widehat{\tau}$.Reference Mathur and VanderWeele 47 However, bootstrapping is an asymptotic procedure whose finite-sample performance typically must be assessed through simulations.
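The sketch below illustrates nonparametric resampling in outline. It draws whole studies with replacement, re-estimates $\tau$ in each resample, and reports a percentile interval. It uses the DerSimonian–Laird estimator purely for illustration and a simple order-statistic rule for the percentiles. BCa intervals (for example, via the R package boot) involve additional bias and acceleration corrections that are not shown. Function names and toy inputs are hypothetical.

```python
import math
import random


def dl_tau2(theta, sigma):
    """DerSimonian-Laird method-of-moments estimate of tau^2 (truncated at 0)."""
    k = len(theta)
    w = [1.0 / s**2 for s in sigma]
    sum_w = sum(w)
    mu_fixed = sum(wi * ti for wi, ti in zip(w, theta)) / sum_w
    q = sum(wi * (ti - mu_fixed) ** 2 for wi, ti in zip(w, theta))
    scale = sum_w - sum(wi**2 for wi in w) / sum_w
    return max(0.0, (q - (k - 1)) / scale)


def percentile_bootstrap_tau(theta, sigma, n_boot=2000, level=0.95, seed=1):
    """Nonparametric percentile bootstrap interval for tau, resampling whole studies."""
    rng = random.Random(seed)
    k = len(theta)
    reps = []
    for _ in range(n_boot):
        idx = [rng.randrange(k) for _ in range(k)]    # studies drawn with replacement
        tau2_b = dl_tau2([theta[i] for i in idx], [sigma[i] for i in idx])
        reps.append(math.sqrt(tau2_b))
    reps.sort()
    alpha = 1.0 - level
    lower = reps[math.floor(alpha / 2 * (n_boot - 1))]
    upper = reps[math.ceil((1 - alpha / 2) * (n_boot - 1))]
    return lower, upper


# Hypothetical toy inputs (not data from the paper)
theta = [0.1, 0.6, -0.3, 0.4, 0.0, 0.8]
sigma = [0.2, 0.25, 0.3, 0.2, 0.15, 0.35]
tau_hat = math.sqrt(dl_tau2(theta, sigma))
tau_lower, tau_upper = percentile_bootstrap_tau(theta, sigma)
```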

2.4 Existing simulations comparing these methods

Langan et al. (2017)Reference Langan, Higgins and Simmonds 11 provide an excellent systematic review of simulation studies for different heterogeneity estimators.Reference Langan, Higgins and Jackson 7 Briefly, the DL estimator was negatively biased for $\tau$ when heterogeneity was moderate to high, and the PM estimator was typically less biased.Reference Langan, Higgins and Simmonds 11 The reviewed studies do not appear to have assessed interval estimation for $\tau$. Based on their own, more extensive simulation study, Langan et al. (2019)Reference Langan, Higgins and Jackson 7 generally recommend REML, PM, or DL2 for heterogeneity estimation, along with HKSJ confidence intervals for $\mu$; however, they recommend caution in interpreting heterogeneity estimates in small meta-analyses.

Langan et al.’s (2019)Reference Langan, Higgins and Jackson 7 simulation study did not assess intervals based on the profile likelihood, bootstrapping, or the exact method; the latter was developed only recently. Regarding profile intervals, recommendations in the literature are inconsistent. A prominent paper stated that “the profile likelihood is a good method for computing confidence intervals”.Reference Cornell, Mulrow and Localio 48 One simulation study seemed to support this recommendation, finding that when the heterogeneity is greater than zero, profile likelihood intervals showed the closest to nominal coverage.Reference Kontopantelis and Reeves 10 On the other hand, another simulation study suggested that profile intervals often exhibited under-coverage for meta-analyses of only 5 studies.Reference Guolo 39 The originators of the exact method provide simulations suggesting that the resulting intervals are not substantially wider than those of existing methods, despite the method’s theoretical guarantee of at least nominal coverage.Reference Michael, Thornton and Xie 18 While our simulation study is primarily motivated by investigating the Jeffreys methods, a secondary contribution is to more extensively evaluate profile, bootstrap, and exact intervals. We now turn to establishing the theory for the Jeffreys1 and Jeffreys2 priors.

3 Bayesian methods using Jeffreys priors

3.1 The Jeffreys priors

Under the assumption of normal population effects, Bodnar et al. (2017)Reference Bodnar, Link and Arendacká 15 showed that the improper Jeffreys1 prior is:

$$ \begin{align*} p \left( \mu, \tau \right) \propto \tau \sqrt{\sum_{i=1}^k \left(S_i(\tau)\right)^{-4}} \end{align*} $$

where, again, $S_i(\tau) = \sqrt{\tau^2 + \sigma_i^2}$. Since this prior does not depend on $\mu$, it can be expressed as the product of independent priors on $\mu$ and $\tau$, where the prior on $\mu$ is uniform:

$$ \begin{align} p \left( \mu, \tau \right) &\propto p(\mu) \; p(\tau), \text{ where } p(\mu) \propto 1 \text{ and } p(\tau) \propto \tau \sqrt{\sum_{i=1}^k \left(S_i(\tau)\right)^{-4}}. \end{align} $$

If $\mu$ is treated as the only parameter of interest and $\tau$ is considered a nuisance parameter, then the Jeffreys1 prior also coincides with the Berger–Bernardo reference prior.Reference Bodnar, Link and Elster 30 In general, the Berger–Bernardo prior for a given distribution is designed to be maximally “noninformative” in the sense of minimizing the amount of information provided by the prior and maximizing the amount of information provided by the data.Reference Bodnar, Link and Elster 30 , Reference Berger and Bernardo 49 Specifically, this prior maximizes the expected Kullback–Leibler divergence between the prior and the posterior.Reference Berger and Bernardo 49

Regarding the Jeffreys2 prior, the joint likelihood in Eq. (2) implies the following expected second derivatives of the log-likelihood, whose negatives are the entries of the expected Fisher information matrix:

$$ \begin{align*} k_{\mu\mu} := E \Bigg[ \frac{\partial^2 \ell}{\partial\mu^2} \Bigg] = -\sum_{i=1}^k \left(S_i(\tau)\right)^{-2}, \; \; \; k_{\tau \tau} := E \Bigg[ \frac{\partial^2 \ell}{\partial \tau^2} \Bigg] = -2 \tau^2 \sum_{i=1}^k \left(S_i(\tau)\right)^{-4}, \; \; \; k_{\mu \tau} := E \Bigg[ \frac{\partial^2 \ell}{\partial\mu\partial \tau} \Bigg] = 0 \end{align*} $$

where $\ell$ is the log-likelihood function. Therefore, the Jeffreys2 prior is $p(\mu, \tau) \propto \sqrt{k_{\mu\mu} k_{\tau\tau}}$. This result is straightforward to show directly and can alternatively be viewed as a simple special case of the prior given in Mathur (2024).Reference Mathur 34 , Footnote ii This yields the improper two-parameter prior:

$$ \begin{align*} p \left( \mu, \tau \right) &\propto \tau \sqrt{\left( \sum_{i=1}^k \left(S_i(\tau)\right)^{-2} \right) \left( \sum_{i=1}^k \left(S_i(\tau)\right)^{-4} \right)}. \end{align*} $$
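The step from these expected second derivatives to the prior can be written out explicitly. Because $k_{\mu\tau} = 0$, the determinant of the Fisher information matrix reduces to the product of its diagonal entries. The constant $\sqrt{2}$ is then absorbed into the proportionality. This short worked derivation uses only quantities defined above:

$$ \begin{align*} \det \begin{bmatrix} -k_{\mu\mu} & -k_{\mu\tau} \\ -k_{\mu\tau} & -k_{\tau\tau} \end{bmatrix} &= k_{\mu\mu} k_{\tau\tau} - k_{\mu\tau}^2 = 2 \tau^2 \left( \sum_{i=1}^k \left(S_i(\tau)\right)^{-2} \right) \left( \sum_{i=1}^k \left(S_i(\tau)\right)^{-4} \right), \\ \sqrt{k_{\mu\mu} k_{\tau\tau}} &= \sqrt{2} \, \tau \sqrt{\left( \sum_{i=1}^k \left(S_i(\tau)\right)^{-2} \right) \left( \sum_{i=1}^k \left(S_i(\tau)\right)^{-4} \right)} \quad (\tau \ge 0). \end{align*} $$

Applying the same calculation to the $\tau$ entry alone gives $\sqrt{-k_{\tau\tau}} = \sqrt{2} \, \tau \sqrt{\sum_{i=1}^k \left(S_i(\tau)\right)^{-4}}$, which recovers the Jeffreys1 prior.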

Like the Jeffreys1 prior, the Jeffreys2 prior can be expressed as:

$$ \begin{align} p \left( \mu, \tau \right) &\propto p(\mu) \; p(\tau), \text{ where } p(\mu) \propto 1 \text{ and } p(\tau) \propto \tau \sqrt{\left( \sum_{i=1}^k \left(S_i(\tau)\right)^{-2} \right) \left( \sum_{i=1}^k \left(S_i(\tau)\right)^{-4} \right)}. \end{align} $$
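Both priors on $\tau$ are easy to evaluate on the log scale. The sketch below, in standard-library Python with hypothetical function names, drops constant factors because they do not affect the shape. It then locates each prior's maximum by grid search, like the points marked in Figures 1 and 2. It uses the standard errors of the all-cause death meta-analysis given in the caption of Figure 2. The grid limits are arbitrary choices.

```python
import math


def log_jeffreys1(tau, sigma):
    """log p(tau) under the Jeffreys1 prior, up to an additive constant (tau > 0)."""
    s2 = [tau**2 + s**2 for s in sigma]                 # S_i(tau)^2
    return math.log(tau) + 0.5 * math.log(sum(v**-2 for v in s2))


def log_jeffreys2(tau, sigma):
    """log p(tau) under the Jeffreys2 prior, up to an additive constant (tau > 0)."""
    s2 = [tau**2 + s**2 for s in sigma]
    return (math.log(tau)
            + 0.5 * math.log(sum(1.0 / v for v in s2))  # sum of S_i^-2
            + 0.5 * math.log(sum(v**-2 for v in s2)))   # sum of S_i^-4


def prior_mode(log_prior, sigma, tau_max=10.0, n=10000):
    """Grid search for the tau that maximizes an (improper) prior density."""
    grid = [(j + 1) * tau_max / n for j in range(n)]
    return max(grid, key=lambda t: log_prior(t, sigma))


# Standard errors of the all-cause death meta-analysis (caption of Figure 2)
sigma_death = [1.15, 1.63, 0.19]
mode_j1 = prior_mode(log_jeffreys1, sigma_death)
mode_j2 = prior_mode(log_jeffreys2, sigma_death)
```

As a simple check, consider a single study with standard error $\sigma$. The Jeffreys1 prior $\tau / (\tau^2 + \sigma^2)$ peaks at $\tau = \sigma$, while the Jeffreys2 prior $\tau (\tau^2 + \sigma^2)^{-3/2}$ peaks at $\tau = \sigma / \sqrt{2}$.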

To illustrate, Figure 1 shows both priors on $\tau$ for four meta-analyses of standardized mean differences. The meta-analyses were simulated with studies’ sample sizes, N, arising from four different distributions. Although the magnitude of the priors will of course be affected by the number of studies k, their shape is minimally affected by k, so Figure 1 depicts the priors for meta-analyses with $k=10$. Note that for each meta-analysis, the Jeffreys2 prior is somewhat narrower than the Jeffreys1 prior, suggesting that the former may provide narrower intervals; this hypothesis will be explored in more depth in the simulation study (Section 4). Both priors lead to proper posteriors if $k > 1$ (see Bodnar et al. (2017)Reference Bodnar, Link and Arendacká 15 regarding Jeffreys1 and Section 1 of the Supplementary Material regarding Jeffreys2). Additionally, both priors generalize easily to the case of meta-regression: the Jeffreys1 prior would coincide with that of Bodnar et al. (2024) for generalized marginal random effects models,Reference Bodnar and Bodnar 50 and we derive the Jeffreys2 prior for meta-regression in Section 1 of the Supplementary Material. We do not further consider meta-regression in the main text.

Figure 1 Priors for four simulated meta-analyses of standardized mean differences ($k=10$), in which the within-study sample sizes (N) were generated from four possible distributions. Studies’ standard errors were estimated using Eq. (5) and, given the data-generation parameters, were approximately equal to $2/\sqrt{N}$. Points are the maxima. The priors have been scaled to have the same maximum height.

3.2 The posterior under each prior

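For either prior, since $p(\mu, \tau) \propto p(\tau)$, the marginal posterior on $\tau$ is:Reference Bodnar, Link and Arendacká 15

$$ \begin{align*} p(\tau \mid \widehat{\boldsymbol{\theta}}) \propto p(\tau) \sqrt{\text{Var}(\mu \mid \tau, \widehat{\boldsymbol{\theta}})} \prod_{i=1}^k \left(S_i(\tau)\right)^{-1} \exp \Bigg\{ -\frac{1}{2} \sum_{i=1}^k \frac{\left( \widehat{\theta}_i - E[ \mu \mid \tau, \widehat{\boldsymbol{\theta}}] \right)^2}{\left(S_i(\tau)\right)^2} \Bigg\}, \end{align*} $$

where $E[\mu \mid \tau, \widehat{\boldsymbol{\theta}}]$ and $\text{Var}(\mu \mid \tau, \widehat{\boldsymbol{\theta}})$ are the conditional posterior mean and variance of $\mu$ given below.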

In turn, the conditional posterior of $\mu$, given $\tau$, is normal:Reference Röver 9 , Reference Bodnar, Link and Arendacká 15 , Reference Gelman, Carlin and Stern 21

$$ \begin{align*} p(\mu \mid \tau, \widehat{\boldsymbol{\theta}}) &\propto \frac{1}{\sqrt{2\pi \; \text{Var}(\mu \mid \tau, \widehat{\boldsymbol{\theta}})} } \cdot \exp \Bigg\{ -\frac{1}{2 \; \text{Var}(\mu \mid \tau, \widehat{\boldsymbol{\theta}})} \left( \mu - E[ \mu \mid \tau, \widehat{\boldsymbol{\theta}}] \right)^2 \Bigg\}, \end{align*} $$

where:

$$ \begin{align*} E[ \mu \mid \tau, \widehat{\boldsymbol{\theta}}] = \frac{\sum_{i=1}^k \widehat{\theta}_i \left(S_i(\tau)\right)^{-2} }{\sum_{i=1}^k \left(S_i(\tau)\right)^{-2}}, \; \; \text{Var}(\mu \mid \tau, \widehat{\boldsymbol{\theta}}) &= \frac{1}{\sum_{i=1}^k \left(S_i(\tau)\right)^{-2}}. \end{align*} $$

Thus, the joint posterior $p(\mu, \tau \mid \widehat{\boldsymbol{\theta}})$ can be decomposed into the two tractable components $p(\tau \mid \widehat{\boldsymbol{\theta}})$ and $p(\mu \mid \tau, \widehat{\boldsymbol{\theta}})$.Reference Röver 9 Given this observation, Röver and othersReference Röver 9 , Reference Röver and Friede 51 developed theory and software for a discrete approximation to the joint posterior $p(\mu, \tau \mid \widehat{\boldsymbol{\theta}})$ and to the marginal posterior on $\mu$, given by the mixture distribution:

$$ \begin{align*} p(\mu \mid \widehat{\boldsymbol{\theta}}) = \int_0^\infty p(\mu \mid \tau, \widehat{\boldsymbol{\theta}}) \; p(\tau \mid \widehat{\boldsymbol{\theta}}) \; d\tau. \end{align*} $$

The discrete approximation approach does not require sampling via Markov chain Monte Carlo (MCMC) and is implemented in the R package bayesmeta.Reference Röver 9 , Reference Röver and Friede 51 We use this package in our simulations and applied example.
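Here is a minimal sketch of the discrete-approximation idea. It assumes an evenly spaced grid on $\tau$, truncated at a hypothetical upper limit `tau_max`. This is not the algorithm bayesmeta uses, which chooses its support points more carefully. The code evaluates the marginal posterior of $\tau$ under the Jeffreys2 prior, with $\mu$ integrated out analytically. It then approximates $p(\mu \mid \widehat{\boldsymbol{\theta}})$ as a finite mixture of the normal conditional posteriors and reads off both marginal modes. All function names and toy inputs are hypothetical.

```python
import math


def log_jeffreys2(tau, sigma):
    """log p(tau) under the Jeffreys2 prior, up to an additive constant (tau > 0)."""
    s2 = [tau**2 + s**2 for s in sigma]                       # S_i(tau)^2
    return (math.log(tau) + 0.5 * math.log(sum(1.0 / v for v in s2))
            + 0.5 * math.log(sum(v**-2 for v in s2)))


def cond_moments(tau, theta, sigma):
    """E[mu | tau, theta] and Var(mu | tau, theta) under the flat prior on mu."""
    w = [1.0 / (tau**2 + s**2) for s in sigma]
    return sum(wi * ti for wi, ti in zip(w, theta)) / sum(w), 1.0 / sum(w)


def log_post_tau(tau, theta, sigma):
    """Unnormalized log marginal posterior of tau, with mu integrated out analytically."""
    m, v = cond_moments(tau, theta, sigma)
    s2 = [tau**2 + s**2 for s in sigma]
    return (log_jeffreys2(tau, sigma) + 0.5 * math.log(v)
            - 0.5 * sum(math.log(x) for x in s2)
            - 0.5 * sum((t - m) ** 2 / x for t, x in zip(theta, s2)))


def grid_posterior(theta, sigma, tau_max=5.0, n_tau=1000):
    """Normalized probabilities on an evenly spaced, truncated grid of tau values."""
    taus = [(j + 0.5) * tau_max / n_tau for j in range(n_tau)]
    logp = [log_post_tau(t, theta, sigma) for t in taus]
    top = max(logp)                                            # avoid underflow
    p = [math.exp(lp - top) for lp in logp]
    z = sum(p)
    return taus, [pj / z for pj in p]


def mu_density(mu, components):
    """Mixture of normals: sum over grid points of P(tau_j) * N(mu; mean_j, var_j)."""
    return sum(p * math.exp(-(mu - m) ** 2 / (2 * v)) / math.sqrt(2 * math.pi * v)
               for p, m, v in components)


# Hypothetical toy inputs (not data from the paper)
theta, sigma = [0.2, 0.9, -0.4, 0.3], [0.3, 0.4, 0.2, 0.25]
taus, probs = grid_posterior(theta, sigma)
tau_mode = taus[probs.index(max(probs))]                       # marginal mode of tau
components = [(p, *cond_moments(t, theta, sigma)) for t, p in zip(taus, probs)]
mu_grid = [-1.0 + 0.005 * i for i in range(401)]
mu_mode = max(mu_grid, key=lambda u: mu_density(u, components))  # marginal mode of mu
```

Each conditional mean $E[\mu \mid \tau, \widehat{\boldsymbol{\theta}}]$ is a weighted average of the $\widehat{\theta}_i$. The mixture's mode therefore always lies between the smallest and largest study estimates, which gives a quick sanity check.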

With approximations to the joint and marginal posteriors in hand, point estimates can be defined in terms of various measures of central tendency, such as the posterior mode, median, or mean. For either prior, $p(\mu \mid \widehat{\boldsymbol{\theta}})$ appears to be nearly symmetric in many cases (e.g., Figure 4), so the three measures of central tendency will often agree closely. However, this is not the case for $p(\tau \mid \widehat{\boldsymbol{\theta}})$, which is asymmetric under either prior. Existing work on the Jeffreys1 prior focused primarily on posterior means and medians,Reference Bodnar, Link and Arendacká 15 but we focus on posterior modes given their aforementioned theoretical advantages.Reference Firth 26 Indeed, as discussed in Section 4.4, our simulations indicated that posterior modes for $\tau$ provided substantially lower bias, root mean square error (RMSE), and mean absolute error (MAE) than did posterior means and medians. As in ML estimation, point estimates can be defined in terms of either the marginal or the joint mode. In the Bayesian context, the marginal mode of a given parameter (e.g., $\mu$) is the value that maximizes that parameter's marginal posterior, obtained by integrating over the other parameter (e.g., $\tau$). In contrast, the joint mode is the pair of values that jointly maximizes the joint posterior. We consider marginal modes in this paper to provide a more direct comparison to marginal ML estimation, which is the usual implementation for meta-analysis.

Figure 2 Priors on $\tau$ for the meta-analysis on all-cause death ($k=3, \{ \sigma_i \} = \{ 1.15, 1.63, 0.19 \}$). Points are maxima. The priors have been scaled to have the same maximum height.

Figure 3 Joint posterior under the Jeffreys2 prior for the meta-analysis on all-cause death ($k=3, \{ \sigma_i \} = \{ 1.15, 1.63, 0.19 \}$). Horizontal red line: marginal posterior mode of $\mu$. Vertical blue line: marginal posterior mode of $\tau$.

Figure 4 Marginal posteriors under the Jeffreys2 prior for the meta-analysis on all-cause death. Solid vertical lines: marginal posterior modes. Dashed vertical lines: limits of 95% intervals.

Figure 5 Interval limits greater than $RR=10$ are truncated. The exact method does not yield point estimates. CI: credible interval.

Also analogously to ML estimation, symmetric Wald credible intervals are sometimes constructed for Bayesian estimates by approximating the posterior as asymptotically normal around the posterior mode, with a variance–covariance matrix equal to the inverse of the Hessian of the negative log-posterior evaluated at the posterior mode.Reference Gelman, Carlin and Stern 21 However, just as Wald intervals around the ML estimate can perform poorly if the likelihood is asymmetric, Wald intervals around the posterior mode can likewise perform poorly if the posterior is asymmetric.Reference Greenland 52 To obtain appropriately asymmetric posterior intervals, we consider two approaches. First, a central (also called “equal-tailed”) 95% posterior interval can be obtained by taking the 2.5th and 97.5th percentiles of the estimated posterior distribution. Second, the shortest interval containing 95% of the posterior probability can be obtained numerically; for unimodal distributions, this interval is equivalent to the highest posterior density interval.Reference Gelman, Carlin and Stern 21 In our simulations and applied example, we obtain both types of intervals from the R package bayesmeta.Reference Röver 9

3.3 Theoretical and substantive distinctions between the priors

The distinction between the Jeffreys1 and Jeffreys2 priors invokes theoretical and substantive considerations that pertain in general to multiparameter Jeffreys priors. Jeffreys and others have argued that multiparameter Jeffreys priors are appropriate if one wishes to estimate all of the parameters (i.e., both $\mu$ and $\tau$ in meta-analysis), but not if one wishes to estimate only a subset of the parameters (i.e., only $\mu$), with the others treated as nuisance parameters.Reference Kass and Wasserman 24 , Reference Bernardo 25 , Reference Jeffreys 53 As noted in the Introduction, a random-effects meta-analysis should generally involve estimation and reporting of $\tau$ (or related metricsReference Schünemann, Higgins, Vist, Higgins, Thomas, Chandler, Cumpston, Li, Page and Welch 32 , Reference Riley, Higgins and Deeks 54 , Reference Mathur and VanderWeele 55 ) in addition to $\mu$, which suggests consideration of the Jeffreys2 prior. On the other hand, in general location-scale problems, Jeffreys recommended obtaining the prior with respect to only the scale parameters, holding constant the location parameters.Reference Kass and Wasserman 24 , Reference Jeffreys 53 This would correspond to the Jeffreys1 prior. Jeffreys’ recommendation was motivated by problems that can arise when the number of location parameters increases with the sample size, similarly to the well-known Neyman–Scott problem in which the ML estimator fails to be consistent.Reference Kass and Wasserman 24 , Reference Jeffreys 53 Interestingly, Firth later showed that in a specific, severe version of the Neyman–Scott problem, the multiparameter Jeffreys prior (i.e., the Firth correction) in fact leads to a consistent and exactly unbiased estimator.Reference Firth 26 This was unexpected given that the asymptotic arguments justifying the Firth correction are violated with an increasing number of parameters.Reference Firth 26 Of course, in the present setting of random-effects meta-analysis, the number of parameters is fixed, so this potential issue does not arise in the first place. Our view is that existing substantive and theoretical considerations do not clearly rule out either prior as inappropriate for random-effects meta-analysis, so our simulation study evaluates both.

4 Simulation study

We designed the simulation study to closely parallel that of Langan et al. (2019),Reference Langan, Higgins and Jackson 7 which in turn was designed to address many of the limitations of previous simulation studies.Reference Langan, Higgins and Simmonds 11 As detailed below, we considered meta-analyses with binary outcomes (with effect sizes on the log-odds ratio scale) and with continuous outcomes (with effect sizes on the Hedges’ g scaleReference Hedges 56 ), with as few as 2 studies, with varying amounts of heterogeneity, with varying means and outcome probabilities (for binary outcomes), and with varying distributions of within-study sample sizes. Because we assessed a variety of parametric, semiparametric, and nonparametric methods, we preliminarily investigated robustness to parametric misspecification by considering exponentially distributed population effects in addition to normally distributed effects.

4.1 Point and interval estimation methods

Table 1 lists the methods assessed in our simulation study. We assessed both Jeffreys priors. For point estimation under each prior, we primarily considered marginal posterior modes but secondarily investigated posterior means and medians (Section 2.2 of the Supplementary Material). Regarding interval estimation for $\mu$, central and shortest intervals were generally quite similar, so we only show results for shortest intervals. Regarding interval estimation for $\tau$, we considered both types of intervals for each prior, termed “Jeffreys1-shortest,” “Jeffreys1-central,” “Jeffreys2-shortest,” and “Jeffreys2-central.”

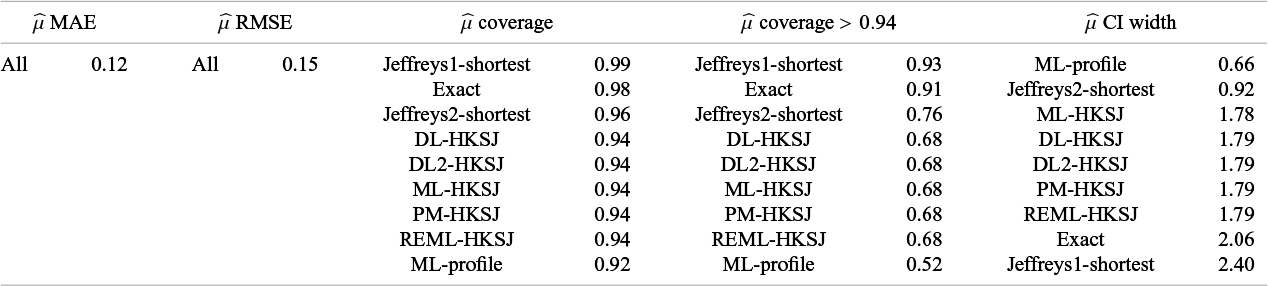

Table 1 Methods assessed in simulation study

$^{a}$In pilot tests for the scenarios with $k=10$, the bootstrap methods were not competitive with other methods, so these computationally intensive methods were not run for other sample sizes.

We compared the performance of both Jeffreys priors to that of several existing frequentist methods that were described in Section 2. We selected methods that have performed well in existing, large simulation studies or that have desirable theoretical properties, such as providing appropriately asymmetric intervals for $\tau $.Reference Harville 6 , Reference Langan, Higgins and Jackson 7 , Reference Michael, Thornton and Xie 18 , Reference Guolo 39 , Reference Cornell, Mulrow and Localio 48 , Reference Brockwell and Gordon 57 For point estimation, the comparison methods were ML estimation, REML, DL, DL2, and PM. Regarding interval estimation for $\mu $, we considered HKSJ intervals for each frequentist estimation method, ML profile intervals (ML-profile), exact intervals,Reference Michael, Thornton and Xie 18 nonparametric BCa bootstrap intervals, and nonparametric percentile bootstrap intervals.Reference Efron 45 , Reference Carpenter and Bithell 46 Regarding interval estimation for $\tau $, we considered Q-profile intervals for each frequentist estimation method, as well as ML-profile and both bootstrap intervals. We implemented all frequentist methods and intervals using the R package metafor,Reference Viechtbauer 58 with the following exceptions: we implemented ML-profile using custom R code, the exact method using the R package rma.exact,Reference Michael, Thornton and Xie 18 and the bootstrap methods using the R package boot.Reference Canty and Boot 59
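To make two of the comparison methods concrete, the sketch below computes the DL heterogeneity estimate and an HKSJ interval for $\mu $ from the standard textbook formulas. It is an illustrative Python sketch, not the metafor implementation used in the study (where we believe the analogous call is `rma(method = "DL", test = "knha")`); the function names and data are hypothetical, and the $t$ critical value is supplied by the caller to keep the example dependency-free. The HKSJ variance shown is the untruncated form, and implementations can differ in such details.

```python
import math


def dersimonian_laird(y, v):
    """DL moment estimate of tau^2 and the random-effects inverse-variance mean."""
    k = len(y)
    w = [1.0 / vi for vi in v]                                # fixed-effect weights
    sw = sum(w)
    mu_fe = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - mu_fe) ** 2 for wi, yi in zip(w, y))   # Cochran's Q
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c)                        # truncated at zero
    w_re = [1.0 / (vi + tau2) for vi in v]                    # random-effects weights
    mu = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    return mu, tau2, w_re


def hksj_interval(y, v, t_crit):
    """HKSJ interval for mu around the DL estimate; t_crit is the t quantile with k - 1 df."""
    k = len(y)
    mu, _, w_re = dersimonian_laird(y, v)
    var_mu = sum(wi * (yi - mu) ** 2 for wi, yi in zip(w_re, y)) / ((k - 1) * sum(w_re))
    half_width = t_crit * math.sqrt(var_mu)
    return mu - half_width, mu + half_width


# Hypothetical data: k = 5 estimates, each with sampling variance 0.04.
y = [-0.5, 0.0, 0.5, 1.0, 1.5]
v = [0.04] * 5
mu, tau2, _ = dersimonian_laird(y, v)
print(mu, tau2)                     # about 0.5 and about 0.585
print(hksj_interval(y, v, 2.776))   # 2.776 is roughly the 0.975 quantile of t with 4 df
```

Because HKSJ rescales the variance by the observed spread of the estimates and uses a $t$ reference distribution, it is typically wider than the Wald-type interval when $k$ is small.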

4.2 Data generation

Table 2 summarizes the simulation parameters we manipulated, which were similar to those of Langan et al.’s (2019) simulation study.Reference Langan, Higgins and Jackson 7 We considered continuous outcomes with point estimates on the Hedges’ g scaleReference Hedges 56 as well as binary outcomes with point estimates on the log-odds ratio scale. We considered both normally distributed and exponentially distributed population effects; in the latter case, the assumptions for all point estimators except the method-of-moments estimators were violated. Statistical theory suggests that all methods would perform comparably for very large meta-analyses with normal effects, and accordingly our focus is on point and interval estimation for smaller meta-analyses ($k \le 20$). Our primary simulations reported in the main text are those with $k \in \{ 2, 3, 5, 10, 20\}$. We additionally ran simulations with $k=100$ to confirm asymptotic behavior (Section 3 of the Supplementary Material). Because the bootstrap intervals required much more computational time than the other methods, we first pilot-tested them in all scenarios with a single sample size ($k=10$) to assess whether these methods were competitive with other methods.

Table 2 Possible values of simulation parameters

$^{a}$Results for scenarios with $k=100$ appear in the Supplementary Material; these scenarios are excluded from aggregated results in the main text.

Data generation proceeded as follows. For each simulation iterate, we generated a meta-analysis whose underlying population effects ($\mu _i$) were either normal or exponential. Normal population effects were generated as $\mu _i \sim N \left ( \mu , \tau ^2 \right )$, where we varied $\mu $ and $\tau $ as indicated in Table 2. Exponential population effects were generated from an appropriately scaled and shifted distribution to achieve the desired population moments, $\mu $ and $\tau ^2$. For each study in the meta-analysis, we generated a total sample size $N$ from one of the four distributions listed in Table 2. We then simulated individual participant data, such that $N/2$ participants were allocated to a treatment group, and the other $N/2$ to a control group. In scenarios with a continuous outcome, we simulated outcomes with a mean of 0 in the control group and $\mu _i$ in the treatment group, and with a standard deviation of 1 within each group. We then estimated the standardized mean difference using the Hedges’ g correction.Reference Hedges 56 , Reference Viechtbauer 58 We used the standard large-sample approximation for studies’ standard errors (Eq. (8) in Hedges (1982)Reference Hedges 60 ):

$$ \begin{align} \widehat{\sigma}_i = \sqrt{ \frac{N^c + N^t}{N^c N^t} + \frac{\widehat{\theta}_i^2}{2(N^c + N^t)} } = \sqrt{ \frac{8 + \widehat{\theta}_i^2}{2N} } \end{align} $$

where $N^c$ and $N^t$ are the within-group sample sizes, which were both equal to $N/2$ in our simulations. The expectation of this estimator is approximately $\sqrt {(8 + \mu ^2)/(2N)}$.
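The continuous-outcome data-generating steps described above can be sketched as follows. This is a hypothetical Python illustration, not the authors' R code. It assumes that the exponential effects are an exponential with scale $\tau $ shifted by $\mu - \tau $ (one way to obtain mean $\mu $ and variance $\tau ^2$). It also uses the common approximation $J = 1 - 3/(4\,\mathrm{df} - 1)$ for the Hedges’ g correction and plugs $g$ in for $\widehat{\theta}_i$ in the standard-error formula. The function names and parameter values are illustrative.

```python
import math
import random
import statistics


def draw_population_effect(mu, tau, dist, rng):
    """Draw one mu_i with mean mu and SD tau (normal or shifted exponential)."""
    if tau == 0:
        return mu
    if dist == "normal":
        return rng.gauss(mu, tau)
    if dist == "exponential":
        # Exponential(scale = tau) has mean tau and SD tau, so shifting it by
        # (mu - tau) gives mean mu while keeping the SD equal to tau.
        return (mu - tau) + rng.expovariate(1.0 / tau)
    raise ValueError(f"unknown distribution: {dist}")


def simulate_continuous_study(mu_i, n_total, rng):
    """Hedges' g and its large-sample SE for one study with N/2 participants per arm."""
    n_arm = n_total // 2
    control = [rng.gauss(0.0, 1.0) for _ in range(n_arm)]
    treated = [rng.gauss(mu_i, 1.0) for _ in range(n_arm)]
    # Equal arm sizes, so the pooled variance is the mean of the two sample variances.
    sd_pooled = math.sqrt((statistics.variance(control) + statistics.variance(treated)) / 2)
    d = (statistics.mean(treated) - statistics.mean(control)) / sd_pooled
    # Common approximation to the small-sample correction factor, with df = N - 2.
    df = 2 * n_arm - 2
    g = (1.0 - 3.0 / (4.0 * df - 1.0)) * d
    # Large-sample SE from the equation above with N^c = N^t = N/2.
    se = math.sqrt((8.0 + g ** 2) / (2.0 * (2 * n_arm)))
    return g, se


rng = random.Random(2024)
mu_i = draw_population_effect(mu=0.5, tau=0.2, dist="exponential", rng=rng)
print(simulate_continuous_study(mu_i, n_total=100, rng=rng))
```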

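In scenarios with a binary outcome, we simulated outcomes from a logistic model such that:

$$ \begin{align} \operatorname{logit} P(Y = 1 \mid X) = \operatorname{logit} P(Y = 1 \mid X = 0) + \mu _i X \end{align} $$

where $X$ equals 1 for participants in the treatment group and 0 for those in the control group, and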

$P(Y = 1 \mid X = 0)$ was a scenario parameter that we manipulated among the values listed in Table 2. We then estimated the odds ratio; to handle zero cell counts, we added 0.5 to each cell of a study’s 2×2 table whenever any cell had a count of zero.Reference Viechtbauer 58
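A single binary-outcome study, together with the 0.5 zero-cell correction, might be simulated as in the sketch below. It assumes the logistic model takes the form $\operatorname{logit} P(Y = 1 \mid X) = \operatorname{logit} p_0 + \mu _i X$, with $p_0 = P(Y = 1 \mid X = 0)$ and $X$ the treatment indicator. The function names are hypothetical, and the Woolf standard error $\sqrt{1/a + 1/b + 1/c + 1/d}$ is an assumption of this sketch, because the text does not state how within-study variances of the log-odds ratio were computed.

```python
import math
import random


def simulate_binary_study(mu_i, n_total, p0, rng):
    """2x2 counts for one study under logit P(Y=1|X) = logit(p0) + mu_i * X."""
    n_arm = n_total // 2
    logit_p1 = math.log(p0 / (1.0 - p0)) + mu_i
    p1 = 1.0 / (1.0 + math.exp(-logit_p1))
    events_t = sum(rng.random() < p1 for _ in range(n_arm))
    events_c = sum(rng.random() < p0 for _ in range(n_arm))
    # (a, b) = treatment events / non-events; (c, d) = control events / non-events
    return events_t, n_arm - events_t, events_c, n_arm - events_c


def log_odds_ratio(a, b, c, d):
    """Log OR with 0.5 added to every cell when any cell is zero; Woolf SE (assumed)."""
    if 0 in (a, b, c, d):
        a, b, c, d = a + 0.5, b + 0.5, c + 0.5, d + 0.5
    yi = math.log((a * d) / (b * c))
    se = math.sqrt(1.0 / a + 1.0 / b + 1.0 / c + 1.0 / d)
    return yi, se


rng = random.Random(11)
table = simulate_binary_study(mu_i=math.log(2.0), n_total=80, p0=0.3, rng=rng)
print(table, log_odds_ratio(*table))
```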

We expected that for binary outcomes and small within-study sample sizes, certain extreme combinations of scenario parameters (e.g., $N=40$ and $\mu =2.3$, corresponding to an extreme odds ratio of approximately 10) would result in biased within-study odds ratios.Reference Firth 26 , Reference Nemes, Jonasson and Genell 61 In pilot simulations, we identified combinations of scenario parameters that resulted in absolute within-study bias greater than 0.05. We excluded these combinations because our focus is on bias arising from meta-analytic estimation methods rather than from within-study bias. After these exclusions, we ultimately simulated 240 unique scenarios for continuous outcomes and 2267 for binary outcomes.
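The pilot screening for within-study bias can be approximated by Monte Carlo. The sketch below is a hypothetical Python illustration. It assumes that bias is the average estimated log-odds ratio minus a fixed true log-odds ratio $\mu _i$, with the same 0.5 zero-cell correction applied. The replicate counts and the baseline risk `p0` are arbitrary illustrative choices, not the values used in the pilot simulations.

```python
import math
import random


def _log_or(a, b, c, d):
    """Log odds ratio with the 0.5 zero-cell correction described above."""
    if 0 in (a, b, c, d):
        a, b, c, d = a + 0.5, b + 0.5, c + 0.5, d + 0.5
    return math.log((a * d) / (b * c))


def within_study_bias(mu_i, n_total, p0, reps=5000, seed=0):
    """Monte Carlo estimate of E[estimated log OR] - mu_i for a fixed true log OR."""
    rng = random.Random(seed)
    n_arm = n_total // 2
    p1 = 1.0 / (1.0 + math.exp(-(math.log(p0 / (1.0 - p0)) + mu_i)))
    total = 0.0
    for _ in range(reps):
        a = sum(rng.random() < p1 for _ in range(n_arm))  # treatment-arm events
        c = sum(rng.random() < p0 for _ in range(n_arm))  # control-arm events
        total += _log_or(a, n_arm - a, c, n_arm - c)
    return total / reps - mu_i


def keep_scenario(mu, n_total, p0, threshold=0.05, **mc_options):
    """Hypothetical screening rule: keep a combination only if |bias| <= threshold."""
    return abs(within_study_bias(mu, n_total, p0, **mc_options)) <= threshold


# N = 40 with mu = 2.3 is the text's example of an extreme combination;
# p0 = 0.5 is an arbitrary illustrative baseline risk.
print(within_study_bias(2.3, 40, 0.5))
print(keep_scenario(0.5, 2000, 0.3, reps=400))
```

Fixing the seed makes the screening decision reproducible; in practice the number of replicates would need to be large enough that Monte Carlo error is small relative to the 0.05 threshold.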