Writing a book is a horrible, exhausting struggle, like a long bout of some painful illness. One would never undertake such a thing if one were not driven on by some demon whom one can neither resist nor understand.

In this chapter, we briefly review equilibrium thermodynamics, chemical transport, nonequilibrium thermodynamics and statistical thermodynamics. We explain why it is crucial to find a relationship with mechanics and why physicochemical mechanics is needed for this.

1.1 Chemical Transport

All physicochemical processes in Nature occur in space and time, and transformations of energy and matter determine them. According to thermodynamics, the evolution of an isolated system should lead to an increase in entropy. According to mechanics, a body under the influence of mechanical and other forces moves toward a decrease in potential energy. Do these two principles contradict one another? Can they be combined into one? This is the most general question answered in this book.

Chemistry is one of the well-known examples where it is often assumed that transformations of matter take place in a homogeneous phase, such as a gas or liquid. This is not an unreasonable assumption because homogeneity can usually be achieved simply by shaking or stirring. Nevertheless, the validity of this assumption is violated by the vast majority of transport phenomena, which rely intrinsically on the presence of external and internal fields, asymmetry and inhomogeneity. One point in a homogeneous medium has the same properties as any other, but the same is not true for transport. An important and well-known example is diode-based rectification: the difference of material properties at two points leads to different currents in one or another direction when the voltage changes only its sign, not its magnitude. The world around us is full of examples of inhomogeneous effects leading to transport processes.

A homogeneous chemical reaction is a scalar process, but the rate of chemical transport is a vector. It may be driven by the gradients of external physical fields and by the gradients of internal properties, such as polarity. As a result, one position in space is different from another, and in this sense, the system is not homogeneous anymore. As will be demonstrated, this leads to essential changes of thermodynamic description because intensive parameters, such as field potentials, temperature and even molar entropy, become functions of coordinates.

To be sure, thermodynamic equations can be specialized to interfaces, but as an equilibrium theory, classical thermodynamics is fundamentally not suited for kinetics of chemical reactions and inhomogeneous transport processes, some of which occur far from equilibrium. In the hierarchy of ideas that describe transformations of energy and matter, from mechanics to thermodynamics and statistical mechanics, statistical mechanics is generally chosen as the right level to deal with inhomogeneous chemical systems and transport properties, although it usually treats more degrees of freedom than are necessary for a practical macroscopic or coarse-grained description of transport (namely, every position, momentum and even spin). While statistical mechanics can provide complete and formally elegant descriptions, these descriptions are often exceedingly complex. Because of that, mass- and energy-transport textbooks often fall either into the category of empiricism or into full-blown discussions of Fokker–Planck equations and correlation functions underlying Onsager’s reciprocal relations, so that they sometimes leave one with a feeling of overkill. It would be good to have a description that is simpler than the one provided by theoretical physicists and more suitable for the processes important to specialists in physical chemistry.

What if we have a microscopy experiment where we need to describe the reaction and diffusion of labeled proteins in a cell? Or transport near two immiscible interfaces needs to be explained? Or barodiffusion in a magnetic field? Or perhaps we’d just like to derive Faraday’s law or transition state theory of chemical reactions in a few short lines while eliminating some unnecessary assumptions usually made when deriving these models. It would then be nice to have a middle ground theory between statistical mechanics and thermodynamics, which can deal with transport processes and show how equilibrium is attained. Traditionally these questions have been considered in the field of nonequilibrium thermodynamics.

Physicochemical mechanics (PCM) occupies such a ground between statistical mechanics and thermodynamics for transport and reactions. As we shall see in Chapter 6, only two postulates are added to mechanics and the conventional postulates of thermodynamics. These postulates of “physicochemical mechanics” can, of course, be derived from statistical mechanics (Chapter 3). While PCM is not as general as statistical mechanics, one gets a nice bonus: the derivation of myriad laws, from nonequilibrium Fick’s law to the equilibrium Nernst equation, to activated rate theory, becomes extremely simple. External driving forces can be added to the nonisolated systems in an entirely systematic fashion, and relations between the rates of different transport processes become clear. So, if the reader wants to find an equation for transport driven in a thin film by surface tension in a magnetic field, look no further: it can be derived easily in a few steps from PCM.

1.2 From Mechanics to Statistical Mechanics to Thermodynamics and Physicochemical Mechanics

Let us now consider the hierarchy from mechanics to statistical mechanics, thermodynamics and finally to PCM (the topic of this book). We will start with mechanics, which describes the movement of macroscopic objects in space. Mechanics is a complete and self-consistent area of science because it can predict the position of a body if we know initial coordinates and speed, plus the force field. It is a simple, powerful and elegant system of fundamental ideas that has already become an essential part of human thinking about Nature. It became a component of human culture, of our intuition.

In mechanics, the reason for changes in movement is an applied force. Newton’s second law defines a force F via mass m (amount of matter) and acceleration a: F = ma. Acceleration is a vector directly proportional to force. If there are several forces, they should be added, while the shape and mass of a body are usually considered constant.

We know that velocity can change with position in space and in time. Acceleration is the derivative of velocity with respect to time, while the object’s path is the integral of velocity over time. Newton’s first law states that if the total force is zero, then the velocity is constant and not necessarily equal to zero. In an ideal situation without friction, each body remains in uniform motion unless an external unbalanced force acts on it.

Newton’s third law describes interactions leading to the force. It states that every action has an equal and opposite reaction. This means that whenever a first body exerts a force F on a second body, the second body exerts a force −F on the first body. The mutual forces between two bodies are equal, have opposite directions and are collinear.

Most common in mechanics are conservative forces, given by the negative derivative of potential energy in space and independent of velocity. These will play an essential role in PCM. But so will another type of force: ironically, before Newton, Aristotle’s thinking was that without an acting force, movement should eventually stop. Is this logic wrong? No, it is not! If the movement is not in a vacuum, we have additional interactions, leading to friction and energy dissipation as heat. When the velocity goes up, the friction in the fluid also goes up. Very soon, acting and counteracting forces become equal, and the system reaches the steady state, in which the body moves without any acceleration under a constant force. A well-known example is Stokes’ law for a sphere falling in liquid, where the steady-state speed, and not the acceleration, is proportional to the gravitational force. Newtonian dynamics can be formally amended to include friction effects, putting Aristotle’s dynamics on a mathematical footing. As described in Chapter 3, the Langevin equation is an example of such a friction-dependent extension of mechanics. Friction plays a central role in PCM, as envisioned long ago in Aristotle’s dynamics.
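The approach to a steady state under friction can be illustrated with a minimal numerical sketch. It assumes a sphere subject to a constant force F and a Stokes friction force proportional to velocity, so that m dv/dt = F − γv with γ = 6πηr. The function names and all numerical values (radius, viscosity, mass, force) are hypothetical choices made only for illustration; they are not taken from this book.

```python
import math

def stokes_friction_coefficient(viscosity, radius):
    """Stokes friction coefficient 6*pi*eta*r for a sphere, in kg/s."""
    return 6.0 * math.pi * viscosity * radius

def velocity_after(force, mass, friction, dt, steps):
    """Integrate m dv/dt = F - friction*v by explicit Euler, starting from rest."""
    v = 0.0
    for _ in range(steps):
        v += dt * (force - friction * v) / mass
    return v

# Illustrative values (assumptions, not from the text)
radius = 1.0e-3     # m
viscosity = 1.0     # Pa*s, a viscous liquid
mass = 1.0e-5       # kg
force = 5.0e-5      # N, constant applied force

friction = stokes_friction_coefficient(viscosity, radius)
v_steady = force / friction   # "Aristotelian" limit: speed proportional to force
v_numeric = velocity_after(force, mass, friction, dt=1.0e-5, steps=2000)
v_double = velocity_after(2.0 * force, mass, friction, dt=1.0e-5, steps=2000)

print(v_steady, v_numeric, v_double)
```

After a short transient of duration about m/γ, the integrated velocity settles at F/γ, and doubling the force doubles the steady-state speed rather than the acceleration.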

For macroscopic systems with constant mass and not too high speed, the dynamics can be described based on either forces or energies. Nevertheless, conservation of energy, which is helpful for analysis of isolated systems, was not firmly established until the nineteenth century, long after Newton. This long delay occurred because of the difficulty in understanding the role of nonmechanical forms of energy such as heat, which is one of the major subjects of thermodynamics.

In theoretical mechanics, which considers a system of particles, this system is most easily described based on Lagrange’s and Hamilton’s equations, from which Newton’s laws may be derived. As it turns out, Lagrangian dynamics can be extended to describe systems with friction and energy dissipation. To do this, Rayleigh’s “dissipation function” is usually added as an additional term to Lagrange’s equation. Dissipation usually leads to a temperature increase. Although the temperature is not a dynamical variable in mechanics, it is an emergent collective phenomenon meaningful for many particles. When temperature is used to describe a single particle, that particle is assumed to be in contact with a “heat bath” of many particles.

The next step beyond inhomogeneity is the introduction of restrictions and even constructions, which are used in various chemical and engineering applications. The most straightforward constructions imposing some spatial restrictions on chemical processes include the walls of a flask or a pipe transporting a fluid. More advanced constructions often can move in space or even change in time. These constructions can do valuable work, such as mechanical work done by a pump or chemical and electric work in an electrochemical fuel cell. Constructions may be very large, which is common in civil engineering; they may be very small, like microfluidic devices hosting a single cancer cell, or even at the molecular scale, like carbon nanotubes in nanoengineering. Even biological code is a construction. Because of mobility constraints, the genetic code can be written and maintained as a stable sequence of nucleotides in a DNA double helix. Mutations of this code can result in different genetic pathologies or find applications in agriculture, biotechnology and medicine.

Initially, thermodynamics was developed to describe heat engines, which are very important constructions. The primary question it answered was “How much?” For example, how much work can be done by an engine, how much the system will finally change when it reaches equilibrium, how much product can a chemical reaction yield once equilibrium is reached? Classical equilibrium thermodynamics provides the natural way to characterize the efficiency of a system when heat or other forms of stored energy are converted into work.

Thermodynamics is a highly averaged theory of matter. Chemical thermodynamics deals not with one body but with a system of very many bodies, averaging over individual atoms or molecules. The new fundamental function introduced in thermodynamics is entropy. The first law of thermodynamics tells us about energy conservation, and the second law tells us that entropy always increases in spontaneous processes in isolated systems. The fundamental statement of thermodynamics is that all the long-time and long-distance information about any system near equilibrium is encoded in entropy S, but this property, like temperature, does not exist in classical mechanics. For an isolated system, any spontaneous process leads to an entropy increase, which gives information about the direction of the process. One of the thermodynamic postulates states that entropy is an additive function in macroscopic systems, where surface effects do not play an essential role.

The principal value of Gibbs’ equilibrium thermodynamics is its ability to transform certain macroscopic characteristics of a system into other macroscopic observables for the same system so that it is not necessary to measure all of them directly. Thus, entropy cannot be measured, but its changes may be calculated based on temperature, energy, heat and other macroscopic characteristics of the system.

Ludwig Boltzmann connected mechanics and thermodynamics in the late nineteenth century. The theory of statistical mechanics he created provides a way to calculate entropy, and thus other thermodynamic properties, from the microscopic behavior of a system with many atoms or molecules. Statistical mechanics does add a critical and restrictive additional postulate on top of the framework of mechanics: the idea that at constant energy all possible configurations of a system (a so-called microcanonical system) are equally likely to occur. Suppose that many microstates, labeled by the index “i,” can realize the same macroscopic system (e.g., different configurations of gas molecules in a room, which have the same volume and temperature). In that case, the entropy is generally defined as S = −kB Σ pi ln pi, where pi is the probability of microstate “i.” In the microcanonical case, this simplifies to

$$S = k_B \ln W,$$

that is, a relation between entropy and the logarithm of the number of possible configurations W of the system at constant energy, multiplied by the Boltzmann constant kB. If the system is in thermal equilibrium, then it is also possible to calculate other thermodynamic properties.
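The reduction of the general entropy expression to the microcanonical one can be checked numerically. The sketch below evaluates −kB Σ pi ln pi for W equally likely microstates and compares it with kB ln W; it also shows that a nonuniform distribution over the same W microstates has lower entropy. The function names and the choice W = 1000 are illustrative assumptions.

```python
import math

K_B = 1.380649e-23  # J/K, Boltzmann constant

def gibbs_entropy(probabilities):
    """General definition: S = -kB * sum(p_i * ln p_i), skipping empty microstates."""
    return -K_B * sum(p * math.log(p) for p in probabilities if p > 0.0)

def boltzmann_entropy(n_configurations):
    """Microcanonical case: S = kB * ln W."""
    return K_B * math.log(n_configurations)

W = 1000                                     # illustrative number of microstates
uniform = [1.0 / W] * W                      # all microstates equally likely
skewed = [0.5] + [0.5 / (W - 1)] * (W - 1)   # one microstate strongly favored

s_uniform = gibbs_entropy(uniform)
s_skewed = gibbs_entropy(skewed)
s_boltzmann = boltzmann_entropy(W)

print(s_uniform, s_boltzmann, s_skewed)
```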

Equilibrium thermodynamics does not tell how fast the events happen, and time is not present in equilibrium thermodynamics. In equilibrium, all forces are balanced, and there is no time evolution of the macroscopic thermodynamic observables. Conservation of energy also provides no clues to predict the direction and rate of a process. That is why the more proper name of this area of science should be thermostatics, not thermodynamics.

Nonequilibrium statistical mechanics moves further, allowing the calculation of the rates of processes. Its major postulate is that it is still possible to use entropy and other thermodynamic functions locally. This postulate is enabled by the concept of “baths” taken over from thermodynamics. As long as it is in contact with a heat bath, even a single molecule can be treated by statistical mechanics. Thus, statistical mechanics occupies a fertile middle ground between mechanics and thermodynamics.

Nonequilibrium thermodynamics is another essential part of modern thermodynamics because of two main lines of thought. First of all, based on the concepts developed mainly by Nobel Prize laureate Lars Onsager, nonequilibrium thermodynamics describes many linear mass transport processes, such as Fick’s law of diffusion, charge transfer (Faraday’s law), pressure-driven volumetric flux (Darcy’s law) and interactions of such processes. The second reason is the ability to explain and, in some cases, even to predict nonlinear phenomena, including oscillations and spontaneous formation of structure, which is especially important for biological applications. This area was developed mainly in Europe (the “Belgian school,” including an immigrant from Russia, Nobel Prize laureate Ilya Prigogine, Figure 1.1).

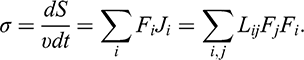

There are many examples where thermodynamics exploits mechanical terminology. Even the term thermodynamic potential is based on the analogy with mechanical potential energy. Nonequilibrium thermodynamics introduces new parameters, called general thermodynamic forces, though they do not have the standard units of force (newtons in SI (Système International) units)! Any thermodynamic force can produce different flows. In general, the flows can be nonlinear functions of the forces. However, near equilibrium, it is possible to expand the nonlinear functions in a Taylor series, neglect the higher-order terms and use only the linear approximation. Thus, in linear thermodynamics, when a system is close to equilibrium, a general description of its behavior in space and time is based on linear relations between thermodynamic forces Fj and flows Ji of components “i” of the system,

$$J_i = \sum_j L_{ij} F_j$$

(Kondepudi & Prigogine, 1998). The expression “close to equilibrium” loosely means that energy deviations from the equilibrium are less than RT, that is, less than 2.5 kJ/mol at room temperature.
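The quoted value of RT is easy to verify. The short check below assumes that “room temperature” means 298.15 K, which is our choice for illustration.

```python
R = 8.314462618   # J/(mol*K), universal gas constant
T_ROOM = 298.15   # K, assumed value for room temperature

rt_kj_per_mol = R * T_ROOM / 1000.0
print(round(rt_kj_per_mol, 2))  # 2.48
```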

Entropy production is the crucial element of nonequilibrium thermodynamics, and it is used to derive many basic equations. Even the definition of thermodynamic forces and the way to find them are based on the equation for entropy production

$\sigma$ per unit of time t and unit volume

$$\sigma = \sum_i J_i F_i \qquad (1.1)$$

The local rate of energy dissipation or heat production can be calculated if we multiply both sides by temperature T.

The coefficients Lij are called Onsager phenomenological coefficients (Onsager, 1931). The coefficients with the repeated subscript indices i = j describe more common relations of conjugate forces and flows and are always positive. These coefficients are analogous to the conductance in Ohm’s law, where electric current (a flow) is proportional to voltage difference (a driving force). In other cases, i ≠ j. Examples are thermoelectric and thermodiffusion phenomena, where the thermal gradient leads not only to heat transport but also to an electric current and mass transport, and vice versa. The cross coefficients with i ≠ j may be positive or negative, and simple theory often cannot predict the value or even the sign of the coefficients. The only thing we know is that Lij = Lji. The derivation of this relation, which is known as Onsager’s reciprocal relation, is based on an analysis of thermal molecular fluctuations and entropy production near equilibrium (Onsager, 1931).
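A small numerical sketch may help to fix these ideas. It takes two coupled flows with a symmetric matrix of coefficients, computes each flow as a sum of Lij Fj over all forces, and evaluates the entropy production as the sum of the products of flows and their conjugate forces. The coefficient and force values are arbitrary illustrative numbers in arbitrary units. The diagonal coefficients are positive, the cross coefficient is negative, and the values satisfy L11 L22 > L12², which keeps the entropy production positive for any nonzero forces.

```python
def flows(L, forces):
    """Linear flux-force relations: J_i = sum_j L_ij * F_j."""
    return [sum(L_ij * F_j for L_ij, F_j in zip(row, forces)) for row in L]

def entropy_production(L, forces):
    """Sum of products of flows and conjugate forces in the linear regime."""
    return sum(J_i * F_i for J_i, F_i in zip(flows(L, forces), forces))

def is_symmetric(L, tol=1e-12):
    """Check the reciprocal relation L_ij = L_ji."""
    n = len(L)
    return all(abs(L[i][j] - L[j][i]) <= tol for i in range(n) for j in range(n))

# Illustrative coefficients and forces in arbitrary units (assumed values)
L = [[2.0, -0.5],
     [-0.5, 1.0]]
F = [1.0, 3.0]

J = flows(L, F)
sigma = entropy_production(L, F)

print(J)      # [0.5, 2.5]
print(sigma)  # 8.0
```

Note that the second force contributes to the first flow through the cross coefficient, even though it is not the conjugate force of that flow.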

Linear nonequilibrium thermodynamics answers many vital questions, but it is not very popular, truth be told (Demirel, 2014). One of the reasons is that this area was developed based on the idea of entropy increase in spontaneous processes. This representation is generally referred to as entropy-based. The concept of entropy is not a simple one to understand. The presentation based on entropy is not the most natural approach from the historical perspective. In comparison, the first law of thermodynamics, which is the conservation of energy, looks natural.

The idea of general thermodynamic forces also is not easily supported by intuition based on mechanical forces. Thermodynamic forces have units different from those of the mechanical force. For example, for gas expansion, the general thermodynamic force, which is conjugate to volume, has units of pressure/absolute temperature; for electric current it is voltage/temperature; while for heat conduction the thermodynamic force conjugate to energy has the dimension 1/temperature (Kondepudi & Prigogine, 1998, pp. 95, 346). One more thermodynamic force is the partial derivative of entropy with respect to the number of particles, and it is equal to

$-\mu/T$, where μ is the chemical potential.

Dealing with membrane transport, one can also find the terminology where a temperature gradient is called a driving force for heat conduction and membrane distillation; a gradient of electric potential is a driving force for electric current and electrodialysis, and a concentration gradient is a driving force for dialysis and diffusion. Proton motive force in bioenergetics is the difference of electrochemical potentials of H+ ions through a membrane. It is essential to avoid confusion in terminology. Ilya Prigogine used the term “cause” instead of thermodynamic force in some of his publications. We will use the term “driving factor” to make sure it is not confused with mechanical forces with units of newton or molar forces that we will introduce later with units of newton/mole.

J. W. Gibbs wrote: “If we wish to find in rational mechanics an a priori foundation for the principles of thermodynamics, we must seek mechanical definitions of temperature and entropy” (Gibbs, 1948). Gibbs remarked on the occasion of receiving the Rumford Medal (1881): “One of the principal objects of theoretical research in any department of knowledge is to find the point of view from which the subject appears in its greatest simplicity.” Classical Newtonian mechanics is based on three major laws. Equilibrium Gibbs thermodynamics also has three basic laws. Statistical physics is based on two postulates. The situation is different in chemical kinetics and mass transport, which include many seemingly unrelated equations, often carrying the names of great scientists. That is why there is room left for a theory between statistical mechanics and thermodynamics. Physicochemical mechanics will reduce this description to thermodynamics plus two transport postulates. The goal of PCM is to describe transport in systems out of equilibrium in the presence of external and internal forces, both in steady state and equilibrium.

Calculating the rate(s) of any molecular process is possible based on nonequilibrium statistical mechanics. Still, such calculations are often rather cumbersome when the complete apparatus of statistical mechanics is used. For large systems, simulations of such processes need a lot of computer time. We will not describe this area in detail. Still, we will discuss in Chapter 3 the Langevin, Smoluchowski and Fokker–Planck equations, which describe stochastic transport processes, where friction combined with random forces due to the Brownian motion of molecules plays a role. Such transport processes, which lie between statistical mechanics and thermodynamics, are at the core of the suggested PCM theory. Sometimes the same equation is essential for different areas, and it is not surprising that it may be found in several chapters.

From an analysis of initial and final states of a system in equilibrium and ideal infinitely slow reversible processes, the theory moved to nonequilibrium states and kinetics of their changes. We will further develop a simple description of transport and other physicochemical processes based on the intuitive ideas from classical mechanics. We also build on an important work that started in the first half of the twentieth century, when thermodynamics made an essential step forward toward a unified description based on statistical mechanics and classical thermodynamics. It is also possible to think of PCM as a streamlined version of nonequilibrium thermodynamics. Why is this an intermediate description? The price paid for it is some loss of generality compared to statistical mechanics. The reward is a much simpler theory.

In many cases, instead of a description on a molecular level, it remains natural to look for a macroscopic description of nonequilibrium processes. Good accuracy is quickly obtained once the number of molecules involved is more than a few hundred, and fluctuations become relatively small compared to the mean. This book presents a simple, unifying treatment that resembles fundamental aspects of Newtonian and Aristotelian dynamics to describe chemical transport and reactions. Thus, we can tap into the intuition of readers for such concepts as “without friction, particles not subject to force keep moving,” or “with friction, particles subject to a constant force reach a constant drift velocity.” Our formulation is based on intuitive ideas about forces and energy, and for that reason, it will be called PCM.
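The statement about fluctuations can be made concrete with a simple estimate. As an illustrative assumption, suppose each of N independent molecules is found in a given half of a container with probability p = 0.5. The count in that half is then binomially distributed, and its standard deviation relative to its mean is sqrt((1 − p)/(N p)), which equals 1/sqrt(N) for p = 0.5. The function name and the chosen values of N are hypothetical.

```python
import math

def relative_fluctuation(n_molecules, p=0.5):
    """Standard deviation divided by mean for a binomial count of n molecules."""
    return math.sqrt((1.0 - p) / (n_molecules * p))

for n in (10, 100, 400, 10000):
    print(n, relative_fluctuation(n))
```

Under this assumption, a few hundred molecules already give relative fluctuations of about 5 percent, and the value decreases only as the inverse square root of N.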

As stated earlier, two new postulates make the standard thermodynamic variables more general by introducing external forces and position-dependence within the system. The first major postulate of PCM considers nonisolated systems. It introduces the physicochemical potential of each component as a local thermodynamic function of state, dependent on position within the system. Depending on the complexity of the system we want to describe, the physicochemical potential is a sum over terms depending on concentration, pressure, electric potential, entropy, surface tension, magnetic and gravitational fields, mechanical stress and so on. These terms follow the same bilinear form as “TS” and “PV” in thermodynamics. They have units of energy and serve as driving factors for mass transport or other physicochemical processes.

The second postulate is derived using ideas from statistical mechanics. It states that flux (rate of mass transport of each component or another transport process) is proportional to the gradient of the physicochemical potential introduced in the first postulate. This gradient has units of molar force. The proportionality coefficient for mass transport is Einstein mobility, determined by the Brownian motion of molecules that make up our system’s “bath.” And, of course, the product of the gradient and the mobility must be multiplied by the local concentration: no concentration means no flux.
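The two postulates above can be sketched numerically for the simplest nontrivial case. We assume here a physicochemical potential with only two terms, RT ln c + zFφ (an ideal dilute solution in an electric potential φ), and a one-dimensional flux J = −u c dμ/dx, with the mobility u chosen so that D = uRT. These forms, the symbol names and all numerical values are assumptions made for this illustration; the general formulation is given in Chapter 6 and may use different notation.

```python
import math

R = 8.314462618        # J/(mol*K), gas constant
FARADAY = 96485.33212  # C/mol, Faraday constant

def physicochemical_potential(c, phi, z, T):
    """Assumed two-term potential (J/mol): RT ln c + zF*phi, with c in mol/m^3."""
    return R * T * math.log(c) + z * FARADAY * phi

def flux(c_left, c_right, phi_left, phi_right, dx, z, T, mobility):
    """J = -u * c * (d mu / dx), estimated between two points a distance dx apart."""
    mu_left = physicochemical_potential(c_left, phi_left, z, T)
    mu_right = physicochemical_potential(c_right, phi_right, z, T)
    c_mid = 0.5 * (c_left + c_right)
    return -mobility * c_mid * (mu_right - mu_left) / dx

T = 298.15         # K (assumed)
D = 1.0e-9         # m^2/s, illustrative diffusion coefficient
u = D / (R * T)    # mobility chosen so that D = u*R*T
dx = 1.0e-6        # m

# (a) No electric field, small concentration step: compare with Fick's law.
j_pcm = flux(1.00, 1.01, 0.0, 0.0, dx, z=1, T=T, mobility=u)
j_fick = -D * (1.01 - 1.00) / dx

# (b) Potential difference chosen so that mu is uniform: the flux vanishes.
c1, c2 = 1.0, 10.0
nernst = -(R * T / FARADAY) * math.log(c2 / c1)   # volts, for z = +1
j_eq = flux(c1, c2, 0.0, nernst, dx, z=1, T=T, mobility=u)
j_no_field = flux(c1, c2, 0.0, 0.0, dx, z=1, T=T, mobility=u)

print(j_pcm, j_fick)
print(nernst, j_eq)
```

In case (a) the flux agrees with Fick's law for a small concentration difference. In case (b) a potential difference of about −59 mV balances a tenfold concentration ratio of a monovalent cation, which is the Nernst equilibrium condition.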

Combining these two postulates yields one general equation for the transport rate. Now it is possible to derive all significant and well-known physicochemical equations systematically. The success of PCM demonstrates that processes usually related to fluctuations can be understood more simply in terms of macroscopic behavior of a system. The reason for this is given by the famous fluctuation-dissipation theorem, which relates fluctuations of individual particles to the average behavior of a larger assembly of such particles. The equivalent information obtained from fluctuations versus dissipation is nowhere more evident than in PCM.

Once these postulates are accepted, a unified representation of seemingly disparate topics such as multicomponent molecular transport, colloid chemistry, transition state theory in chemical kinetics and the Nernst equation in electrochemical equilibrium is obtained. In some cases, it becomes possible to derive new equations, giving more accurate or novel explanations of existing phenomena, such as diffusion in nonhomogeneous media or the dependence of surface electric potential on the curvature of a colloid particle. As a result, all critical mass transport processes are summarized in Table 6.1, and the relations of all these processes become clear and straightforward.

Physicochemical mechanics is further used here to describe the kinetics of elementary chemical reactions, including reversible monomolecular and bimolecular reactions. These reactions usually are far from equilibrium and are difficult to explain based on linear thermodynamics. The transition state theory of a reacting particle coupled to a bath will be derived in a few lines of simple algebra with clear conditions for its range of validity. In contrast, Kramers’ more general statistical mechanical derivation takes dozens of complex steps involving complicated differential equations to reach pretty much the same conclusion. Physicochemical mechanics allows us to reformulate the classic descriptions of transition state theory in a much simpler framework and describe the rates with equations, which involve exponential terms with energy but do not explicitly involve concentrations.

Remarkably, PCM even allows the derivation of concepts such as Onsager’s relations without making any recourse to fluctuations usually used to do this. It quickly leads to the reciprocal relations for transport of different properties by the same species. Still, it also demonstrates the need for new relations for multicomponent systems, which Onsager did not address. Simultaneously, it provides a unifying description and comparison of all different mass transport laws, all transport coefficients and their relations in equilibrium. These aspects usually are not discussed in linear thermodynamics. To derive all transport coefficients, we need only molar properties and Einstein mobility of components “i” of the system, which is easily measured. Thus, the PCM approach is not just a reformulation of linear thermodynamics, dealing with transport phenomena.

In many models dealing with nonequilibrium systems, it is assumed that equilibration of temperature and pressure in the system is established much faster than equilibration of composition due to transport or chemical reactions. Pressure and temperature are the same at different system points, so viscous flow and heat transfer are not considered. The system is in so-called partial equilibrium. These approximations are not necessary for PCM, which is essential for the kinetics of chemical reactions.

Examples include thermodiffusion, pressure-driven membrane processes and even intracellular heat profiles generated by mitochondria. Thermodiffusion is the last of the classic mass transfer processes that has not been adequately explained based on traditional linear nonequilibrium thermodynamics. Now we can provide a straightforward and natural treatment for systems with temperature gradients. Both gradients of entropy and temperature become necessary for describing heat- and temperature-related transport processes, including diffusion, thermodiffusion and coupled heat transport. A decrease of the physicochemical potential for a component or an increase of the Lagrangian for a nonisolated system leads to heat dissipation and an increase of entropy, which leads to a more general formulation of the second law of thermodynamics.

Physicochemical mechanics provides a straightforward way of describing macroscopic systems out of equilibrium when diffusive transport processes are the means of restoring equilibrium. This is important in many practical applications, such as multiphase reactors where diffusion occurs; systems where interfacial transport is essential; kinetics and dynamics of reactions and transport inside cells, where diffusion or anomalous diffusion is more important than ballistic transport; and many other cases in engineering, chemistry and biology. Physicochemical mechanics is also helpful for comparing rates and relative merits of different modern separation methods, especially those based on membranes. It describes reverse osmosis and other pressure-driven processes and is more general and accurate than a popular solution-diffusion model. The PCM formulation in some cases is different in comparison to the traditional point of view, especially in ion transport through biomembranes and bioenergetics. Still, we think it can stimulate new discussions and experiments.

Physicochemical mechanics is a universal approach, and it is part of the natural dialectic spiral process in science. Starting from the lower basic mechanical steps, based on thermodynamics with some further development, it leads to the description of many complex processes, including biological membrane processes, biological evolution, social progress and even free markets, which are mentioned in Chapter 11.

Glossary of Terms

- a: acceleration
- Fj: thermodynamic force
- Ji: flow of components “i”
- kB: Boltzmann constant
- Lij: Onsager phenomenological coefficients
- m: mass (amount of matter)
- pi: the probability of microstate “i”
- P: pressure
- R: universal gas constant
- S: entropy
- T: temperature
- V: volume
- W: number of possible configurations of the system at constant energy
- μ: chemical potential
- σ: rate of entropy production